
Building a chatbot with Semantic Kernel - Part 2: Plugins

- Linda Hamilton

- 2024-12-06 07:33:15

In the previous chapter, we covered some of the basic concepts of Semantic Kernel and finished with a working Agent that could answer generic questions with a predefined tone and purpose, set through its instructions.

In this second chapter, we will add specific skills to our Librarian using Plugins.

What is a Plugin?

A Plugin is a set of functions exposed to the AI services. Plugins encapsulate functionalities, allowing the assistant to perform actions that are not part of its native behavior.

For example, with Plugins we could enable the assistant to fetch data from an API or a database. The assistant could also perform actions on behalf of the user, typically through APIs, or even update parts of the UI.

As I mentioned before, a Plugin is composed of different functions. Each function is defined mainly by:

- Description: the purpose of the function and when it should be invoked. It helps the model decide when to call it, as we will see in the Function calling section.

- Input variables: used to parameterize the function so it can be reused.

Semantic Kernel supports different types of Plugins. In this post we will focus on two of them: Prompt Plugin and Native Plugin.

Prompt plugin

A Prompt Plugin is basically a specific prompt to be invoked under concrete circumstances. In a typical scenario, we might have a complex System Prompt where we define the tone, purpose and general behavior of our agent. However, we may also want the agent to perform some concrete actions that require their own restrictions and rules. In that case, we should avoid letting the System Prompt grow indefinitely, in order to reduce hallucinations and keep the model's responses relevant and controlled. That's a perfect case for a Prompt Plugin:

- System Prompt: tone, purpose and general behavior.

- Summarization Prompt: including rules and restrictions about how to do a summary. For example, it should not be longer than two paragraphs.

A Prompt Plugin is defined by two files:

- config.json: configuration file including description, variables and execution settings:

{
  "schema": 1,
  "description": "Plugin description",
  "execution_settings": {
    "default": {
      "max_tokens": 200,
      "temperature": 1,
      "top_p": 0.0,
      "presence_penalty": 0.0,
      "frequency_penalty": 0.0
    }
  },
  "input_variables": [
    {
      "name": "parameter_1",
      "description": "Parameter description",
      "default": ""
    }
  ]
}

- skprompt.txt: prompt content in plain text. Variables from the configuration file can be accessed using the syntax {{$parameter_1}}.
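To make the {{$variable}} syntax concrete, here is a deliberately simplified renderer. It is a hypothetical helper, not Semantic Kernel's own template engine (which supports more than plain variables): it replaces each {{$name}} with the supplied argument, falling back to the default declared in config.json.

```python
import re

# Matches {{$name}}, also tolerating spaces inside the braces, e.g. {{ $name }}
VARIABLE_PATTERN = re.compile(r"\{\{\s*\$(\w+)\s*\}\}")


def render_prompt(template: str, config: dict, arguments: dict) -> str:
    """Hypothetical, simplified stand-in for Semantic Kernel's prompt rendering."""
    # Defaults come from the "input_variables" section of config.json
    defaults = {var["name"]: var.get("default", "") for var in config.get("input_variables", [])}

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        return str(arguments.get(name, defaults.get(name, "")))

    return VARIABLE_PATTERN.sub(substitute, template)


config = {
    "schema": 1,
    "description": "Plugin description",
    "input_variables": [
        {"name": "parameter_1", "description": "Parameter description", "default": ""}
    ],
}
print(render_prompt("Summarize this text: {{$parameter_1}}", config, {"parameter_1": "Hello"}))
# → Summarize this text: Hello
```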

To add a Prompt Plugin to the Kernel, we just need to specify the folder. Each function lives in its own subfolder of the plugin folder, so if we have the folder structure /plugins/plugin_name/function_name/skprompt.txt (next to its config.json), the plugin is registered as follows:

self.kernel.add_plugin(parent_directory="./plugins", plugin_name="plugin_name")
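Because each function subfolder pairs a config.json with a skprompt.txt, it is easy to use a variable in the prompt and forget to declare it. As an illustration, here is a small stdlib-only checker; find_undeclared_variables is a hypothetical helper, not part of Semantic Kernel. It walks a plugin folder and reports, per function, the {{$variables}} that are missing from input_variables.

```python
import json
import re
from pathlib import Path

VARIABLE_PATTERN = re.compile(r"\{\{\s*\$(\w+)\s*\}\}")


def find_undeclared_variables(plugin_dir: Path) -> dict[str, set[str]]:
    """Map each function folder to the prompt variables missing from its config.json."""
    problems: dict[str, set[str]] = {}
    for function_dir in sorted(p for p in plugin_dir.iterdir() if p.is_dir()):
        prompt_file = function_dir / "skprompt.txt"
        config_file = function_dir / "config.json"
        if not prompt_file.exists() or not config_file.exists():
            continue  # Not a prompt function folder, skip it
        config = json.loads(config_file.read_text(encoding="utf-8"))
        declared = {var["name"] for var in config.get("input_variables", [])}
        used = set(VARIABLE_PATTERN.findall(prompt_file.read_text(encoding="utf-8")))
        if used - declared:
            problems[function_dir.name] = used - declared
    return problems
```

Running it over ./plugins/plugin_name before starting the chatbot is a cheap sanity check; an empty dictionary means every variable is declared.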

Native plugin

A Native Plugin allows the model to invoke native code (Python, C# or Java). A plugin is represented as a class, where any method can be made invokable by the Agent using annotations (in Python, the kernel_function decorator plus Annotated type hints). Through these annotations, the developer provides the model with the function's name, description and arguments.

To define a Native Plugin, we only need to create the class and add the corresponding annotations:

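from datetime import datetime
from typing import Annotated

from semantic_kernel.functions.kernel_function_decorator import kernel_function


class MyFormatterPlugin:
    @kernel_function(name='format_current_date', description='Call to format current date to specific strftime format')  # Define the function as invokable
    def format_current_date(
        self,
        strftime_format: Annotated[str, 'Format, must follow strftime syntax']  # Describe the arguments
    ) -> Annotated[str, 'Current date on the specified format']:  # Describe the return value
        return datetime.today().strftime(strftime_format)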

To add a Native Plugin to the Kernel, we register a new instance of the class:

self.kernel.add_plugin(MyFormatterPlugin(), plugin_name="my_formatter_plugin")

Function calling

Function calling, or planning, in Semantic Kernel is a way for the model to invoke a function registered in the Kernel.

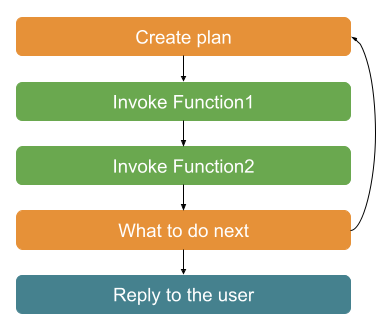

For each user message, the model creates a plan to decide how to reply. First, it uses the chat history and the information about the available functions to decide which function, if any, must be called. Once the function has been invoked, its result is appended to the chat history, and the model decides whether it has completed the task from the user message or requires more steps. If it has not finished, it starts again from the first step until it has completed the task or needs help from the user.

Thanks to this loop, the model can chain calls to different functions. For example, we might have a function that returns a user_session (including the user's id) and another one that requires a current_user_id as argument. The model will make a plan where it calls the first function to retrieve the user session, parses the response and uses the user id as the current_user_id argument for the second function.
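The loop can be simulated without any library to see how chaining emerges. In this minimal sketch, the hypothetical scripted_model stands in for the LLM (it only looks at the history and either requests a function or answers), while run_turn plays the role of the Kernel: it invokes the requested function and appends the result to the history. The two functions mirror the user_session / current_user_id example; all names and data are illustrative assumptions.

```python
import json
from typing import Callable


# Hypothetical registered functions, mirroring the example above
def get_user_session() -> dict:
    return {"user_id": "42", "name": "Ada"}


def get_user_loans(current_user_id: str) -> list[str]:
    loans = {"42": ["Dune", "Emma"]}
    return loans.get(current_user_id, [])


FUNCTIONS: dict[str, Callable] = {
    "get_user_session": get_user_session,
    "get_user_loans": get_user_loans,
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for the LLM: decides the next step from the chat history."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"call": "get_user_session", "args": {}}
    if len(tool_results) == 1:
        # Parse the previous result and chain it into the next call
        session = json.loads(tool_results[0]["content"])
        return {"call": "get_user_loans", "args": {"current_user_id": session["user_id"]}}
    loans = json.loads(tool_results[1]["content"])
    return {"answer": f"You have borrowed: {', '.join(loans)}"}


def run_turn(user_message: str, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        decision = scripted_model(history)
        if "answer" in decision:  # Task completed
            return decision["answer"]
        # Invoke the requested function and append its result to the history
        result = FUNCTIONS[decision["call"]](**decision["args"])
        history.append({"role": "tool", "name": decision["call"], "content": json.dumps(result)})
    return "I need more information to complete this task."


print(run_turn("Which books do I have on loan?"))  # → You have borrowed: Dune, Emma
```

The max_steps guard is a design choice of the sketch: a real planner also needs some limit so that a confused model cannot loop forever.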

In Semantic Kernel, we must tell the agent to use function calling. This is done by defining execution settings with the function choice behavior set to automatic:

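from semantic_kernel.agents import ChatCompletionAgent
from semantic_kernel.connectors.ai.function_choice_behavior import FunctionChoiceBehavior
from semantic_kernel.connectors.ai.open_ai import AzureChatPromptExecutionSettings

# Create the settings
settings = AzureChatPromptExecutionSettings()

# Set the behavior as automatic
settings.function_choice_behavior = FunctionChoiceBehavior.Auto()

# Pass the settings to the agent
self.agent = ChatCompletionAgent(
    service_id='chat_completion',
    kernel=self.kernel,
    name='Assistant',
    instructions="The prompt",
    execution_settings=settings
)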

Keep in mind that the function descriptions are sent to the model with every request, so the more detailed they are, the more tokens are used and the higher the cost. The key is to find a balance between detailed descriptions and token usage.
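To get a feeling for that cost, here is a rough, hypothetical approximation of the kind of function definition a connector sends with each request: a JSON schema built from the Annotated metadata of the function. It is not Semantic Kernel's actual serializer (the real payload depends on the connector), and character count is only a crude proxy for tokens.

```python
import inspect
import json
from typing import Annotated, get_args, get_origin, get_type_hints

JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_function(func, description: str) -> dict:
    """Build an OpenAI-style tool definition from Annotated type hints (sketch)."""
    hints = get_type_hints(func, include_extras=True)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        if name == "self":
            continue
        hint = hints.get(name, str)
        base, text = hint, ""
        if get_origin(hint) is Annotated:
            # Annotated[str, "description"] -> (str, "description")
            base, text = get_args(hint)[0], get_args(hint)[1]
        properties[name] = {"type": JSON_TYPES.get(base, "string"), "description": text}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": description,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def format_current_date(strftime_format: Annotated[str, "Format, must follow strftime syntax"]) -> str:
    ...


schema = describe_function(format_current_date, "Call to format current date to specific strftime format")
print(len(json.dumps(schema)), "characters sent with every request")
```

Every extra word in a description grows this payload for every message in the conversation, multiplied by the number of registered functions.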

Plugins for our Librarian

Now that it is clear what a plugin is and what it is for, let's see how we can get the most out of plugins for our Librarian agent.

For learning purposes, we will define one Native Plugin and one Prompt Plugin:

Book repository plugin: a Native Plugin to retrieve books from a repository.

Poem creator plugin: a Prompt Plugin to create a poem from the first sentence of a book.

Book repository plugin

We use the Open Library API to retrieve the books' information. The plugin returns the top 5 results for the search, including the title, author and first sentence of each book.

Specifically, we use the following endpoint to retrieve the information: https://openlibrary.org/search.json?q={user-query}&fields=key,title,author_name,first_sentence&limit=5.
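Since the user query goes into the URL, it has to be URL-encoded. A minimal sketch of how that endpoint could be built with the standard library; build_search_url is a hypothetical helper name.

```python
from urllib.parse import urlencode

OPEN_LIBRARY_SEARCH = "https://openlibrary.org/search.json"


def build_search_url(user_query: str, limit: int = 5) -> str:
    """Build the Open Library search URL used by the book repository plugin."""
    params = {
        "q": user_query,
        "fields": "key,title,author_name,first_sentence",
        "limit": limit,
    }
    # urlencode escapes spaces and special characters; safe="," keeps the field list readable
    return f"{OPEN_LIBRARY_SEARCH}?{urlencode(params, safe=',')}"


print(build_search_url("the lord of the rings"))
# → https://openlibrary.org/search.json?q=the+lord+of+the+rings&fields=key,title,author_name,first_sentence&limit=5
```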

First, we define the BookModel that represents a book in our system:

from typing import TypedDict


class BookModel(TypedDict):
    author: str
    title: str
    first_sentence: str

Now it is time for the function. We give a clear description to both the function and its argument. In this case, the function returns a complex object (a list of books) instead of a plain string, and the model is still able to use it in further responses.
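A minimal sketch of the function's core logic, with hypothetical names: turning the JSON returned by the search endpoint into a list of BookModel. It assumes that author_name and first_sentence come back as lists and that some books have no first sentence at all. In the plugin itself, this logic would live in a method decorated with @kernel_function and described with Annotated, like MyFormatterPlugin; the HTTP request is left out so the sketch runs offline, and the sample response is made up.

```python
import json
from typing import TypedDict


class BookModel(TypedDict):
    author: str
    title: str
    first_sentence: str


def _first(value, default: str = "") -> str:
    # Assumption: some fields arrive as lists, so keep their first element
    if isinstance(value, list):
        return str(value[0]) if value else default
    return str(value) if value is not None else default


def parse_books(response_body: str, limit: int = 5) -> list[BookModel]:
    """Convert a search.json response body into BookModel items (sketch)."""
    docs = json.loads(response_body).get("docs", [])
    return [
        BookModel(
            author=_first(doc.get("author_name"), "Unknown author"),
            title=doc.get("title", ""),
            first_sentence=_first(doc.get("first_sentence")),
        )
        for doc in docs[:limit]
    ]


# Made-up sample response, shaped like the fields requested in the URL
sample = '{"docs": [{"title": "Emma", "author_name": ["Jane Austen"], "first_sentence": ["A sample first sentence."]}]}'
print(parse_books(sample))
```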

Finally, we add this plugin to the Kernel by registering an instance of the class with self.kernel.add_plugin, exactly as we did with MyFormatterPlugin.

Poem creator plugin

We will define this plugin as a Prompt Plugin with some specific restrictions. Following the folder structure described earlier, it consists of two files: /plugins/poem-plugin/poem-creator/config.json, with the description, input variables and execution settings, and /plugins/poem-plugin/poem-creator/skprompt.txt, with the prompt itself and its restrictions.
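As an illustration, this sketch writes a hypothetical version of both files into that layout. The description, the first_sentence variable name, the execution settings and the rules in the prompt are all assumptions made for the example, not the original files.

```python
import json
from pathlib import Path

# Hypothetical configuration: description, variable and settings are illustrative
POEM_CONFIG = {
    "schema": 1,
    "description": "Creates a short poem inspired by the first sentence of a book",
    "execution_settings": {"default": {"max_tokens": 200, "temperature": 0.9}},
    "input_variables": [
        {
            "name": "first_sentence",
            "description": "First sentence of the book the poem is based on",
            "default": "",
        }
    ],
}

# Hypothetical prompt: the restrictions only apply to this task, not to the System Prompt
POEM_PROMPT = """Write a poem inspired by this first sentence of a book:
{{$first_sentence}}

Rules:
- No more than four stanzas.
- Do not copy the sentence verbatim.
- Answer with the poem only.
"""


def write_poem_plugin(root: Path) -> Path:
    """Create <root>/poem-plugin/poem-creator/{config.json, skprompt.txt}."""
    function_dir = root / "poem-plugin" / "poem-creator"
    function_dir.mkdir(parents=True, exist_ok=True)
    (function_dir / "config.json").write_text(json.dumps(POEM_CONFIG, indent=2), encoding="utf-8")
    (function_dir / "skprompt.txt").write_text(POEM_PROMPT, encoding="utf-8")
    return function_dir
```

Calling write_poem_plugin(Path("./plugins")) produces the two files; keeping the rules in skprompt.txt is what keeps the System Prompt short.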

It is straightforward to add the plugin to the Kernel: as with any Prompt Plugin, we call self.kernel.add_plugin with the parent directory and the name of the plugin folder.

Good practices

Some suggestions based on the existing literature and my own experience:

- Use Python syntax to describe your functions, even in .NET or Java. Models are usually more skilled in Python due to their training data.

- Keep functions focused, especially their descriptions: one function, one purpose. Don't try to create one function that does too many things; it will be counterproductive.

- Keep arguments simple and few. The simpler and fewer they are, the more reliably the model will call the functions.

- If you have many functions, review the descriptions carefully to make sure there are no potential conflicts that might confuse the model.

- Ask a model (via ChatGPT or similar) for feedback on your function descriptions. Models are usually quite good at finding improvements. By the way, this also applies to prompt development in general.

- Test, test and test. Especially in business software, reliability is key. Make sure the model is able to call the expected functions with the information you have provided via annotations.

Summary

In this chapter, we have enhanced our librarian agent with some specific skills using Plugins and Semantic Kernel Planning.

Remember that all the code is available in my GitHub repository: PyChatbot for Semantic Kernel.

In the next chapter, we will add capabilities to the chat to inspect, in real time, how our model calls and interacts with our plugins by creating an Inspector.
