CKA Full Course Day Static Pods, Manual Scheduling, Labels, and Selectors in Kubernetes

Task: Schedule a Pod Manually Without the Scheduler

In this task, we bypass the Kubernetes scheduler by assigning a pod directly to a specific node in the cluster. This is useful when a pod must run on a particular node without going through the usual scheduling process.

Prerequisites

We assume you have a Kubernetes cluster running, created with a KIND (Kubernetes in Docker) configuration similar to the one described in previous posts. Here, we’ve created a cluster named kind-cka-cluster:

kind create cluster --name kind-cka-cluster --config config.yml

Since we’ve already covered cluster creation with KIND in earlier posts, we won’t go into those details again.

Step 1: Verify the Cluster Nodes

To see the nodes available in this new cluster, run:

kubectl get nodes

You should see output similar to this:

NAME                             STATUS   ROLES           AGE   VERSION
kind-cka-cluster-control-plane   Ready    control-plane   7m    v1.31.0

For this task, we’ll be scheduling our pod on kind-cka-cluster-control-plane.

Step 2: Define the Pod Manifest (node.yml)

Now, let’s create a pod manifest in YAML format. Using the nodeName field in our pod configuration, we can specify the exact node for the pod, bypassing the Kubernetes scheduler entirely.

node.yml:

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
  nodeName: kind-cka-cluster-control-plane

In this manifest:

- We set nodeName to kind-cka-cluster-control-plane, so the scheduler ignores the pod and the kubelet on that node tries to run it.

This approach is a direct method for node selection, overriding other methods like nodeSelector or affinity rules.

According to Kubernetes documentation:

"nodeName is a more direct form of node selection than affinity or nodeSelector. nodeName is a field in the Pod spec. If the nodeName field is not empty, the scheduler ignores the Pod and the kubelet on the named node tries to place the Pod on that node. Using nodeName overrules using nodeSelector or affinity and anti-affinity rules."

For more details, refer to the Kubernetes documentation on node assignment.
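As a minimal sketch, the same manifest can be generated for any pod and node name from a small shell function. The function name `render_pinned_pod` and its argument order are hypothetical and only serve as an illustration; the fields it prints are the ones used in node.yml above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Pod manifest pinned to one node via spec.nodeName.
# Arguments: pod name, node name, optional image (defaults to nginx).
render_pinned_pod() {
  local pod_name="$1" node_name="$2" image="${3:-nginx}"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${pod_name}
spec:
  nodeName: ${node_name}
  containers:
  - name: ${pod_name}
    image: ${image}
EOF
}
```

One way to use it would be `render_pinned_pod nginx kind-cka-cluster-control-plane | kubectl apply -f -`, which pipes the generated manifest to kubectl instead of saving it to a file first.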

Step 3: Apply the Pod Manifest

With our manifest ready, apply it to the cluster:

kubectl apply -f node.yml

This command creates the nginx pod and assigns it directly to the kind-cka-cluster-control-plane node.

Step 4: Verify Pod Placement

Finally, check that the pod is running on the specified node:

kubectl get pods -o wide

The output should confirm that the nginx pod is indeed running on kind-cka-cluster-control-plane:

NAME    READY   STATUS    RESTARTS   AGE   IP           NODE                             NOMINATED NODE   READINESS GATES
nginx   1/1     Running   0          28s   10.244.0.5   kind-cka-cluster-control-plane   <none>           <none>

This verifies that by setting the nodeName field, we successfully bypassed the Kubernetes scheduler and directly scheduled our pod on the control plane node.
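For scripted checks, the node can also be read straight from the pod spec rather than from the wide table. The sketch below assumes a hypothetical helper name, `pod_runs_on`, and an optional `KUBECTL` variable that exists only so the binary can be swapped out; the jsonpath query reads the same spec.nodeName field set in the manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the pod's spec.nodeName matches the expected node.
# KUBECTL is an assumed override hook; it defaults to the real kubectl binary.
pod_runs_on() {
  local pod="$1" expected="$2" actual
  actual="$("${KUBECTL:-kubectl}" get pod "$pod" -o jsonpath='{.spec.nodeName}')" || return 1
  [ "$actual" = "$expected" ]
}
```

For example, `pod_runs_on nginx kind-cka-cluster-control-plane && echo "placed as requested"` prints the message only when the pod is bound to that node.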

Task: Log In to the Control Plane Node, Go to the Default Static Pod Manifest Directory, and Restart the Control Plane Components

To access the control plane node of our newly created cluster, use the following command:

docker exec -it kind-cka-cluster-control-plane bash

Navigate to the directory containing the static pod manifests:

cd /etc/kubernetes/manifests

Verify the current manifests:

ls

To restart the kube-controller-manager, move its manifest file temporarily:

mv kube-controller-manager.yaml /tmp

After confirming the restart, return the manifest file to its original location:

mv /tmp/kube-controller-manager.yaml /etc/kubernetes/manifests/

With these steps, we successfully demonstrated how to access the control plane and manipulate the static pod manifests to manage the lifecycle of control plane components.
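The move-and-restore sequence can be wrapped in one function, shown here as a rough sketch meant to run inside the node's shell. The function name, the `MANIFEST_DIR` and `WAIT_SECONDS` variables, and the 30-second default wait are all assumptions made for illustration; the kubelet rechecks the manifest directory periodically, so the wait may need adjusting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: park a static pod manifest outside the watched directory,
# wait so the kubelet can notice and stop the pod, then put the manifest back.
restart_static_pod() {
  local name="$1"
  local dir="${MANIFEST_DIR:-/etc/kubernetes/manifests}"  # default static pod path from the steps above
  local wait_seconds="${WAIT_SECONDS:-30}"                # assumed wait, not a documented value
  local manifest="$dir/$name.yaml"
  local parked

  [ -f "$manifest" ] || { echo "no manifest: $manifest" >&2; return 1; }
  parked="$(mktemp -d)/$name.yaml"
  mv "$manifest" "$parked"
  sleep "$wait_seconds"
  mv "$parked" "$manifest"
}
```

Calling `restart_static_pod kube-controller-manager` performs the same two mv steps as above, using a fresh temporary directory instead of /tmp directly.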

Confirming the Restart of kube-controller-manager

After temporarily moving the kube-controller-manager.yaml manifest file to /tmp and restoring it, we can verify that the kube-controller-manager has restarted. As mentioned in previous posts, I use k9s, which shows the restart clearly; readers without k9s can use the following command.

Inspect Events:

To gather more information, use:

kubectl describe pod kube-controller-manager-kind-cka-cluster-control-plane -n kube-system

Look for events at the end of the output. A successful restart will show events similar to:

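Events:
  Type    Reason   Age                    From     Message
  ----    ------   ----                   ----     -------
  Normal  Killing  4m12s (x2 over 8m32s)  kubelet  Stopping container kube-controller-manager
  Normal  Pulled   3m6s (x2 over 7m36s)   kubelet  Container image "registry.k8s.io/kube-controller-manager:v1.31.0" already present on machine
  Normal  Created  3m6s (x2 over 7m36s)   kubelet  Created container kube-controller-manager
  Normal  Started  3m6s (x2 over 7m36s)   kubelet  Started container kube-controller-manager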

The presence of "Killing," "Created," and "Started" events indicates that the kube-controller-manager was stopped and then restarted successfully.
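The same check can be automated by scanning the describe output for those three event reasons. This is only a sketch: the function name `has_restart_events` is hypothetical, and it assumes the events appear as "Normal  <Reason>  ..." rows in the format kubectl printed for this cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl describe pod` output on stdin and succeed
# only if Killing, Created, and Started events are all present.
has_restart_events() {
  local text reason
  text="$(cat)"
  for reason in Killing Created Started; do
    printf '%s\n' "$text" \
      | grep -Eq "^[[:space:]]*Normal[[:space:]]+${reason}[[:space:]]" || return 1
  done
}
```

A possible invocation is `kubectl describe pod kube-controller-manager-kind-cka-cluster-control-plane -n kube-system | has_restart_events && echo "restart confirmed"`.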

Cleanup

Once you have completed your tasks and confirmed the behavior of your pods, it is important to clean up any resources that are no longer needed. This helps maintain a tidy environment and frees up resources in your cluster.

List Pods:

First, you can check the current pods running in your cluster:

kubectl get pods

The nginx pod created earlier should appear in the list.

Describe Pod:

To get more information about a specific pod, use the describe command:

kubectl describe pod nginx

This prints details about the pod, such as its name, namespace, node, and other configuration.

Delete the Pod:

If you find that the pod is no longer needed, you can safely delete it with the following command:

kubectl delete pod nginx

Verify Deletion:

After executing the delete command, you can verify that the pod has been removed by listing the pods again:

kubectl get pods

Ensure that the nginx pod no longer appears in the list.

By performing these cleanup steps, you help ensure that your Kubernetes cluster remains organized and efficient.

Creating Multiple Pods with Specific Labels

In this section, we will create three pods based on the nginx image, each with a unique name and specific labels indicating different environments: env:test, env:dev, and env:prod.

Step 1: Create the Script

First, we'll create a script file, in any text editor, that contains the commands to generate the pods.

The script runs kubectl once per pod, giving each pod a unique name, the nginx image, and one of the three env labels, and then lists the created pods.
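A minimal sketch of what such a script could contain follows. It is not the author's original script: the pod names (nginx-test, nginx-dev, nginx-prod), the function name, and the `KUBECTL` override variable are hypothetical. It relies on `kubectl run` with the `--image` and `--labels` flags to create one labeled pod per environment.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: create three nginx pods labeled env=test, env=dev, and env=prod,
# then list them with their labels. Pod names are assumptions for illustration.
create_labeled_pods() {
  local kubectl_bin="${KUBECTL:-kubectl}"  # assumed override hook; defaults to kubectl
  local env
  for env in test dev prod; do
    "$kubectl_bin" run "nginx-$env" --image=nginx --labels="env=$env"
  done
  "$kubectl_bin" get pods --show-labels
}
```

To use it as a standalone script, add a final line that calls `create_labeled_pods`. Looping over the environment names keeps the three commands identical except for the label value, which makes it easy to add a fourth environment later.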

Step 2: Make the Script Executable

After saving the file, make the script executable with the following command:

chmod +x <script-file>

Step 3: Execute the Script

Run the script to create the pods:

./<script-file>

You should see output indicating the creation of the pods: kubectl prints a pod/<name> created line for each of the three pods.

Step 4: Verify the Created Pods

The script then displays the status of the three created pods.

At this point, you can filter the pods based on their labels. For example, to find the pod with the env=dev label, use the following command:

kubectl get pods -l env=dev

The output should list only the pod carrying that label, with a Running status.
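Label selectors also lend themselves to quick summaries. The sketch below counts the pods matching each env value; the function name `count_pods_by_env` and the `KUBECTL` override variable are hypothetical, while `-l` and `--no-headers` are standard `kubectl get` flags.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each env value given, print how many pods carry that label.
count_pods_by_env() {
  local env count
  for env in "$@"; do
    # --no-headers leaves one output line per matching pod, so wc -l is the pod count.
    count="$("${KUBECTL:-kubectl}" get pods -l "env=$env" --no-headers | wc -l)"
    echo "env=$env: $((count))"
  done
}
```

Running `count_pods_by_env test dev prod` prints one line per environment. Selectors can also combine values, for example `kubectl get pods -l 'env in (dev,prod)'`, or exclude one with `kubectl get pods -l 'env!=test'`.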

Tags and Mentions

- @piyushsachdeva

- Day 13: Video Tutorial

The above is the detailed content of CKA Full Course Day Static Pods, Manual Scheduling, Labels, and Selectors in Kubernetes. For more information, please follow other related articles on the PHP Chinese website!

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

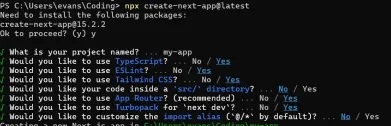

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.

Demystifying JavaScript: What It Does and Why It MattersApr 09, 2025 am 12:07 AM

Demystifying JavaScript: What It Does and Why It MattersApr 09, 2025 am 12:07 AMJavaScript is the cornerstone of modern web development, and its main functions include event-driven programming, dynamic content generation and asynchronous programming. 1) Event-driven programming allows web pages to change dynamically according to user operations. 2) Dynamic content generation allows page content to be adjusted according to conditions. 3) Asynchronous programming ensures that the user interface is not blocked. JavaScript is widely used in web interaction, single-page application and server-side development, greatly improving the flexibility of user experience and cross-platform development.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 Linux new version

SublimeText3 Linux latest version

Atom editor mac version download

The most popular open source editor

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Mac version

God-level code editing software (SublimeText3)