Outline

- What is the goal of model evaluation?

- What is the purpose of model evaluation, and what are some common evaluation procedures?

- What is the usage of classification accuracy, and what are its limitations?

- How does a confusion matrix describe the performance of a classifier?

- What metrics can be computed from a confusion matrix?

The goal of model evaluation is to answer the question;

how do I choose between different models?

The process of evaluating a machine learning helps determines how well the model is reliable and effective for its application. This involves assessing different factors such as its performance, metrics and accuracy for predictions or decision making.

No matter what model you choose to use, you need a way to choose between models: different model types, tuning parameters, and features. Also you need a model evaluation procedure to estimate how well a model will generalize to unseen data. Lastly you need an evaluation procedure to pair with your procedure in other to quantify your model performance.

Before we proceed, let's review some of the different model evaluation procedures and how they operate.

Model Evaluation Procedures and How They Operate.

-

Training and testing on the same data

- Rewards overly complex models that "overfit" the training data and won't necessarily generalize

-

Train/test split

- Split the dataset into two pieces, so that the model can be trained and tested on different data

- Better estimate of out-of-sample performance, but still a "high variance" estimate

- Useful due to its speed, simplicity, and flexibility

-

K-fold cross-validation

- Systematically create "K" train/test splits and average the results together

- Even better estimate of out-of-sample performance

- Runs "K" times slower than train/test split.

From above, we can deduce that:

Training and testing on the same data is a classic cause of overfitting in which you build an overly complex model that won't generalize to new data and that is not actually useful.

Train_Test_Split provides a much better estimate of out-of-sample performance.

K-fold cross-validation does better by systematically K train test splits and averaging the results together.

In summary, train_tests_split is still profitable to cross validation due to its speed and simplicity, and that's what we will use in this tutorial guide.

Model Evaluation Metrics:

You will always need an evaluation metric to go along with your chosen procedure, and your choice of metric depends on the problem you are addressing. For classification problems, you can use classification accuracy. But we will focus on other important classification evaluation metrics in this guide.

Before we learn any new evaluation metrics' Lets review the classification accuracy, and talk about its strength and weaknesses.

Classification accuracy

We've chosen the Pima Indians Diabetes dataset for this tutorial, which includes the health data and diabetes status of 768 patients.

Let's read the data and print the first 5 rows of the data. The label column indicates 1 if the patients has diabetes and 0 if the patients doesn't have diabetes, and we intend to answer the question:

Question: Can we predict the diabetes status of a patient given their health measurements?

We define our features metrics X and response vector Y. We use train_test_split to split X and Y into training and testing set.

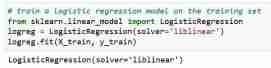

Next, we train a logistic regression model on training set. During then fit step, the logreg model object is learning the relationship between the X_train and Y_train. Finally we make a class predictions for the testing sets.

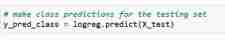

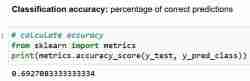

Now , we've made prediction for the testing set, we can calculate the classification accuracy,, which is the simply the percentage of correct predictions.

However, anytime you use classification accuracy as your evaluation metrics, it is important to compare it with Null accuracy, which is the accuracy that could be achieved by always predicting the most frequent class.

Null accuracy answers the question; if my model was to predict the predominant class 100 percent of the time, how often will it be correct? In the scenario above, 32% of the y_test are 1 (ones). In other words, a dumb model that predicts that the patients has diabetes, would be right 68% of the time(which is the zeros).This provides a baseline against which we might want to measure our logistic regression model.

When we compare the Null accuracy of 68% and the model accuracy of 69%, our model doesn't look very good. This demonstrates one weakness of classification accuracy as a model evaluation metric. The classification accuracy doesn't tell us anything about the underlying distribution of the testing test.

In Summary:

- Classification accuracy is the easiest classification metric to understand

- But, it does not tell you the underlying distribution of response values

- And, it does not tell you what "types" of errors your classifier is making.

Let's now look at the confusion matrix.

Confusion matrix

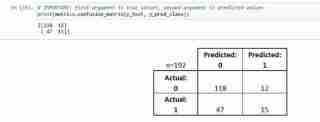

The Confusion matrix is a table that describes the performance of a classification model.

It is useful to help you understand the performance of your classifier, but it is not a model evaluation metric; so you can't tell scikit learn to choose the model with the best confusion matrix. However, there are many metrics that can be calculated from the confusion matrix and those can be directly used to choose between models.

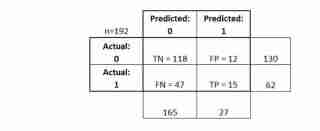

- Every observation in the testing set is represented in exactly one box

- It's a 2x2 matrix because there are 2 response classes

- The format shown here is not universal

Let's explain some of its basic terminologies.

- True Positives (TP): we correctly predicted that they do have diabetes

- True Negatives (TN): we correctly predicted that they don't have diabetes

- False Positives (FP): we incorrectly predicted that they do have diabetes (a "Type I error")

- False Negatives (FN): we incorrectly predicted that they don't have diabetes (a "Type II error")

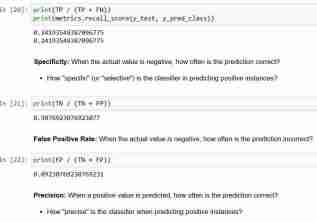

Let’s see how we can calculate the metrics

In Conclusion:

- Confusion matrix gives you a more complete picture of how your classifier is performing

- Also allows you to compute various classification metrics, and these metrics can guide your model selection

The above is the detailed content of Evaluating A Machine Learning Classification Model. For more information, please follow other related articles on the PHP Chinese website!

Python and Time: Making the Most of Your Study TimeApr 14, 2025 am 12:02 AM

Python and Time: Making the Most of Your Study TimeApr 14, 2025 am 12:02 AMTo maximize the efficiency of learning Python in a limited time, you can use Python's datetime, time, and schedule modules. 1. The datetime module is used to record and plan learning time. 2. The time module helps to set study and rest time. 3. The schedule module automatically arranges weekly learning tasks.

Python: Games, GUIs, and MoreApr 13, 2025 am 12:14 AM

Python: Games, GUIs, and MoreApr 13, 2025 am 12:14 AMPython excels in gaming and GUI development. 1) Game development uses Pygame, providing drawing, audio and other functions, which are suitable for creating 2D games. 2) GUI development can choose Tkinter or PyQt. Tkinter is simple and easy to use, PyQt has rich functions and is suitable for professional development.

Python vs. C : Applications and Use Cases ComparedApr 12, 2025 am 12:01 AM

Python vs. C : Applications and Use Cases ComparedApr 12, 2025 am 12:01 AMPython is suitable for data science, web development and automation tasks, while C is suitable for system programming, game development and embedded systems. Python is known for its simplicity and powerful ecosystem, while C is known for its high performance and underlying control capabilities.

The 2-Hour Python Plan: A Realistic ApproachApr 11, 2025 am 12:04 AM

The 2-Hour Python Plan: A Realistic ApproachApr 11, 2025 am 12:04 AMYou can learn basic programming concepts and skills of Python within 2 hours. 1. Learn variables and data types, 2. Master control flow (conditional statements and loops), 3. Understand the definition and use of functions, 4. Quickly get started with Python programming through simple examples and code snippets.

Python: Exploring Its Primary ApplicationsApr 10, 2025 am 09:41 AM

Python: Exploring Its Primary ApplicationsApr 10, 2025 am 09:41 AMPython is widely used in the fields of web development, data science, machine learning, automation and scripting. 1) In web development, Django and Flask frameworks simplify the development process. 2) In the fields of data science and machine learning, NumPy, Pandas, Scikit-learn and TensorFlow libraries provide strong support. 3) In terms of automation and scripting, Python is suitable for tasks such as automated testing and system management.

How Much Python Can You Learn in 2 Hours?Apr 09, 2025 pm 04:33 PM

How Much Python Can You Learn in 2 Hours?Apr 09, 2025 pm 04:33 PMYou can learn the basics of Python within two hours. 1. Learn variables and data types, 2. Master control structures such as if statements and loops, 3. Understand the definition and use of functions. These will help you start writing simple Python programs.

How to teach computer novice programming basics in project and problem-driven methods within 10 hours?Apr 02, 2025 am 07:18 AM

How to teach computer novice programming basics in project and problem-driven methods within 10 hours?Apr 02, 2025 am 07:18 AMHow to teach computer novice programming basics within 10 hours? If you only have 10 hours to teach computer novice some programming knowledge, what would you choose to teach...

How to avoid being detected by the browser when using Fiddler Everywhere for man-in-the-middle reading?Apr 02, 2025 am 07:15 AM

How to avoid being detected by the browser when using Fiddler Everywhere for man-in-the-middle reading?Apr 02, 2025 am 07:15 AMHow to avoid being detected when using FiddlerEverywhere for man-in-the-middle readings When you use FiddlerEverywhere...

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Atom editor mac version download

The most popular open source editor

SublimeText3 Linux new version

SublimeText3 Linux latest version

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),