Introduction

Web scraping is the process of extracting data from websites and transforming it into a structured format for further analysis. It has become an essential tool for businesses in various industries, such as e-commerce, market research, and data analytics. With the increasing demand for data-driven insights, advanced web scraping techniques have emerged to improve the efficiency and accuracy of the process. In this article, we will discuss the advantages, disadvantages, and features of advanced web scraping techniques.
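To make "extracting data and transforming it into a structured format" concrete, here is a minimal sketch. It assumes the target data sits in a plain HTML table; the `SAMPLE_HTML` snippet and the helper names are hypothetical. It uses only Python's standard library (`html.parser`, `csv`), so it runs without third-party packages: the parser collects cell text row by row, the first row becomes the field names, and the records are then serialized as CSV.

```python
import csv
import io
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []    # finished rows, each a list of cell strings
        self._row = None  # cells of the row currently being parsed
        self._cell = None  # text fragments of the cell currently being parsed

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Text outside a cell (e.g. indentation between tags) is ignored.
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_records(html):
    """Use the first row as field names and turn the remaining rows into dicts."""
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


def records_to_csv(records):
    """Serialize the records as CSV text, ready to save or load elsewhere."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical page fragment used for the demo.
SAMPLE_HTML = """
<table>
  <tr><th>product</th><th>price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

records = html_table_to_records(SAMPLE_HTML)
print(records)
print(records_to_csv(records))
```

Real pages are rarely this tidy, so a production scraper would usually target specific elements by class or id rather than every table cell.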

Advantages of Advanced Web Scraping Techniques

Advanced web scraping techniques offer several advantages over traditional scraping methods. One of the main advantages is the ability to extract data from complex websites and from dynamic content, such as pages whose content is loaded by JavaScript. By using a site's APIs where they exist, together with automated navigation logic, web scrapers can move through different website structures and retrieve data from many pages efficiently. This yields more data, and more complete data, which helps businesses make better-informed decisions. Additionally, when combined with rate limiting, retries, and proxy rotation, advanced web scraping techniques can handle large datasets while reducing, though not eliminating, the risk of being blocked by anti-scraping measures.
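Retrieving data from multiple pages often comes down to following a listing's pagination. The sketch below assumes the site marks its next page with an `<a rel="next">` link, which is a common convention but not universal. The `fetch` callable is injected so the crawler can be tried offline; `crawl_pages`, `FAKE_SITE`, and the example URLs are hypothetical. A page cap, a repeated-URL check, and a delay between requests keep the crawl bounded and gentle on the server.

```python
import time
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkParser(HTMLParser):
    """Record the href of the first <a rel="next"> link on a page."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        if tag != "a" or self.next_href is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens, e.g. rel="next nofollow".
        if "next" in (attributes.get("rel") or "").split():
            self.next_href = attributes.get("href")


def crawl_pages(start_url, fetch, max_pages=50, delay_seconds=1.0):
    """Follow rel="next" links from start_url, yielding (url, html) pairs.

    fetch is any callable that takes a URL and returns the page's HTML as a
    string. The crawl stops at max_pages, when no next link is found, or when
    a URL repeats (which guards against pagination loops).
    """
    url = start_url
    seen = set()
    while url and url not in seen and len(seen) < max_pages:
        if seen and delay_seconds:
            time.sleep(delay_seconds)  # pause between requests to limit load on the site
        seen.add(url)
        html = fetch(url)
        yield url, html
        parser = NextLinkParser()
        parser.feed(html)
        parser.close()
        # Resolve relative links such as "?page=2" against the current page URL.
        url = urljoin(url, parser.next_href) if parser.next_href else None


# Offline demo: a dictionary stands in for a hypothetical three-page listing.
FAKE_SITE = {
    "https://example.com/list?page=1": '<a rel="next" href="?page=2">Next</a>',
    "https://example.com/list?page=2": '<a rel="next" href="?page=3">Next</a>',
    "https://example.com/list?page=3": "<p>Last page</p>",
}

for page_url, _ in crawl_pages("https://example.com/list?page=1", FAKE_SITE.__getitem__, delay_seconds=0):
    print(page_url)
```

Against a live site, `fetch` could be something like `lambda u: requests.get(u, timeout=10).text`. The generator design lets callers process each page as it arrives instead of holding a large crawl in memory.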

Disadvantages of Advanced Web Scraping Techniques

Despite their benefits, advanced web scraping techniques also have drawbacks. One of the major concerns is legality. Scraping publicly accessible data is often lawful, but the answer depends on the jurisdiction, the website's terms of service, and the kind of data collected (personal data, for example, falls under privacy laws such as the GDPR). Automated extraction can also raise ethical issues, such as placing a heavy load on a site's servers. Moreover, advanced web scraping requires technical expertise and infrastructure, which can make it a costly process.
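Checking a site's robots.txt before crawling is one widely used, low-cost courtesy. It does not settle the legal questions above, but it tells a scraper which paths the site owner asks automated clients to avoid and how long to wait between requests. The sketch below uses Python's standard `urllib.robotparser` module; the robots.txt content and the `ExampleScraper/1.0` user-agent name are hypothetical.

```python
from urllib import robotparser

# Hypothetical robots.txt content. Against a live site you would instead call
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

AGENT = "ExampleScraper/1.0"  # hypothetical user-agent name for this scraper

parser = robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/products", "https://example.com/private/report"):
    verdict = "allowed" if parser.can_fetch(AGENT, url) else "disallowed"
    print(url, verdict)

# crawl_delay() returns the requested pause in seconds, or None if unspecified.
print("Requested crawl delay:", parser.crawl_delay(AGENT))
```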

Features of Advanced Web Scraping Techniques

Advanced web scraping techniques offer a range of features that enhance the scraping process. These include proxies and user-agent strings that make requests look more like ordinary browser traffic, data cleansing and normalization to ensure accuracy, and scheduling and monitoring tools to automate the scraping process. Some advanced web scraping tools also offer AI-powered data extraction and natural language processing capabilities for more efficient and accurate data retrieval.
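Data cleansing and normalization usually means turning inconsistent scraped strings into consistent, typed values and removing duplicates. The following is a minimal sketch under stated assumptions: the `name` and `price` field names are hypothetical, prices are assumed to use "." as the decimal separator and "," for thousands, and two records are treated as duplicates when their cleaned names match case-insensitively.

```python
import re
import unicodedata
from decimal import Decimal


def clean_text(value):
    """Normalize Unicode (e.g. non-breaking spaces), collapse whitespace, and trim."""
    value = unicodedata.normalize("NFKC", value)
    return re.sub(r"\s+", " ", value).strip()


def parse_price(value):
    """Extract a price such as '$1,299.00' or '24.50 USD' as a Decimal.

    Assumes '.' is the decimal separator and ',' groups thousands; returns
    None when the string contains no number.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", value)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


def normalize_record(raw):
    """Return a cleaned copy of one scraped record (hypothetical field names)."""
    return {
        "name": clean_text(raw.get("name", "")),
        "price": parse_price(raw.get("price", "")),
    }


def deduplicate(records, key="name"):
    """Keep the first record seen for each key, compared case-insensitively."""
    seen = set()
    unique = []
    for record in records:
        marker = record[key].casefold()
        if marker not in seen:
            seen.add(marker)
            unique.append(record)
    return unique


# Hypothetical raw rows as they might come out of a scraper.
raw_rows = [
    {"name": "  Widget\u00a0Pro \n", "price": "$1,299.00"},
    {"name": "widget pro", "price": "1299"},
    {"name": "Gadget", "price": "24.50 USD"},
    {"name": "Gizmo", "price": "call for price"},
]
cleaned = deduplicate([normalize_record(row) for row in raw_rows])
print(cleaned)
```

`Decimal` is used instead of `float` so that prices keep their exact decimal value; unparseable prices become `None` so they can be flagged rather than silently dropped.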

Example of Using Proxies in Web Scraping

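```python
import requests
from bs4 import BeautifulSoup

# Placeholder proxy addresses: replace them with proxies you are allowed to use.
# Most HTTP proxies tunnel HTTPS traffic via CONNECT, so the proxy URL for the
# 'https' key normally uses the http:// scheme as well.
proxies = {
    'http': 'http://10.10.1.10:3128',
    'https': 'http://10.10.1.11:1080',
}

url = 'https://example.com'

# Route the request through the proxy; the timeout stops it from hanging forever.
response = requests.get(url, proxies=proxies, timeout=10)
response.raise_for_status()  # raise an HTTPError for 4xx/5xx responses

# Parse the returned HTML and print it with indentation.
soup = BeautifulSoup(response.text, 'html.parser')
print(soup.prettify())
```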

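This Python script routes a request through a proxy with the requests library and parses the response with BeautifulSoup. Because the target site sees the proxy's IP address instead of yours, spreading requests across proxies can reduce the chance of IP-based rate limiting or blocking, although it does not guarantee that the scraper goes undetected.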
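Scrapers that use several proxies typically rotate through them and back off when the site signals overload. The sketch below is one way to do that, not the behavior of any particular tool: it cycles through placeholder proxy and user-agent pools (all values hypothetical), retries on status codes that commonly indicate rate limiting or temporary failure, and waits with exponential backoff plus random jitter between attempts. The HTTP call is injected as `send` so the logic can be tested without a network.

```python
import itertools
import random
import time

# Placeholder values: substitute proxies you are authorized to use and
# user-agent strings that identify your scraper.
PROXY_POOL = ["http://10.10.1.10:3128", "http://10.10.1.11:3128"]
USER_AGENTS = [
    "ExampleScraper/1.0 (+https://example.com/bot)",
    "ExampleScraper/1.1 (+https://example.com/bot)",
]

# Status codes that usually signal rate limiting or a temporary server problem.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def request_settings():
    """Yield an endless round-robin of (proxies, headers) pairs."""
    for proxy, agent in zip(itertools.cycle(PROXY_POOL), itertools.cycle(USER_AGENTS)):
        yield {"http": proxy, "https": proxy}, {"User-Agent": agent}


def backoff_delay(attempt, base=1.0, cap=30.0):
    """Exponential backoff with full jitter: a random wait in [0, min(cap, base * 2**attempt)]."""
    return random.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_rotation(url, send, max_attempts=4, base_delay=1.0):
    """Request url, switching proxy and user agent and backing off between retries.

    send(url, proxies=..., headers=...) must return an object with a
    status_code attribute. Returns the last response received.
    """
    settings = request_settings()
    response = None
    for attempt in range(max_attempts):
        proxies, headers = next(settings)
        response = send(url, proxies=proxies, headers=headers)
        if response.status_code not in RETRYABLE_STATUS:
            break
        if attempt < max_attempts - 1:
            time.sleep(backoff_delay(attempt, base_delay))
    return response
```

With requests installed, `send` could be `functools.partial(requests.get, timeout=10)`. Connection errors raised by `send` are not caught in this sketch; a fuller version would also retry on those.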

Conclusion

The emergence of advanced web scraping techniques has transformed the way businesses collect and analyze data from websites. Because these techniques can handle complex websites and large datasets, they help businesses gain valuable insights and stay competitive in their industries. However, it is essential to consider the ethical and legal aspects of web scraping and to invest in the right tools and resources for a successful scraping process. Overall, advanced web scraping techniques have opened up new opportunities for businesses to extract and leverage data for growth and success.

The above is the detailed content of Advanced Web Scraping Techniques. For more information, please follow other related articles on the PHP Chinese website!

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AM

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AMPython and JavaScript have their own advantages and disadvantages in terms of community, libraries and resources. 1) The Python community is friendly and suitable for beginners, but the front-end development resources are not as rich as JavaScript. 2) Python is powerful in data science and machine learning libraries, while JavaScript is better in front-end development libraries and frameworks. 3) Both have rich learning resources, but Python is suitable for starting with official documents, while JavaScript is better with MDNWebDocs. The choice should be based on project needs and personal interests.

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

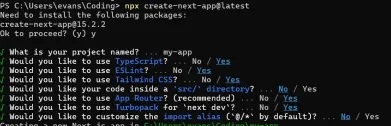

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.
