Understanding Positional Embeddings in Transformers: From Absolute to Rotary

A deep dive into absolute, relative, and rotary positional embeddings with code examples

Mina Ghashami


Towards Data Science


Positional embeddings are one of the key components of transformers. You may ask: why? Because the self-attention mechanism in transformers is permutation-invariant: it computes how much `attention` each token in the input receives from the other tokens in the sequence, but it does not take the order of the tokens into account. (Strictly speaking, self-attention is permutation-equivariant: reordering the input tokens only reorders the outputs in the same way.) In effect, the attention mechanism treats the sequence as a bag of tokens. For this reason, we need another component, called a positional embedding, which accounts for the order of tokens and influences the token embeddings. But what are the different types of positional embeddings, and how are they implemented?
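To make the "bag of tokens" point concrete, here is a minimal NumPy sketch of single-head self-attention with no positional information. The function `self_attention`, the random weights, and the `pos` array are all illustrative assumptions, not code from any particular library; `pos` is just a random stand-in for a positional embedding. The sketch checks that shuffling the input tokens only shuffles the output rows in the same way, and that this no longer holds once a position-dependent vector is added to each token.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (no positional information)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v

rng = np.random.default_rng(0)
seq_len, d_model = 5, 8
x = rng.normal(size=(seq_len, d_model))  # toy token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

perm = np.array([2, 0, 4, 1, 3])  # a fixed, non-trivial reordering of the tokens

# Without positions: attention on shuffled tokens equals shuffled attention output.
out = self_attention(x, w_q, w_k, w_v)
out_shuffled = self_attention(x[perm], w_q, w_k, w_v)
order_is_ignored = np.allclose(out[perm], out_shuffled)

# With a position-dependent vector added (hypothetical stand-in for a positional
# embedding), each token's output now depends on where it sits in the sequence.
pos = rng.normal(size=(seq_len, d_model))
out_pos = self_attention(x + pos, w_q, w_k, w_v)
out_pos_shuffled = self_attention(x[perm] + pos, w_q, w_k, w_v)
order_matters_with_pos = not np.allclose(out_pos[perm], out_pos_shuffled)

print(order_is_ignored, order_matters_with_pos)
```

The first check is the permutation property described above; the second shows, under these toy assumptions, why some position-dependent signal has to be injected for the model to see token order.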

In this post, we take a look at three major types of positional embeddings and dive deep into their implementation.

Here is the table of contents for this post:

1. Context and Background

2. Absolute Positional Embedding

The above is the detailed content of Understanding Positional Embeddings in Transformers: From Absolute to Rotary. For more information, please follow other related articles on the PHP Chinese website!

FloppyPepe (FPPE) Price Could Explode As Bitcoin (BTC) Price Rallies Towards $450,000May 09, 2025 am 11:54 AM

FloppyPepe (FPPE) Price Could Explode As Bitcoin (BTC) Price Rallies Towards $450,000May 09, 2025 am 11:54 AMAccording to a leading finance CEO, the Bitcoin price could be set for a move to $450,000. This Bitcoin price projection comes after a resurgence of good performances, signaling that the bear market may end.

Pi Network Confirms May 14 Launch—Qubetics and OKB Surge as Best Cryptos to Join for Long Term in 2025May 09, 2025 am 11:52 AM

Pi Network Confirms May 14 Launch—Qubetics and OKB Surge as Best Cryptos to Join for Long Term in 2025May 09, 2025 am 11:52 AMExplore why Qubetics, Pi Network, and OKB rank among the Best Cryptos to Join for Long Term. Get updated presale stats, features, and key real-world use cases.

Sun Life Financial Inc. (TSX: SLF) (NYSE: SLF) Declares a Dividend of $0.88 Per ShareMay 09, 2025 am 11:50 AM

Sun Life Financial Inc. (TSX: SLF) (NYSE: SLF) Declares a Dividend of $0.88 Per ShareMay 09, 2025 am 11:50 AMTORONTO, May 8, 2025 /CNW/ - The Board of Directors (the "Board") of Sun Life Financial Inc. (the "Company") (TSX: SLF) (NYSE: SLF) today announced that a dividend of $0.88 per share on the common shares of the Company has been de

Sun Life Announces Intended Renewal of Normal Course Issuer BidMay 09, 2025 am 11:48 AM

Sun Life Announces Intended Renewal of Normal Course Issuer BidMay 09, 2025 am 11:48 AMMay 7, 2025, the Company had purchased on the TSX, other Canadian stock exchanges and/or alternative Canadian trading platforms

The Bitcoin price has hit $100k for the first time since February, trading at $101.3k at press time.May 09, 2025 am 11:46 AM

The Bitcoin price has hit $100k for the first time since February, trading at $101.3k at press time.May 09, 2025 am 11:46 AMBTC's strong correlation with the Global M2 money supply is playing out once again, with the largest cryptocurrency now poised for new all-time highs.

Coinbase (COIN) Q1 CY2025 Highlights: Revenue Falls Short of Expectations, but Sales Rose 24.2% YoY to $2.03BMay 09, 2025 am 11:44 AM

Coinbase (COIN) Q1 CY2025 Highlights: Revenue Falls Short of Expectations, but Sales Rose 24.2% YoY to $2.03BMay 09, 2025 am 11:44 AMBlockchain infrastructure company Coinbase (NASDAQ: COIN) fell short of the market’s revenue expectations in Q1 CY2025, but sales rose 24.2% year

Ripple Labs and the SEC Have Officially Reached a Settlement AgreementMay 09, 2025 am 11:42 AM

Ripple Labs and the SEC Have Officially Reached a Settlement AgreementMay 09, 2025 am 11:42 AMRipple Labs and the U.S. Securities and Exchange Commission (SEC) have officially reached a deal that, if approved by a judge, will bring their years-long legal battle to a close.

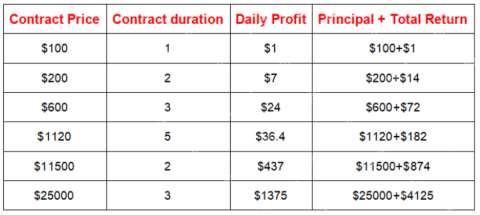

JA Mining Helps Global Users Share the Benefits of the Bitcoin Bull MarketMay 09, 2025 am 11:40 AM

JA Mining Helps Global Users Share the Benefits of the Bitcoin Bull MarketMay 09, 2025 am 11:40 AMBy lowering the threshold for mining and providing compliance protection, JA Mining helps global users share the benefits of the Bitcoin bull market.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

Atom editor mac version download

The most popular open source editor

SublimeText3 Mac version

God-level code editing software (SublimeText3)