So cool! Old iPhone, iPad, and MacBook devices form a heterogeneous cluster and can run Llama 3

If you have idle equipment, maybe you can give it a try.

This time, the hardware you already own can flex its muscles in the field of AI.

By combining an iPhone, an iPad, and a MacBook, you can assemble a "heterogeneous cluster inference solution" and run the Llama 3 model smoothly.

It is worth mentioning that the devices in this heterogeneous cluster can run Windows, Linux, or iOS, and support for Android is coming soon.

According to the project author @evilsocket, this heterogeneous cluster includes an iPhone 15 Pro Max, an iPad Pro, a MacBook Pro (M1 Max), an NVIDIA GeForce 3080, and two NVIDIA Titan X Pascal cards. All the code has been uploaded to GitHub. Netizens who saw it remarked that the author is clearly no ordinary developer.

However, some netizens worry about energy consumption: whatever the speed, the electricity bill would be hard to afford, and moving data back and forth between devices is too wasteful.

Project address: https://github.com/evilsocket/cake

The main idea of Cake is to shard transformer blocks across multiple devices so that inference can run on models that typically do not fit into the GPU memory of a single device. Inference over consecutive transformer blocks on the same worker is batched to minimize delays caused by data transfer.
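As a rough illustration of the batching idea (a minimal Python sketch, not Cake's actual Rust implementation), the snippet below groups consecutive transformer blocks that live on the same worker, so each group can be handled in one request instead of one request per block. The `assignment` list and the worker names are hypothetical.

```python
from itertools import groupby


def plan_hops(assignment):
    """Group consecutive layers owned by the same worker into one batch.

    assignment[i] is the (hypothetical) name of the worker serving layer i.
    Returns a list of (worker, [layer indices]) in execution order.
    """
    hops = []
    # groupby only merges *adjacent* equal keys, which is exactly what we want:
    # a worker that reappears later in the model starts a new hop.
    for worker, group in groupby(enumerate(assignment), key=lambda pair: pair[1]):
        hops.append((worker, [index for index, _ in group]))
    return hops


# Hypothetical 8-layer model split across four devices.
assignment = ["gpu", "gpu", "gpu", "iphone", "ipad", "ipad", "macbook", "macbook"]
hops = plan_hops(assignment)
for worker, layers in hops:
    print(f"{worker}: layers {layers[0]}-{layers[-1]} in one request")
```

In this toy layout, eight layers are covered by four requests rather than eight, which is where the saving in transfer delay would come from.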

Compile the project with:

cargo build --release

For iOS, run:

make ios

Usage

To run a worker node:

# --model: model path (see below on how to optimize model size for workers)
# --mode: run as worker
# --name: worker name in the topology file
# --topology: topology file
# --address: bind address
cake-cli --model /path/to/Meta-Llama-3-8B \
         --mode worker \
         --name worker0 \
         --topology topology.yml \
         --address 0.0.0.0:10128

To run a master node:

cake-cli --model /path/to/Meta-Llama-3-8B \
         --topology topology.yml

The topology.yml determines which layers are served by which worker:

linux_server_1:
  host: 'linux_server.host:10128'
  description: 'NVIDIA Titan X Pascal (12GB)'
  layers:
    - 'model.layers.0-5'

linux_server_2:
  host: 'linux_server2.host:10128'
  description: 'NVIDIA GeForce 3080 (10GB)'
  layers:
    - 'model.layers.6-16'

iphone:
  host: 'iphone.host:10128'
  description: 'iPhone 15 Pro Max'
  layers:
    - 'model.layers.17'

ipad:
  host: 'ipad.host:10128'
  description: 'iPad'
  layers:
    - 'model.layers.18-19'

macbook:
  host: 'macbook.host:10128'
  description: 'M1 Max'
  layers:
    - 'model.layers.20-31'
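To make the layer-range notation concrete, here is a small Python sketch that expands entries such as 'model.layers.0-5' into individual layer indices and reports any layer that no worker serves. The parsing rules are inferred from the example topology and are an assumption, not Cake's own parser; the `TOPOLOGY` dict simply copies the layer entries from the example (hosts and descriptions omitted), and the check range of 32 layers matches the indices 0-31 used there.

```python
import re

# Layer assignments copied from the example topology (hosts omitted).
TOPOLOGY = {
    "linux_server_1": ["model.layers.0-5"],
    "linux_server_2": ["model.layers.6-16"],
    "iphone": ["model.layers.17"],
    "ipad": ["model.layers.18-19"],
    "macbook": ["model.layers.20-31"],
}

# Assumed format: 'model.layers.A' or 'model.layers.A-B' (inclusive range).
LAYER_SPEC = re.compile(r"^model\.layers\.(\d+)(?:-(\d+))?$")


def expand(spec):
    """Turn 'model.layers.A-B' or 'model.layers.A' into a list of indices."""
    match = LAYER_SPEC.match(spec)
    if match is None:
        raise ValueError(f"unrecognized layer spec: {spec!r}")
    start = int(match.group(1))
    end = int(match.group(2)) if match.group(2) is not None else start
    if end < start:
        raise ValueError(f"descending range in layer spec: {spec!r}")
    return list(range(start, end + 1))


def layer_owners(topology):
    """Map each layer index to its worker, rejecting double assignments."""
    owners = {}
    for worker, specs in topology.items():
        for spec in specs:
            for layer in expand(spec):
                if layer in owners:
                    raise ValueError(
                        f"layer {layer} assigned to both {owners[layer]} and {worker}"
                    )
                owners[layer] = worker
    return owners


owners = layer_owners(TOPOLOGY)
missing = [i for i in range(32) if i not in owners]
print(f"{len(owners)} layers assigned, missing: {missing}")
```

A check like this can catch overlapping or mistyped ranges before any worker is started.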

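To reduce the model data each worker has to hold, split the model according to the topology:

# --model-path: source model to split
# --topology: topology file
# --output: output folder name
cake-split-model --model-path path/to/Meta-Llama-3-8B \
                 --topology path/to/topology.yml \
                 --output output-folder-name

Reference link: https://x.com/tuturetom/status/1812654489972973643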

The above is the detailed content of so cool! Old iPhone, iPad, and MacBook devices form a heterogeneous cluster and can run Llama 3. For more information, please follow other related articles on the PHP Chinese website!

Newest Annual Compilation Of The Best Prompt Engineering TechniquesApr 10, 2025 am 11:22 AM

Newest Annual Compilation Of The Best Prompt Engineering TechniquesApr 10, 2025 am 11:22 AMFor those of you who might be new to my column, I broadly explore the latest advances in AI across the board, including topics such as embodied AI, AI reasoning, high-tech breakthroughs in AI, prompt engineering, training of AI, fielding of AI, AI re

Europe's AI Continent Action Plan: Gigafactories, Data Labs, And Green AIApr 10, 2025 am 11:21 AM

Europe's AI Continent Action Plan: Gigafactories, Data Labs, And Green AIApr 10, 2025 am 11:21 AMEurope's ambitious AI Continent Action Plan aims to establish the EU as a global leader in artificial intelligence. A key element is the creation of a network of AI gigafactories, each housing around 100,000 advanced AI chips – four times the capaci

Is Microsoft's Straightforward Agent Story Enough To Create More Fans?Apr 10, 2025 am 11:20 AM

Is Microsoft's Straightforward Agent Story Enough To Create More Fans?Apr 10, 2025 am 11:20 AMMicrosoft's Unified Approach to AI Agent Applications: A Clear Win for Businesses Microsoft's recent announcement regarding new AI agent capabilities impressed with its clear and unified presentation. Unlike many tech announcements bogged down in te

Selling AI Strategy To Employees: Shopify CEO's ManifestoApr 10, 2025 am 11:19 AM

Selling AI Strategy To Employees: Shopify CEO's ManifestoApr 10, 2025 am 11:19 AMShopify CEO Tobi Lütke's recent memo boldly declares AI proficiency a fundamental expectation for every employee, marking a significant cultural shift within the company. This isn't a fleeting trend; it's a new operational paradigm integrated into p

IBM Launches Z17 Mainframe With Full AI IntegrationApr 10, 2025 am 11:18 AM

IBM Launches Z17 Mainframe With Full AI IntegrationApr 10, 2025 am 11:18 AMIBM's z17 Mainframe: Integrating AI for Enhanced Business Operations Last month, at IBM's New York headquarters, I received a preview of the z17's capabilities. Building on the z16's success (launched in 2022 and demonstrating sustained revenue grow

5 ChatGPT Prompts To Stop Depending On Others And Trust Yourself FullyApr 10, 2025 am 11:17 AM

5 ChatGPT Prompts To Stop Depending On Others And Trust Yourself FullyApr 10, 2025 am 11:17 AMUnlock unshakeable confidence and eliminate the need for external validation! These five ChatGPT prompts will guide you towards complete self-reliance and a transformative shift in self-perception. Simply copy, paste, and customize the bracketed in

AI Is Dangerously Similar To Your MindApr 10, 2025 am 11:16 AM

AI Is Dangerously Similar To Your MindApr 10, 2025 am 11:16 AMA recent [study] by Anthropic, an artificial intelligence security and research company, begins to reveal the truth about these complex processes, showing a complexity that is disturbingly similar to our own cognitive domain. Natural intelligence and artificial intelligence may be more similar than we think. Snooping inside: Anthropic Interpretability Study The new findings from the research conducted by Anthropic represent significant advances in the field of mechanistic interpretability, which aims to reverse engineer internal computing of AI—not just observe what AI does, but understand how it does it at the artificial neuron level. Imagine trying to understand the brain by drawing which neurons fire when someone sees a specific object or thinks about a specific idea. A

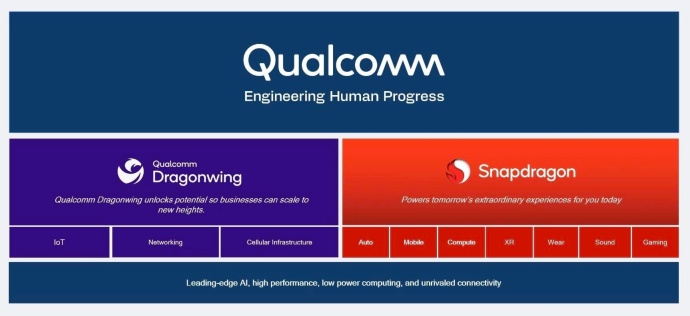

Dragonwing Showcases Qualcomm's Edge MomentumApr 10, 2025 am 11:14 AM

Dragonwing Showcases Qualcomm's Edge MomentumApr 10, 2025 am 11:14 AMQualcomm's Dragonwing: A Strategic Leap into Enterprise and Infrastructure Qualcomm is aggressively expanding its reach beyond mobile, targeting enterprise and infrastructure markets globally with its new Dragonwing brand. This isn't merely a rebran

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 English version

Recommended: Win version, supports code prompts!

SublimeText3 Mac version

God-level code editing software (SublimeText3)

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software