For the first time, neural activation of language has been localized to the cellular level

The highest-resolution map to date of the neurons that encode word meaning is here.

- Although our understanding of the brain regions that support language and semantic processing keeps growing, much remains unknown about how meaning is represented at the level of individual cells.

- A paper recently published in Nature tracked the activity of single neurons during natural speech processing and revealed a fine-grained cortical representation of semantic information.

- The paper is titled "Semantic encoding for language understanding at single-cell resolution".

- Paper address: https://www.nature.com/articles/s41586-024-07643-2

- The study produced the highest-resolution map to date of the neurons that encode the meanings of different words.

- The researchers recorded single-cell activity in the language-dominant left prefrontal cortex while participants listened to semantically distinct sentences and stories. The results suggest that, across individuals, the brain sorts words into the same broad categories, which helps us translate sounds into meaning.

- These neurons respond selectively to specific word meanings and reliably distinguish words from non-words. Moreover, rather than treating words as fixed memory representations, their activity is highly dynamic: it reflects each word's meaning in its particular sentence context, independent of the word's phonetic form.

- Overall, the study shows how these cell ensembles accurately predict the broad semantic categories of words as they are heard in real time during speech, and how they track the sentences in which those words appear. It also shows how hierarchical structures of meaning are encoded and how these representations map onto populations of cells. At the neuronal scale, these findings reveal the fine cortical organization of human semantic representations and begin to clarify how meaning is processed at the cellular level during language comprehension.

One neuron is responsible for everything

The same groups of neurons respond to words in similar categories (such as actions or words related to people). The researchers found that the brain links certain words with each other (such as "duck" and "egg"), so that they activate the same neurons. Words with similar meanings (such as "rat" and "mouse") trigger more similar activity patterns than words with different meanings (such as "rat" and "carrot"). Other neurons respond to more abstract concepts, including relational words like "above" and "behind".

The categories assigned to words were similar between participants, suggesting that the human brain groups meanings in the same way.

Prefrontal cortex neurons distinguish words by their meaning, not their sound. For example, hearing "son" activates neurons associated with family members, but hearing its homophone "sun" does not.

Based on these findings, the researchers could, to some extent, determine what people were hearing by observing how their neurons fired. They could not reconstruct the exact sentences, but they could make judgments about them: for example, that a sentence contained an animal, an action, and a food, in that order.

“Getting this level of detail and getting a glimpse of what’s happening at the level of individual neurons is very exciting,” said Vikash Gilja, an engineer at the University of California, San Diego and chief scientific officer of brain-computer interface company Paradromics. He was impressed that the researchers could identify not only the neurons that corresponded to words and their categories, but also the order in which they were spoken.

Gilja says that recording directly from neurons captures information much faster than earlier imaging methods. Being able to keep up with the natural speed of speech will be important for future brain-computer interfaces, a new type of device that could restore the ability to speak to people who have lost it.

Reference link: https://www.nature.com/articles/d41586-024-02146-6

The above is the detailed content of For the first time, neural activation of language has been localized to the cellular level. For more information, please follow other related articles on the PHP Chinese website!

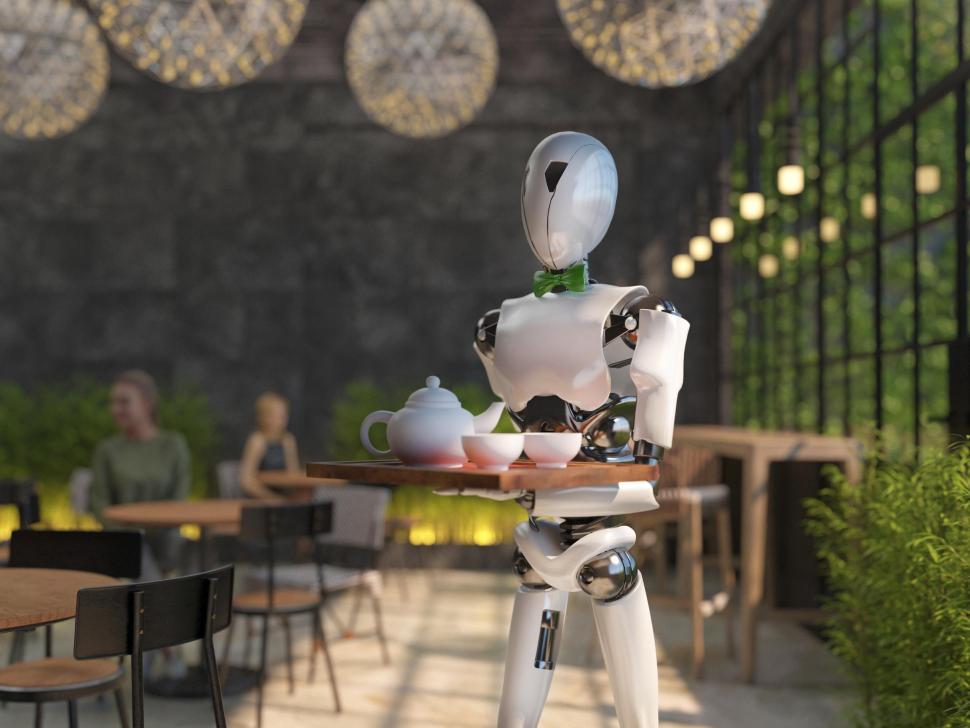

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AM

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AMMy recent conversation with Andy MacMillan, CEO of leading enterprise analytics platform Alteryx, highlighted this critical yet underappreciated role in the AI revolution. As MacMillan explains, the gap between raw business data and AI-ready informat

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Notepad++7.3.1

Easy-to-use and free code editor

Dreamweaver Mac version

Visual web development tools

SublimeText3 Linux new version

SublimeText3 Linux latest version