Hong Liang's research group at Shanghai Jiao Tong University and a Shanghai AI Laboratory team release FSFP, a language-model-based few-shot method for predicting protein function, published in a Nature sub-journal


Recently, the research group of Professor Hong Liang (Institute of Natural Sciences / School of Physics and Astronomy / Zhangjiang Institute for Advanced Study / School of Pharmacy, Shanghai Jiao Tong University), together with young researchers from the Shanghai Artificial Intelligence Laboratory, made an important breakthrough in protein mutation-property prediction.

The work adopts a new training strategy that greatly improves the performance of conventional pre-trained protein language models on mutation-property prediction while using very little wet-lab data.

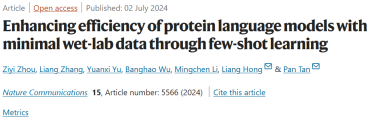

The results, titled "Enhancing the efficiency of protein language models with minimal wet-lab data through few-shot learning", were published in Nature Communications on July 2, 2024.

- https://www.nature.com/articles/s41467-024-49798-6

Research background

Enzyme engineering requires mutating and screening proteins to obtain a better protein product. Traditional wet-lab methods require repeated rounds of experiments, which is time-consuming and labor-intensive.

Deep learning methods can accelerate protein engineering through mutation, but they need a large amount of protein mutation data to train the model, and obtaining high-quality mutation data is itself limited by traditional wet-lab experiments.

There is therefore an urgent need for a method that can accurately predict the effect of mutations on protein function without large amounts of wet-lab data.

Research method

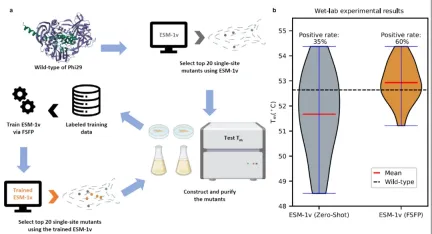

This study proposes FSFP, a method that combines meta-learning, learning to rank, and parameter-efficient fine-tuning to train a pre-trained protein model using only a few dozen wet-lab data points, greatly improving mutation-property prediction.
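To make the learning-to-rank idea concrete, here is a minimal sketch of one common listwise ranking loss, ListMLE. The article does not say which ranking loss FSFP uses, so the choice of ListMLE, the function name `listmle_loss`, and the toy scores and labels are all assumptions for illustration. The point is that the loss depends only on how the model orders the mutants, not on matching the measured values exactly.

```python
import math

def listmle_loss(scores, labels):
    """ListMLE: negative log-likelihood of the label-sorted ordering
    under a Plackett-Luce model defined by the predicted scores."""
    # Sort mutant indices from the best measured value to the worst.
    order = sorted(range(len(labels)), key=lambda i: labels[i], reverse=True)
    ranked = [scores[i] for i in order]
    loss = 0.0
    for i in range(len(ranked)):
        tail = ranked[i:]
        # Numerically stable log-sum-exp over the remaining candidates.
        m = max(tail)
        log_sum_exp = m + math.log(sum(math.exp(s - m) for s in tail))
        loss += log_sum_exp - ranked[i]
    return loss

# Hypothetical measured fitness values for three mutants.
labels = [0.9, 0.1, 0.5]
# Scores that agree with the measured ordering give a lower loss
# than scores that reverse it.
good = listmle_loss([2.0, -1.0, 0.5], labels)
bad = listmle_loss([-1.0, 2.0, 0.5], labels)
print(good < bad)
```

Because only the ordering matters, a loss of this kind suits small datasets where absolute measurements are noisy but relative rankings are still informative.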

FSFP method:

- Use the pre-trained protein model to evaluate the similarity between the target protein and the proteins in ProteinGym.

- Select the two ProteinGym datasets closest to the target protein as auxiliary tasks for meta-learning.

- Use GEMME's scores for the target protein as the third auxiliary task.

- Use a ranking loss function and LoRA training to train the pre-trained protein model on a small amount of wet-lab data.
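The first two steps above can be sketched as a nearest-neighbor lookup over protein embeddings. The article does not specify the similarity measure, so cosine similarity is an assumption here, and the function names, dataset names, and three-dimensional vectors are hypothetical stand-ins for embeddings produced by a pre-trained protein model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def pick_auxiliary_tasks(target_emb, dataset_embs, k=2):
    """Return the names of the k datasets whose protein embedding
    is most similar to the target protein's embedding."""
    ranked = sorted(
        dataset_embs,
        key=lambda name: cosine(target_emb, dataset_embs[name]),
        reverse=True,
    )
    return ranked[:k]

# Toy embeddings (hypothetical); real ones would come from the language model.
target = [0.9, 0.1, 0.3]
datasets = {
    "dataset_A": [0.8, 0.2, 0.4],
    "dataset_B": [-0.5, 0.9, 0.0],
    "dataset_C": [0.7, 0.0, 0.2],
    "dataset_D": [0.0, 1.0, 0.0],
}
chosen = pick_auxiliary_tasks(target, datasets)
print(chosen)
```

In this sketch the two selected datasets would serve as the meta-learning auxiliary tasks, with the GEMME scores for the target protein added as the third.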

Test results show that even when the original model's prediction correlation is below 0.1, FSFP can raise it above 0.5 after training on only 20 wet-lab data points.
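The article does not name the correlation measure. As an illustration, assuming it is a rank correlation such as Spearman's, the sketch below shows how such a score could be computed between model predictions and measured values. The function names and the toy numbers are hypothetical.

```python
def average_ranks(values):
    """Assign 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks.
    Assumes neither input is constant."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5

# Toy data: predictions that order the mutants exactly as measured.
predicted = [0.2, 0.9, 0.4, 0.7, 0.1]
measured = [1.0, 3.5, 2.0, 2.5, 0.5]
rho = spearman(predicted, measured)
print(rho)
```

A value near 0 means the predicted ordering is almost unrelated to the measured one, while a value near 1 means the two orderings largely agree.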

Research results

To further examine FSFP's effectiveness, the team also ran wet-lab experiments in a concrete case: engineering the Phi29 protein. With only 20 wet-lab data points used for training, FSFP raised the positive rate of the top-20 single-point mutations predicted by the original pre-trained protein model ESM-1v by 25% and identified nearly 10 new positive single-point mutations.
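The positive rate of the top-k predictions can be computed as in the sketch below. It assumes that a mutation counts as "positive" when its measured value exceeds the wild type's, which the article does not define; the mutation names, scores, and measurements are made up for illustration and are not data from the study.

```python
def top_k_positive_rate(predicted, measured, wild_type, k=20):
    """Fraction of the k highest-scoring mutations whose measured
    value beats the wild-type value (assumed definition of 'positive')."""
    top = sorted(predicted, key=predicted.get, reverse=True)[:k]
    hits = [m for m in top if measured[m] > wild_type]
    return len(hits) / len(top)

# Hypothetical model scores and wet-lab measurements for four mutations.
predicted = {"A10G": 0.9, "K25R": 0.7, "L40F": 0.4, "S77T": 0.1}
measured = {"A10G": 1.3, "K25R": 0.8, "L40F": 1.1, "S77T": 0.6}

rate = top_k_positive_rate(predicted, measured, wild_type=1.0, k=2)
print(rate)
```

Comparing this rate for the same k before and after fine-tuning gives the kind of improvement figure quoted above.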

Summary

In this work, the authors propose FSFP, a new fine-tuning method built on pre-trained protein models.

FSFP combines meta-learning, learning to rank, and parameter-efficient fine-tuning to train a pre-trained protein model efficiently using only 20 randomly selected wet-lab data points, and it greatly improves the positive rate of the model's single-point mutation predictions.

These results show that FSFP is of great value for shortening long experimental cycles and reducing experimental costs in current protein engineering.

Author information

Professor Hong Liang of the Institute of Natural Sciences / School of Physics and Astronomy / Zhangjiang Institute for Advanced Study at Shanghai Jiao Tong University and Tan Peng, a young researcher at the Shanghai Artificial Intelligence Laboratory, are the corresponding authors.

Zhou Ziyi, a postdoctoral fellow at the School of Physics and Astronomy of Shanghai Jiao Tong University, master's student Zhang Liang, doctoral student Yu Yuanxi, and Wu Banghao, a doctoral student at the School of Life Science and Technology, are the co-first authors.

The above is the detailed content of Da Hongliang's research group at Shanghai Jiao Tong University & Shanghai AI Laboratory team released FSFP, a small sample prediction method for protein function based on language model, which was published in the Nature sub-journal. For more information, please follow other related articles on the PHP Chinese website!

DSA如何弯道超车NVIDIA GPU?Sep 20, 2023 pm 06:09 PM

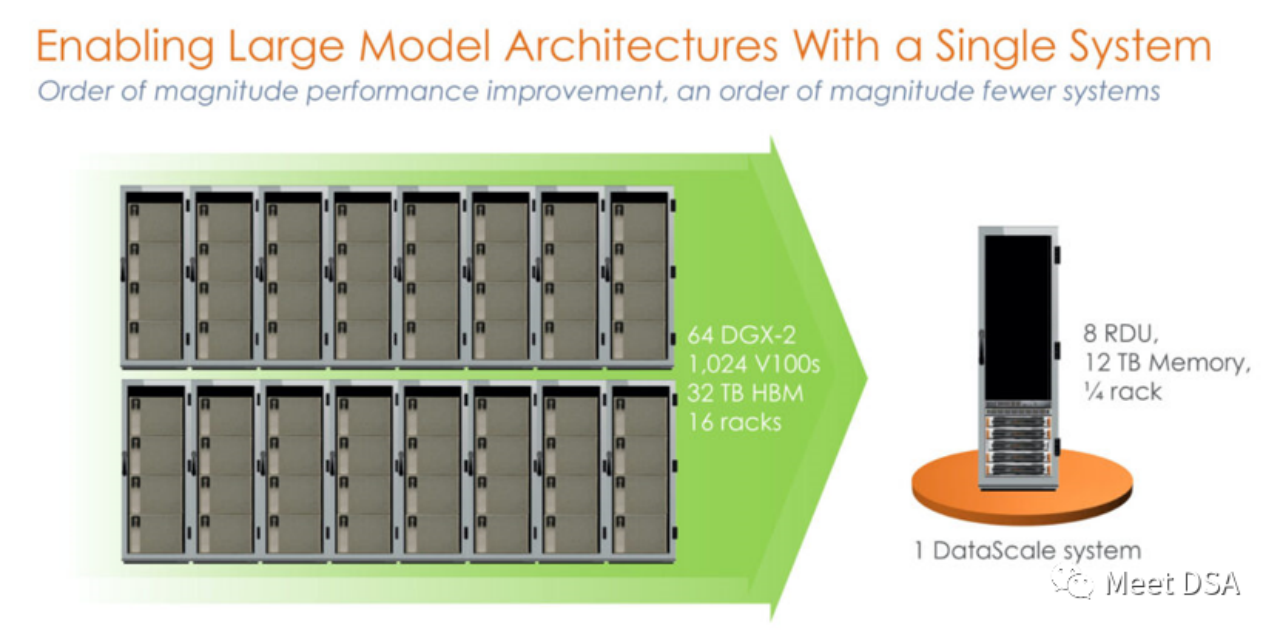

DSA如何弯道超车NVIDIA GPU?Sep 20, 2023 pm 06:09 PM你可能听过以下犀利的观点:1.跟着NVIDIA的技术路线,可能永远也追不上NVIDIA的脚步。2.DSA或许有机会追赶上NVIDIA,但目前的状况是DSA濒临消亡,看不到任何希望另一方面,我们都知道现在大模型正处于风口位置,业界很多人想做大模型芯片,也有很多人想投大模型芯片。但是,大模型芯片的设计关键在哪,大带宽大内存的重要性好像大家都知道,但做出来的芯片跟NVIDIA相比,又有何不同?带着问题,本文尝试给大家一点启发。纯粹以观点为主的文章往往显得形式主义,我们可以通过一个架构的例子来说明Sam

阿里云通义千问14B模型开源!性能超越Llama2等同等尺寸模型Sep 25, 2023 pm 10:25 PM

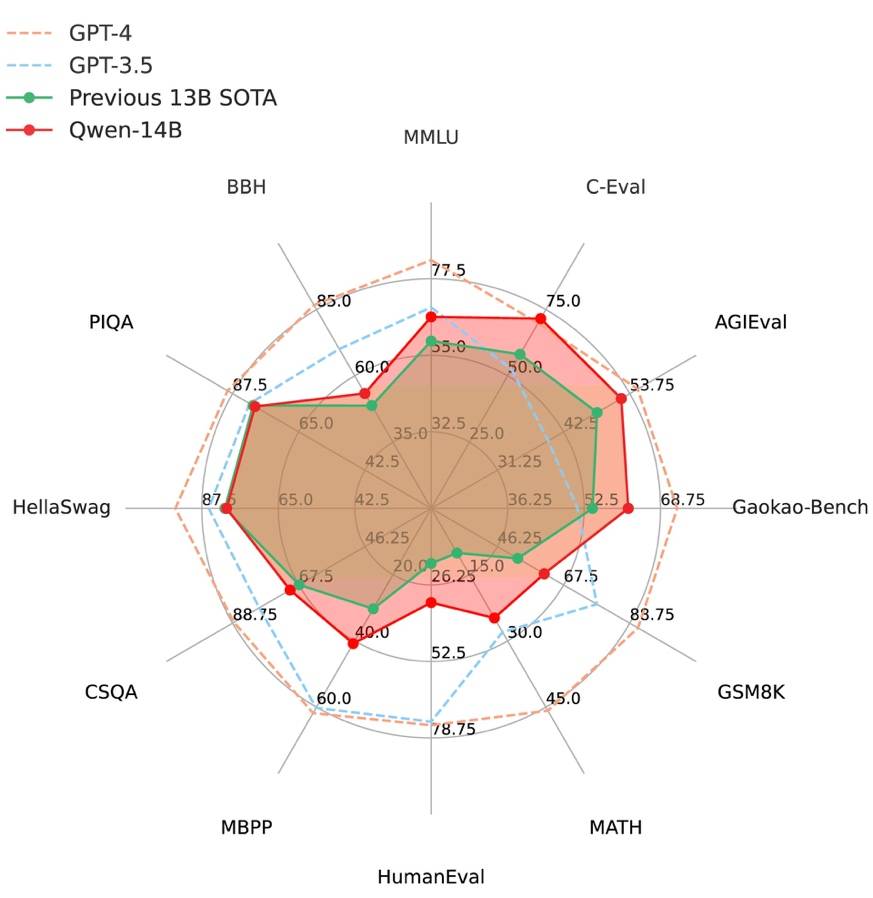

阿里云通义千问14B模型开源!性能超越Llama2等同等尺寸模型Sep 25, 2023 pm 10:25 PM2021年9月25日,阿里云发布了开源项目通义千问140亿参数模型Qwen-14B以及其对话模型Qwen-14B-Chat,并且可以免费商用。Qwen-14B在多个权威评测中表现出色,超过了同等规模的模型,甚至有些指标接近Llama2-70B。此前,阿里云还开源了70亿参数模型Qwen-7B,仅一个多月的时间下载量就突破了100万,成为开源社区的热门项目Qwen-14B是一款支持多种语言的高性能开源模型,相比同类模型使用了更多的高质量数据,整体训练数据超过3万亿Token,使得模型具备更强大的推

ICCV 2023揭晓:ControlNet、SAM等热门论文斩获奖项Oct 04, 2023 pm 09:37 PM

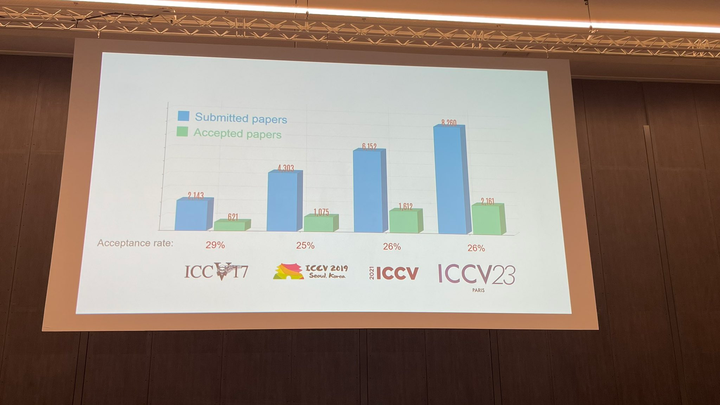

ICCV 2023揭晓:ControlNet、SAM等热门论文斩获奖项Oct 04, 2023 pm 09:37 PM在法国巴黎举行了国际计算机视觉大会ICCV(InternationalConferenceonComputerVision)本周开幕作为全球计算机视觉领域顶级的学术会议,ICCV每两年召开一次。ICCV的热度一直以来都与CVPR不相上下,屡创新高在今天的开幕式上,ICCV官方公布了今年的论文数据:本届ICCV共有8068篇投稿,其中有2160篇被接收,录用率为26.8%,略高于上一届ICCV2021的录用率25.9%在论文主题方面,官方也公布了相关数据:多视角和传感器的3D技术热度最高在今天的开

百度文心一言全面向全社会开放,率先迈出重要一步Aug 31, 2023 pm 01:33 PM

百度文心一言全面向全社会开放,率先迈出重要一步Aug 31, 2023 pm 01:33 PM8月31日,文心一言首次向全社会全面开放。用户可以在应用商店下载“文心一言APP”或登录“文心一言官网”(https://yiyan.baidu.com)进行体验据报道,百度计划推出一系列经过全新重构的AI原生应用,以便让用户充分体验生成式AI的理解、生成、逻辑和记忆等四大核心能力今年3月16日,文心一言开启邀测。作为全球大厂中首个发布的生成式AI产品,文心一言的基础模型文心大模型早在2019年就在国内率先发布,近期升级的文心大模型3.5也持续在十余个国内外权威测评中位居第一。李彦宏表示,当文心

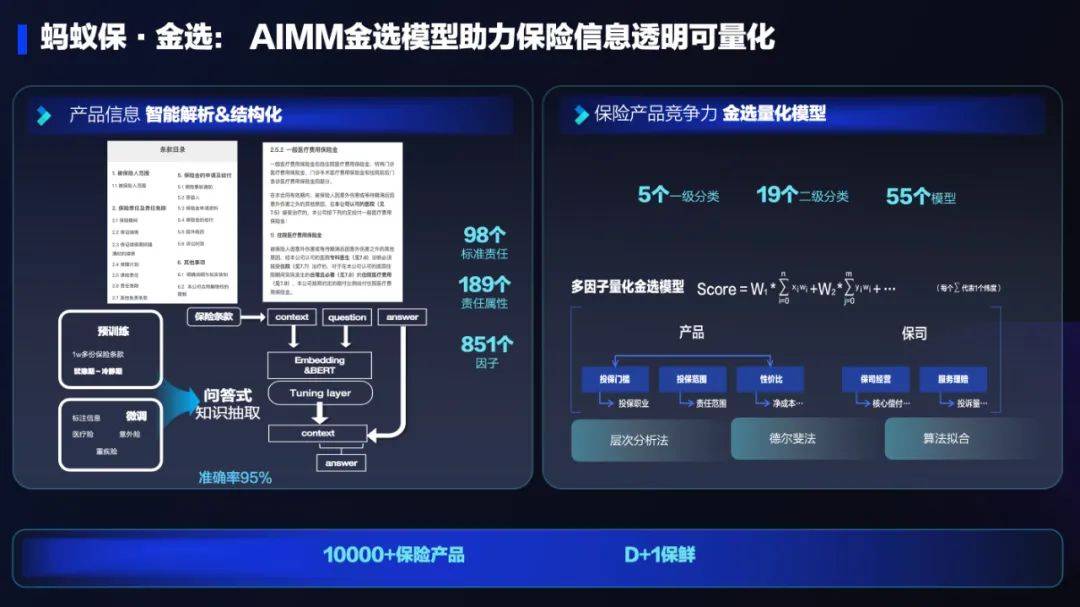

AI技术在蚂蚁集团保险业务中的应用:革新保险服务,带来全新体验Sep 20, 2023 pm 10:45 PM

AI技术在蚂蚁集团保险业务中的应用:革新保险服务,带来全新体验Sep 20, 2023 pm 10:45 PM保险行业对于社会民生和国民经济的重要性不言而喻。作为风险管理工具,保险为人民群众提供保障和福利,推动经济的稳定和可持续发展。在新的时代背景下,保险行业面临着新的机遇和挑战,需要不断创新和转型,以适应社会需求的变化和经济结构的调整近年来,中国的保险科技蓬勃发展。通过创新的商业模式和先进的技术手段,积极推动保险行业实现数字化和智能化转型。保险科技的目标是提升保险服务的便利性、个性化和智能化水平,以前所未有的速度改变传统保险业的面貌。这一发展趋势为保险行业注入了新的活力,使保险产品更贴近人民群众的实际

复旦大学团队发布中文智慧法律系统DISC-LawLLM,构建司法评测基准,开源30万微调数据Sep 29, 2023 pm 01:17 PM

复旦大学团队发布中文智慧法律系统DISC-LawLLM,构建司法评测基准,开源30万微调数据Sep 29, 2023 pm 01:17 PM随着智慧司法的兴起,智能化方法驱动的智能法律系统有望惠及不同群体。例如,为法律专业人员减轻文书工作,为普通民众提供法律咨询服务,为法学学生提供学习和考试辅导。由于法律知识的独特性和司法任务的多样性,此前的智慧司法研究方面主要着眼于为特定任务设计自动化算法,难以满足对司法领域提供支撑性服务的需求,离应用落地有不小的距离。而大型语言模型(LLMs)在不同的传统任务上展示出强大的能力,为智能法律系统的进一步发展带来希望。近日,复旦大学数据智能与社会计算实验室(FudanDISC)发布大语言模型驱动的中

致敬TempleOS,有开发者创建了启动Llama 2的操作系统,网友:8G内存老电脑就能跑Oct 07, 2023 pm 10:09 PM

致敬TempleOS,有开发者创建了启动Llama 2的操作系统,网友:8G内存老电脑就能跑Oct 07, 2023 pm 10:09 PM不得不说,Llama2的「二创」项目越来越硬核、有趣了。自Meta发布开源大模型Llama2以来,围绕着该模型的「二创」项目便多了起来。此前7月,特斯拉前AI总监、重回OpenAI的AndrejKarpathy利用周末时间,做了一个关于Llama2的有趣项目llama2.c,让用户在PyTorch中训练一个babyLlama2模型,然后使用近500行纯C、无任何依赖性的文件进行推理。今天,在Karpathyllama2.c项目的基础上,又有开发者创建了一个启动Llama2的演示操作系统,以及一个

快手黑科技“子弹时间”赋能亚运转播,打造智慧观赛新体验Oct 11, 2023 am 11:21 AM

快手黑科技“子弹时间”赋能亚运转播,打造智慧观赛新体验Oct 11, 2023 am 11:21 AM杭州第19届亚运会不仅是国际顶级体育盛会,更是一场精彩绝伦的中国科技盛宴。本届亚运会中,快手StreamLake与杭州电信深度合作,联合打造智慧观赛新体验,在击剑赛事的转播中,全面应用了快手StreamLake六自由度技术,其中“子弹时间”也是首次应用于击剑项目国际顶级赛事。中国电信杭州分公司智能亚运专班组长芮杰表示,依托快手StreamLake自研的4K3D虚拟运镜视频技术和中国电信5G/全光网,通过赛场内部署的4K专业摄像机阵列实时采集的高清竞赛视频,

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Chinese version

Chinese version, very easy to use

SublimeText3 Linux new version

SublimeText3 Linux latest version

Notepad++7.3.1

Easy-to-use and free code editor

Dreamweaver CS6

Visual web development tools