The latest progress of the Ant Bailing large model: it already has native multi-modal capabilities

On July 5, at the "Trusted Large Models Help Industrial Innovation and Development" forum of the 2024 World Artificial Intelligence Conference, Ant Group announced the latest progress on its self-developed Bailing large model: the model now has native multi-modal capabilities to "see", "listen", "speak" and "draw", and can directly understand and be trained on multi-modal data such as audio, video, images and text. Native multimodality is considered a necessary path to AGI, and in China only a few large-model vendors have achieved this capability. In the demonstration at the conference, the reporter saw that multi-modal technology lets large models perceive and interact more like humans, which supports upgrades to the agent experience. Bailing's multi-modal capabilities are already used in the "Alipay Intelligent Assistant" and will support upgrades to more agents on Alipay in the future.

- The multi-modal capabilities of the Bailing large model have reached GPT-4o level on MMBench-CN, the Chinese image-text understanding evaluation set, and were rated excellent (the highest level) in the multi-modal security capability evaluation of the China Academy of Information and Communications Technology. The model can support large-scale applications and a series of downstream tasks such as AIGC, image-text dialogue, video understanding, and digital humans.

- Multi-modal large model technology enables AI to better understand the complex information of the human world, and makes AI applications more consistent with human interaction habits. It has shown great application potential in fields such as intelligent customer service, autonomous driving, and medical diagnosis.

- Ant Group has a wealth of application scenarios. The multi-modal capabilities of the Bailing large model have already been applied in life services, search and recommendation, interactive entertainment, and other scenarios.

- In life services, Ant Group uses multi-modal models to implement ACT technology, giving the agent a degree of planning and execution capability. For example, it can order a cup of coffee directly in the Starbucks mini program based on the user's spoken instructions. This feature is now live in the Alipay Intelligent Assistant.

- In the medical field, multi-modal capabilities let users carry out complex tasks. The model can identify and interpret more than 100 complex medical test reports, and can also assess hair health and hair loss to assist with treatment.

(Visitors try ordering coffee with the Alipay Intelligent Assistant on-site in the Ant exhibition hall)

At the launch site, Xu Peng, Vice President of Ant Group, demonstrated more application scenarios that the newly upgraded multi-modal technology can achieve:

- Through natural video conversation, the AI assistant can identify the user's clothing and give outfit suggestions for a date;

- Suggest different recipe combinations from a set of ingredients according to the user's different intentions;

- Based on the physical symptoms described by the user, select potentially suitable medicines from a batch of medicines and read out the dosage instructions for the user's reference.

Building on the multi-modal capabilities of the Bailing large model, Ant Group has been exploring large-scale deployment in industry.

The "Alipay Multi-modal Medical Model", released at the forum at the same time, is one result of this exploration. It is understood that Alipay's multi-modal medical model incorporates tens of billions of Chinese and English image-text items covering reports, images, medicines and other multi-modal information, a medical text corpus at the hundred-billion scale, and a high-quality medical knowledge graph at the ten-million scale. It has professional medical knowledge, and ranked first on the A list and second on the B list of promptCBLUE, the Chinese medical LLM evaluation leaderboard.

Also built on the multi-modal capabilities of the Bailing large model, SkySense, a remote sensing model jointly developed by Ant Group and Wuhan University, announced an open source plan at the forum. SkySense is currently the multi-modal remote sensing foundation model with the largest parameter scale, the most comprehensive task coverage, and the highest recognition accuracy.

"The move from text-only semantic understanding to multi-modal capabilities is a key iteration of artificial intelligence technology, and the 'watching, listening, writing, and drawing' application scenarios enabled by multi-modal technology will make AI behave more realistically and more like humans. Ant will continue to invest in the research and development of native multi-modal technology," Xu Peng said.

The above is the detailed content of The latest progress of the Ant Bailing large model: it already has native multi-modal capabilities. For more information, please follow other related articles on the PHP Chinese website!

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AMHey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

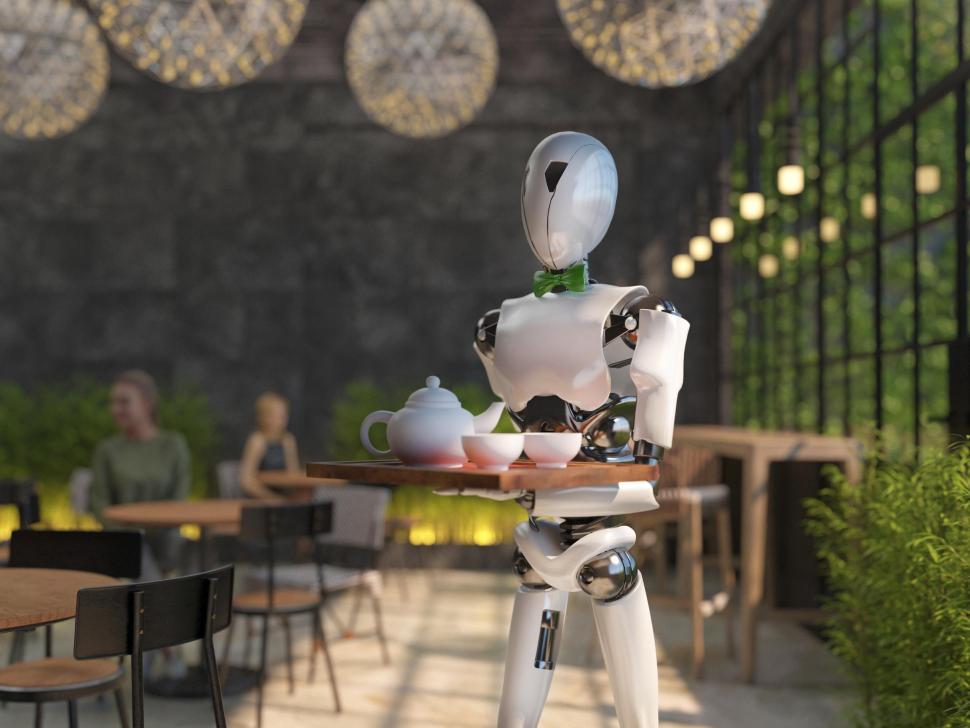

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Zend Studio 13.0.1

Powerful PHP integrated development environment