
Runway and Luma are fighting again! Yann LeCun blasts them: No matter how good you are, you are not a "world model"


Machine Power Report

Editor: Yang Wen

The wave of artificial intelligence represented by large models and AIGC has quietly changed the way we live and work, but most people still don't know how to use it.

That is why we have launched the "AI in Use" column, which uses intuitive, interesting and concise use cases to show in detail how AI can be applied and to spark everyone's thinking.

We also welcome readers to submit innovative use cases that they have personally practiced.

The AI video industry is "fighting" again!

On June 29, the well-known generative AI platform Runway announced that its latest model, Gen-3 Alpha, had begun opening to some users for testing.

On the same day, Luma launched a new keyframe feature and made it free for all users.

As the Chinese saying goes, "you have your scheme, I have my ladder over the wall": every move gets a countermeasure, and the two rivals keep trading blows.

This made netizens extremely happy, "June, what a wonderful month!"

"Crazy May, crazy June, so crazy that I can't stop!"

-1-

Runway kills Hollywood

Two weeks ago, when AI video "king" Runway unveiled its new video generation model Gen-3 Alpha, it said the model would first be available to paying users "within a few days", and that a free version would open to all users at some point in the future.

On June 29, Runway kept its promise and announced that Gen-3 Alpha had begun testing with some users.

Gen-3 Alpha is highly sought after because, compared with the previous generation, it brings significant improvements in lighting and shadow, image quality, composition, fidelity to the text prompt, physical simulation, and motion consistency. Its slogan even reads "For artists, by artists".

So how good is Gen-3 Alpha? The netizens who have tried it firsthand are best placed to judge. Enjoy:

Cinematic footage of a terrifying monster rising from the River Thames in London:

A sad teddy bear cries so hard that it ends up blowing its nose into a tissue:

A British girl in a gorgeous dress walks down a street overlooked by a castle, with cars speeding past and horses ambling beside her:

A huge lizard, studded with gorgeous jewels and pearls, walks through dense vegetation. The lizard sparkles in the light, and the footage is as realistic as a documentary.

There is also a jewel-encrusted toad covered in rubies and sapphires:

On a city street at night, the rain mirrors the neon lights.

The camera starts on the light reflected in a puddle, slowly rises to reveal a glowing neon billboard, and then keeps pulling back to show the entire rain-soaked street. In a single continuous move, the shot lifts up and pulls back to capture the city on this rainy night.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Yellow mold grows in a petri dish; under dim, mysterious light it takes on cool tones and looks full of motion.

In the autumn forest, the ground is covered with various orange, yellow and red fallen leaves.

A gentle breeze blows by, and the camera glides forward close to the ground. A whirlwind begins to form, picking up the fallen leaves into a spiral. The camera rises with the leaves and circles the spinning column.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Starting from a low angle in a graffiti-covered tunnel, the camera advances steadily along the road through a short, dark stretch of tunnel. As it emerges on the other side, it quickly rises to reveal a vast field of colorful wildflowers ringed by snow-capped mountains.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

A close-up of hands playing the piano: the fingers dance across the keys with no hand distortion and smooth movement. The only flaw is that there is no ring on the ring finger, yet a ring's shadow appears out of nowhere.

Netizens also dug up a video that Runway co-founder Cristóbal Valenzuela generated for his homemade "bee camera".

With the camera mounted on a bee's back, the footage looks like this:

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

With the camera mounted on the bee's face, the footage turns purple:

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

So, what does this pocket camera look like?

If AI continues to evolve like this, Hollywood actors will go on strike again.

-2-

Luma's new keyframe feature: smooth transitions between frames

On June 29, Luma AI launched its keyframe feature and, in a generous move, made it free for all users right away.

Users simply upload a start image and an end image and add a text description, and Luma generates a Hollywood-grade special-effects video.

For example, X user @hungrydonke uploaded two keyframe photos:


and then entered the prompt "A bunch of black confetti suddenly falls". Here is the result:

X user @JonathanSolder3 first used Midjourney to generate two images:


He then used Luma's keyframe feature to generate a Super Saiyan transformation animation. According to the author, Luma needed no power-up prompt; simply entering "Super Saiyan" was enough.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

One netizen used the feature to create the transition between every shot, mixing and matching classic fairy tales into an animation called "The Wolf, the Warrior, and the Wardrobe".

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Devil turns into angel:

Orange turns into chick:

Starbucks logo transformation:

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

The AI video race is this frantic, and who knows how Sora keeps its composure and still hasn't shown up.

-3-

Yann LeCun opens fire: They don't understand physics at all

When Sora was released at the beginning of the year, "world model" suddenly became a hot concept.

Later, Google's Genie also flew the "world model" banner. When Runway launched Gen-3 Alpha this time, the company said it "took an important step towards building a general world model."

What exactly is a world model?

In fact, there is no standard definition, but AI scientists believe that humans and animals implicitly grasp the rules by which the world operates, which lets them "predict" what will happen next and act accordingly. World model research aims to give AI this ability.

Many people believe that the videos generated by applications such as Sora, Luma, and Runway are quite realistic and can also generate new video content in chronological order. They seem to have learned the ability to "predict" the development of things. This coincides with the goal pursued by world model research.

However, Turing Award winner Yann LeCun has been "pouring cold water".

In his view, "Producing the most realistic-looking videos based on prompts does not mean that the system understands the physical world, and generation is very different from causal prediction with a world model."

On July 1, Yann LeCun posted six times in a row, taking aim at video generation models.

He retweeted an AI-generated gymnastics video in which the characters' heads vanish into thin air or four legs suddenly sprout, with bizarre frames everywhere.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Yann LeCun said that video generation models do not understand basic physical principles, let alone the structure of the human body.

"Sora and other video generation models have similar problems. There is no doubt that video generation technology will become more advanced over time, but a good world model that truly understands physics will not be generative. All birds and mammals understand physics better than any video generation model, yet none of them can generate detailed videos," said Yann LeCun.

Some netizens questioned: Don’t humans constantly generate detailed “videos” in their minds based on their understanding of physics?

Yann LeCun replied, "We imagine abstract scenarios of what might happen, rather than generating pixel images. That is the point I am making."

He pushed back again: "No, they don't. They only generate abstract scenarios of what might happen, which is very different from generating detailed videos."

In the future, we will bring more AIGC case demonstrations through new columns, and everyone is welcome to join the group for communication.

The above is the detailed content of Runway and Luma are fighting again! Yann LeCun bombards: No matter how good you are, you are not a 'world model'. For more information, please follow other related articles on the PHP Chinese website!

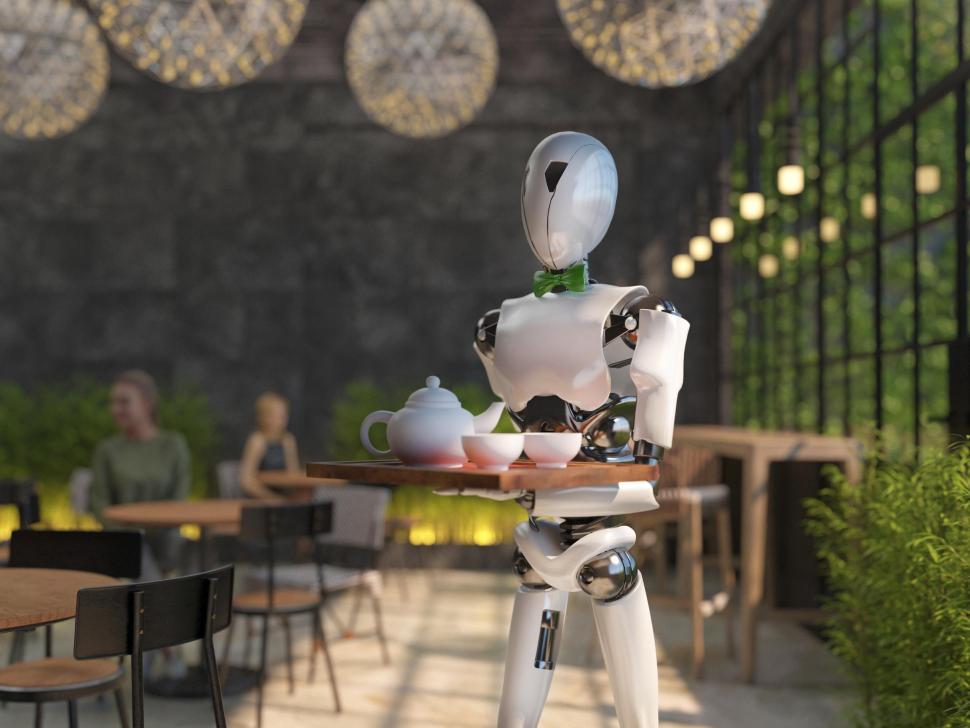

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AM

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AMMy recent conversation with Andy MacMillan, CEO of leading enterprise analytics platform Alteryx, highlighted this critical yet underappreciated role in the AI revolution. As MacMillan explains, the gap between raw business data and AI-ready informat

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Notepad++7.3.1

Easy-to-use and free code editor

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 Linux new version

SublimeText3 Linux latest version