Grab a bargain: get started with Luchen Open-Sora video generation for 10 yuan

The video generation track has been booming lately, with text-to-video, image-to-video, and more. Yet despite the many models on the market, most people still cannot try them because they lack internal-testing access, and can only gaze at the models and sigh. Not long ago, we reported on Luchen Technology's Open-Sora model. Billed as the world's first open-source Sora-like model, it not only performs well on many types of video but is also low-cost and available to everyone. Does it actually work? How do you use it? Let's take a look at our hands-on review.

The recently open-sourced Open-Sora 1.2 can generate 720p high-definition videos up to 16 seconds long. The official demo is shown below:

The results are impressive. No wonder so many readers have messaged us asking to try it themselves.

Compared with the many closed-source tools that require long waits for internal-testing access, the fully open-source Open-Sora is clearly more accessible. However, Open-Sora's official GitHub page is dense with technical detail and code. If you want to deploy it yourself, the model's high hardware requirements aside, configuring the environment is also a real test of your coding skills.

So is there any way to make it easy for even novice AI users to use Open-Sora?

The conclusion first: yes, and it can be deployed with one click. Once it is running, you can also control the video length, aspect ratio, camera motion, and other parameters without writing any code.

Tempted? Then let's look at how to deploy Open-Sora. A detailed step-by-step tutorial and the relevant links are at the end of the article, and no technical background is needed.

A Gradio-based visual interface

We have already published an in-depth report on the latest technical details of Open-Sora, focusing on the core architecture of the Open-Sora model and its innovative video compression network (VAE). At the end of that article, we mentioned that the Luchen Open-Sora team provides a Gradio application that can be deployed with one click. So what exactly does this Gradio application look like?

Gradio itself is a Python package designed for rapid deployment of machine learning models. It allows developers to automatically generate a web interface by defining the input and output of the model, thereby simplifying the online display and interaction process of the model.
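As a rough illustration of that idea, the sketch below wraps a placeholder function in a Gradio interface by declaring its inputs and outputs. The function `describe_request` and its parameters are hypothetical stand-ins of our own, not Open-Sora's actual application code, and the Gradio import is deferred so the helper can be tried without Gradio installed.

```python
def describe_request(prompt: str, seconds: float) -> str:
    """Hypothetical stand-in for a model call: it only echoes the request."""
    return f"Would generate a {seconds:g}s video for: {prompt.strip()}"


def build_demo():
    """Build a web UI from the function's declared inputs and outputs."""
    import gradio as gr  # lazy import; requires `pip install gradio`

    return gr.Interface(
        fn=describe_request,
        inputs=[
            gr.Textbox(label="Prompt"),
            gr.Slider(minimum=2, maximum=16, step=2, value=4, label="Length (s)"),
        ],
        outputs=gr.Textbox(label="Result"),
    )
```

Calling `build_demo().launch()` would then serve the generated page, by default on port 7860.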

After reading Open-Sora's GitHub home page carefully, we found that the application combines the Open-Sora model with Gradio to provide a clean, concise way to interact with it.

The graphical interface makes operation easier. Users can freely change basic parameters such as the duration, aspect ratio, and resolution of the generated video, and can also adjust the motion strength, aesthetic score, and more advanced camera movement options. It also supports calling GPT-4 to refine the prompt, so both Chinese and English text input are supported.

Once the application is deployed, users do not need to write any code to use the Open-Sora model. They only need to enter a prompt and click to change parameters in order to try different combinations. The generated video is displayed directly in the Gradio interface and can be downloaded from the web page, with no need to dig through file paths.

Image source: https://github.com/hpcaitech/Open-Sora/blob/main/assets/readme/gradio_basic.png

The Open-Sora team provides the script that adapts the model to Gradio on GitHub, along with the command-line instructions for deployment. However, we still have to get through a complex environment setup before the deployment command will run. And to experience the full functionality of Open-Sora, especially generating long, high-resolution videos (such as 720p at 16 seconds), we need a high-performance graphics card with plenty of video memory (the official setup uses the H800). The Gradio solution does not say how to solve these two problems.

These two problems may look daunting at first, but Luchen Cloud solves both, making deployment easy without technical expertise. How do you get started? We have put together a very simple tutorial.

Super simple one-click deployment tutorial

How easy is it to deploy Open-Sora on Luchen Cloud?

First, Luchen Cloud offers several types of graphics cards, and high-end cards such as the A800 and H800 can be rented easily. In our testing, a single one of these 80 GB cards was enough to meet the inference requirements of the Open-Sora project.

Second, Luchen Cloud provides a dedicated image for the Open-Sora project. The image is like a fully furnished apartment that you can move into with just your luggage: the whole runtime environment starts with one click, with no complex environment configuration.

Finally, Luchen Cloud also offers very favorable prices and flexible service. An A800 card costs less than 10 yuan per hour, and the time spent initializing the image is not billed. You can shut down the cloud host at any time, and billing stops. In other words, for less than 10 yuan per hour you can enjoy the full Open-Sora experience. We have also included a way to get a 100 yuan coupon at the end of the article, so register an account, claim the coupon, and follow along with our tutorial!

Luchen Cloud website: https://cloud.luchentech.com/

First, go to the website and register a Luchen Cloud account. On the main page you can see the machines available for rent in the computing power market. Claim a coupon or top up 10 yuan, then follow Luchen Cloud's user guide to start building a cloud host.

The first step is to choose an image. Open the public images and the first one listed is OpenSora (1.2), which is very convenient.

The second step is to choose the billing method. There are two: tidal billing and pay-as-you-go billing. We tried both and found that tidal billing saves money, with the A800 even cheaper during idle periods.

For Open-Sora inference, one A800 is enough, so we chose a 1-card configuration and enabled SSH connection, storage persistence, and mounting of public data (which includes the model weights). These features are free of charge and add a lot of convenience.

After making your selections, click Create. The cloud host starts quickly, and the machine is up within tens of seconds. This startup time is not billed, so even if a larger image takes a long time to load, you don't have to worry about the cost.

In the third step, we click JupyterLab on the cloud host page to open the web interface. A terminal was already open for us as soon as we entered.

We enter ls to view the files on the cloud host and can see the Open-Sora folder in the initial path.

Since we are using the dedicated Open-Sora image, we do not need to install any additional environment. The most time-consuming step is already taken care of.

At this point, we can directly enter the command that launches Gradio, truly achieving one-click deployment.

python gradio/app.py

It is fast: Gradio is up and running in a little over ten seconds.

However, we found that Gradio runs on the server at http://0.0.0.0:7860 by default. To use it in your local browser, you first need to add your SSH public key to the Luchen Cloud machine. This step is also simple: open the file shown below and paste in the public key of your local machine.

Next, we need to run the port-mapping command on the local machine. We can follow the instructions in this screenshot, replacing the address and port with those of your own cloud host.
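Since the screenshot with the exact command is not reproduced here, the following is only a sketch of what a local port-forwarding command typically looks like with OpenSSH. The host name, SSH port, and user name are placeholders (assumptions on our part) and must be replaced with the values shown for your own cloud host.

```python
import shlex


def port_forward_command(host: str, ssh_port: int, user: str = "root",
                         local_port: int = 7860, remote_port: int = 7860) -> str:
    """Return an OpenSSH command mapping a local port to the remote Gradio port."""
    args = [
        "ssh",
        "-N",                                            # tunnel only, no remote shell
        "-L", f"{local_port}:localhost:{remote_port}",   # local -> remote mapping
        "-p", str(ssh_port),                             # SSH port of the cloud host
        f"{user}@{host}",
    ]
    return shlex.join(args)


# Placeholder values for illustration only.
print(port_forward_command("cloud-host.example.com", 2222))
```

With such a tunnel open, the Gradio page served on the remote port 7860 would be reachable at http://localhost:7860 in the local browser.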

Then open the corresponding web page, and the visual interface soon appears.

We first entered an arbitrary English prompt and clicked generate (using the default 480p, which is faster).

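a river flowing through a rich landscape of trees and mountains

Generation finished quickly, taking about 40 seconds. The result is decent overall: there is a river, mountains, and trees, matching the prompt. But what we were hoping for was the view of an eagle looking down from high above.

No problem. We adjusted the prompt and tried again:

a bird's eye view of a river flowing through a rich landscape of trees and mountains

This time the output did have a bird's-eye view. Not bad: the model follows instructions well.

As mentioned earlier, the Gradio interface has many other options, such as resolution, aspect ratio, and video length, and it can even control the amount of motion in the video, so there is a lot to play with. We tested at 480p, but up to 720p is supported. Try the options one by one and see how different combinations turn out.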

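Want to go further? Fine-tuning is easy to pick up too

Digging further into the Open-Sora web page, we found that they also provide commands for further fine-tuning the model. By fine-tuning the model on the kinds of videos we like, we can make it generate videos that better match our own aesthetic preferences!

Let's verify this using the video data provided in Luchen Cloud's public data.

Since the environment is fully configured, we only need to copy and paste the training command.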

torchrun --standalone --nproc_per_node 1 scripts/train.py configs/opensora-v1-2/train/stage1.py --data-path /root/commonData/Inter4K/meta/meta_inter4k_ready.csv

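This prints a stream of model training information.

Training has started normally, and it runs on just a single card!

(Pitfall: before this we hit an OOM error, and found that GPU memory was still occupied after the program crashed. It turned out we had forgotten to shut down the Gradio inference from the previous step. So when training on a single card, be sure to close Gradio first, because Gradio keeps the model loaded while it waits for user input.)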
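One way to catch this before launching training is to check how much GPU memory is already in use. The sketch below parses the CSV output of `nvidia-smi`; the helper names and the 1 GiB threshold are our own assumptions and are not part of Open-Sora.

```python
import subprocess

# Standard nvidia-smi query: used and total memory in MiB, one line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def parse_memory(csv_text: str) -> list[tuple[int, int]]:
    """Parse 'used, total' MiB pairs, one line per GPU."""
    pairs = []
    for line in csv_text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        pairs.append((used, total))
    return pairs


def busy_gpus(pairs, threshold_mib: int = 1024) -> list[int]:
    """Return indices of GPUs whose used memory exceeds the threshold."""
    return [i for i, (used, _) in enumerate(pairs) if used > threshold_mib]


def check_before_training() -> list[int]:
    """Query nvidia-smi (must be on PATH) and report GPUs that look occupied."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return busy_gpus(parse_memory(out))
```

If `check_before_training()` returns a non-empty list, something (for example a Gradio process that is still running) is probably holding memory on those cards.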

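Here is the GPU resource usage during our training:

A quick calculation: one training step takes about 20 seconds. According to the figures Open-Sora provides, training ran for 70k steps (as shown in the figure below), so it would have taken about 16 days, close to the roughly two weeks claimed in their documentation (assuming each of their machines completes a step in about the same time as ours).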
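The estimate can be checked with a few lines of arithmetic. The 20 seconds per step is our own rough measurement and the 70k steps come from Open-Sora's figures; the variable names are ours.

```python
SECONDS_PER_STEP = 20    # rough measurement on our single card
TOTAL_STEPS = 70_000     # total training steps reported by Open-Sora

total_seconds = SECONDS_PER_STEP * TOTAL_STEPS
total_days = total_seconds / 86_400  # 86,400 seconds in a day

print(f"{total_days:.1f} days")  # prints "16.2 days"
```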

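Of these 70k steps, stage one accounts for 30k and stage two for 23k, so stage three actually trained for only 17k steps. Stage three is fine-tuning on high-quality videos, which greatly improves model quality, and it is exactly what we want to do now.

According to the report, however, their training used 12 machines with 8 cards each. At about 20 seconds per step, 17k steps take roughly 95 hours, so training on the same amount of data as stage three on the Luchen Cloud platform would cost approximately:

95 hours * 8 cards * 12 machines * 10 yuan/hour = 91,200 yuan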
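The cost figure can be reproduced for any stage with a small helper. This is a sketch under the article's assumptions: about 20 seconds per step, 12 machines with 8 cards each, and 10 yuan per card-hour taken as the upper bound quoted for an A800. The function name `stage_cost` is hypothetical.

```python
import math

SECONDS_PER_STEP = 20
STAGE_STEPS = {"stage1": 30_000, "stage2": 23_000, "stage3": 17_000}
CARDS_PER_MACHINE, MACHINES = 8, 12
YUAN_PER_CARD_HOUR = 10  # upper bound; the quoted A800 price is below this


def stage_cost(steps: int) -> tuple[int, int]:
    """Return (hours rounded up, total cost in yuan) for a number of steps."""
    hours = math.ceil(steps * SECONDS_PER_STEP / 3600)
    cost = hours * CARDS_PER_MACHINE * MACHINES * YUAN_PER_CARD_HOUR
    return hours, cost


hours, cost = stage_cost(STAGE_STEPS["stage3"])
print(f"{hours} hours, {cost} yuan")  # prints "95 hours, 91200 yuan"
```

Because billing is per card-hour, cheaper off-peak rates or fewer steps scale the total down proportionally.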

That is still a fairly high bar for a casual review, but it is very cost-effective for building a custom text-to-video model. For enterprises in particular, almost no preparatory work is needed: by following the tutorial step by step, a fine-tuning run can be completed for under 100,000 yuan. We look forward to seeing more enhanced versions of Open-Sora in specialized fields!

Finally, here is the 100 yuan coupon offer we mentioned earlier. Our review cost less than 10 yuan, but money saved is money saved!

According to Luchen Cloud's official information, users who share their experience on social media and professional forums (such as Zhihu, Xiaohongshu, Weibo, and CSDN) with #潞晨云 or @潞晨科技 receive a 100 yuan voucher (valid for one week) for each valid share, which is equivalent to five or six hundred videos generated in our evaluation.

We have collected the relevant resource links below so that everyone can get started quickly. If you want to try it right away, click "Read the original" to jump straight there and start your AI video journey!

Related resource links:

Luchen Cloud platform: https://cloud.luchentech.com/

Open-Sora code base: https://github.com/hpcaitech/Open-Sora/tree/main?tab=readme-ov-file#inference

Bilibili tutorial: https://www.bilibili.com/video/BV1ow4m1e7PX/?vd_source=c6b752764cd36ff0e535a768e35d98d2

The above is the detailed content of Come quickly! Luchen Open-Sora can collect wool, and you can easily start video generation for 10 yuan.. For more information, please follow other related articles on the PHP Chinese website!

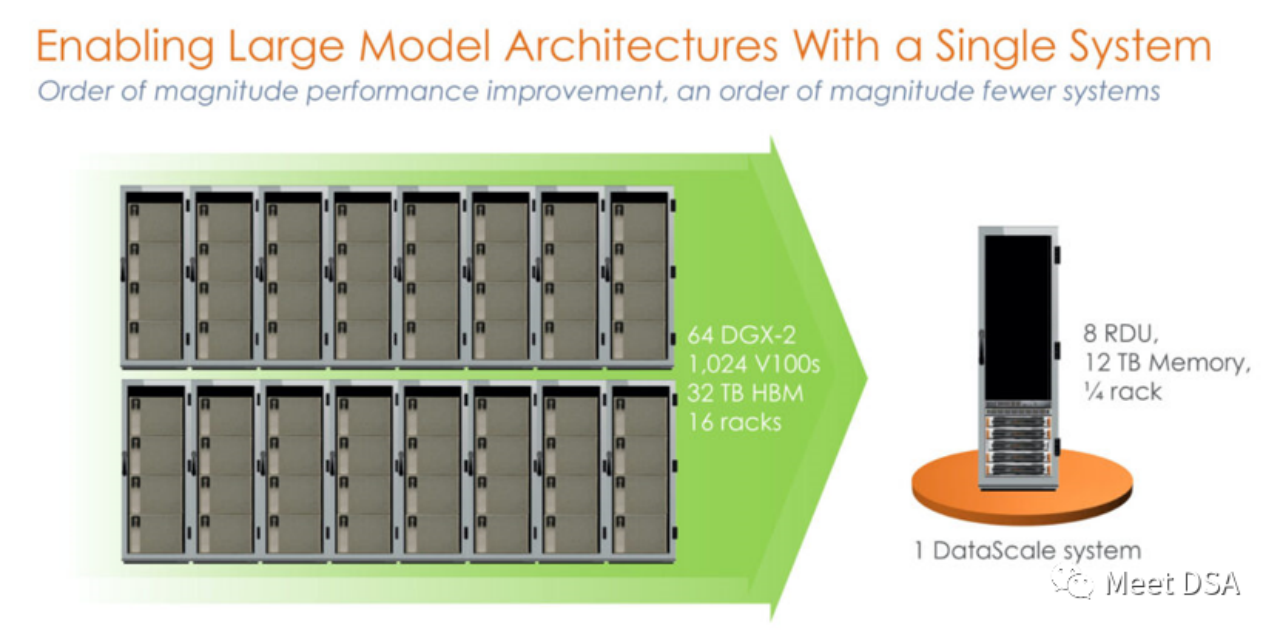

DSA如何弯道超车NVIDIA GPU?Sep 20, 2023 pm 06:09 PM

DSA如何弯道超车NVIDIA GPU?Sep 20, 2023 pm 06:09 PM你可能听过以下犀利的观点:1.跟着NVIDIA的技术路线,可能永远也追不上NVIDIA的脚步。2.DSA或许有机会追赶上NVIDIA,但目前的状况是DSA濒临消亡,看不到任何希望另一方面,我们都知道现在大模型正处于风口位置,业界很多人想做大模型芯片,也有很多人想投大模型芯片。但是,大模型芯片的设计关键在哪,大带宽大内存的重要性好像大家都知道,但做出来的芯片跟NVIDIA相比,又有何不同?带着问题,本文尝试给大家一点启发。纯粹以观点为主的文章往往显得形式主义,我们可以通过一个架构的例子来说明Sam

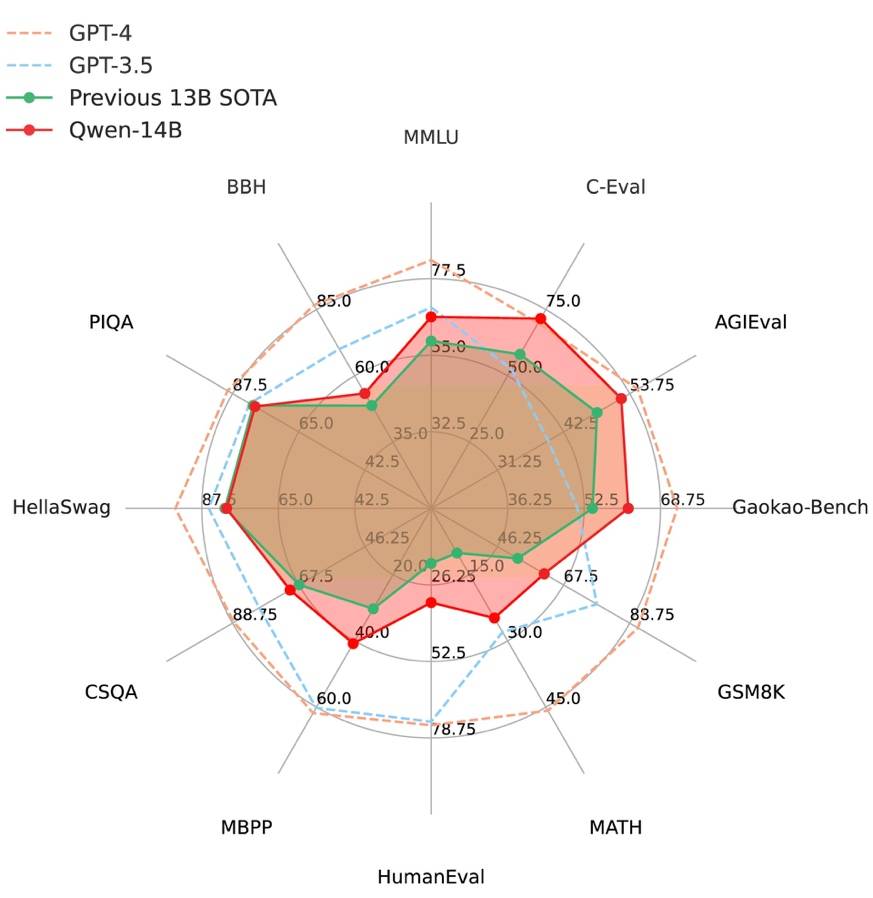

阿里云通义千问14B模型开源!性能超越Llama2等同等尺寸模型Sep 25, 2023 pm 10:25 PM

阿里云通义千问14B模型开源!性能超越Llama2等同等尺寸模型Sep 25, 2023 pm 10:25 PM2021年9月25日,阿里云发布了开源项目通义千问140亿参数模型Qwen-14B以及其对话模型Qwen-14B-Chat,并且可以免费商用。Qwen-14B在多个权威评测中表现出色,超过了同等规模的模型,甚至有些指标接近Llama2-70B。此前,阿里云还开源了70亿参数模型Qwen-7B,仅一个多月的时间下载量就突破了100万,成为开源社区的热门项目Qwen-14B是一款支持多种语言的高性能开源模型,相比同类模型使用了更多的高质量数据,整体训练数据超过3万亿Token,使得模型具备更强大的推

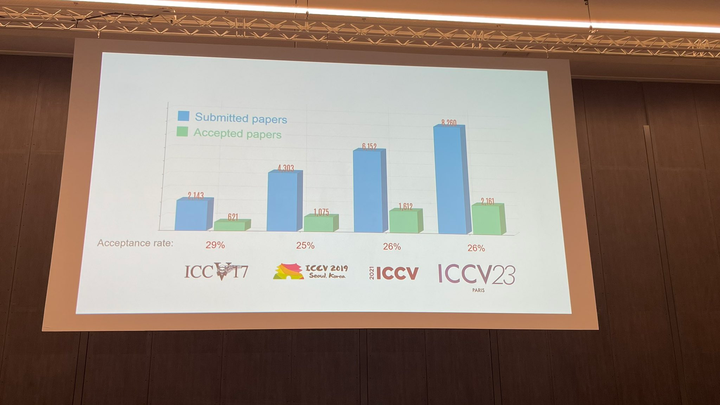

ICCV 2023揭晓:ControlNet、SAM等热门论文斩获奖项Oct 04, 2023 pm 09:37 PM

ICCV 2023揭晓:ControlNet、SAM等热门论文斩获奖项Oct 04, 2023 pm 09:37 PM在法国巴黎举行了国际计算机视觉大会ICCV(InternationalConferenceonComputerVision)本周开幕作为全球计算机视觉领域顶级的学术会议,ICCV每两年召开一次。ICCV的热度一直以来都与CVPR不相上下,屡创新高在今天的开幕式上,ICCV官方公布了今年的论文数据:本届ICCV共有8068篇投稿,其中有2160篇被接收,录用率为26.8%,略高于上一届ICCV2021的录用率25.9%在论文主题方面,官方也公布了相关数据:多视角和传感器的3D技术热度最高在今天的开

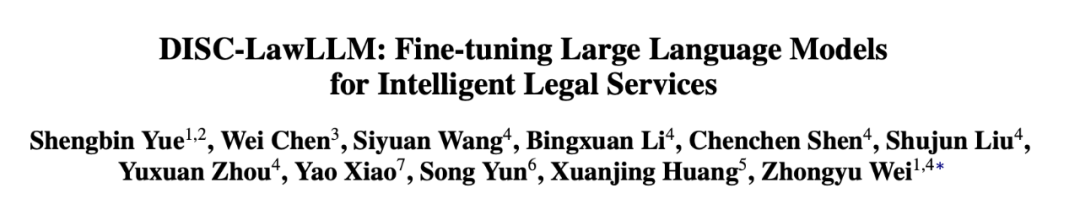

复旦大学团队发布中文智慧法律系统DISC-LawLLM,构建司法评测基准,开源30万微调数据Sep 29, 2023 pm 01:17 PM

复旦大学团队发布中文智慧法律系统DISC-LawLLM,构建司法评测基准,开源30万微调数据Sep 29, 2023 pm 01:17 PM随着智慧司法的兴起,智能化方法驱动的智能法律系统有望惠及不同群体。例如,为法律专业人员减轻文书工作,为普通民众提供法律咨询服务,为法学学生提供学习和考试辅导。由于法律知识的独特性和司法任务的多样性,此前的智慧司法研究方面主要着眼于为特定任务设计自动化算法,难以满足对司法领域提供支撑性服务的需求,离应用落地有不小的距离。而大型语言模型(LLMs)在不同的传统任务上展示出强大的能力,为智能法律系统的进一步发展带来希望。近日,复旦大学数据智能与社会计算实验室(FudanDISC)发布大语言模型驱动的中

百度文心一言全面向全社会开放,率先迈出重要一步Aug 31, 2023 pm 01:33 PM

百度文心一言全面向全社会开放,率先迈出重要一步Aug 31, 2023 pm 01:33 PM8月31日,文心一言首次向全社会全面开放。用户可以在应用商店下载“文心一言APP”或登录“文心一言官网”(https://yiyan.baidu.com)进行体验据报道,百度计划推出一系列经过全新重构的AI原生应用,以便让用户充分体验生成式AI的理解、生成、逻辑和记忆等四大核心能力今年3月16日,文心一言开启邀测。作为全球大厂中首个发布的生成式AI产品,文心一言的基础模型文心大模型早在2019年就在国内率先发布,近期升级的文心大模型3.5也持续在十余个国内外权威测评中位居第一。李彦宏表示,当文心

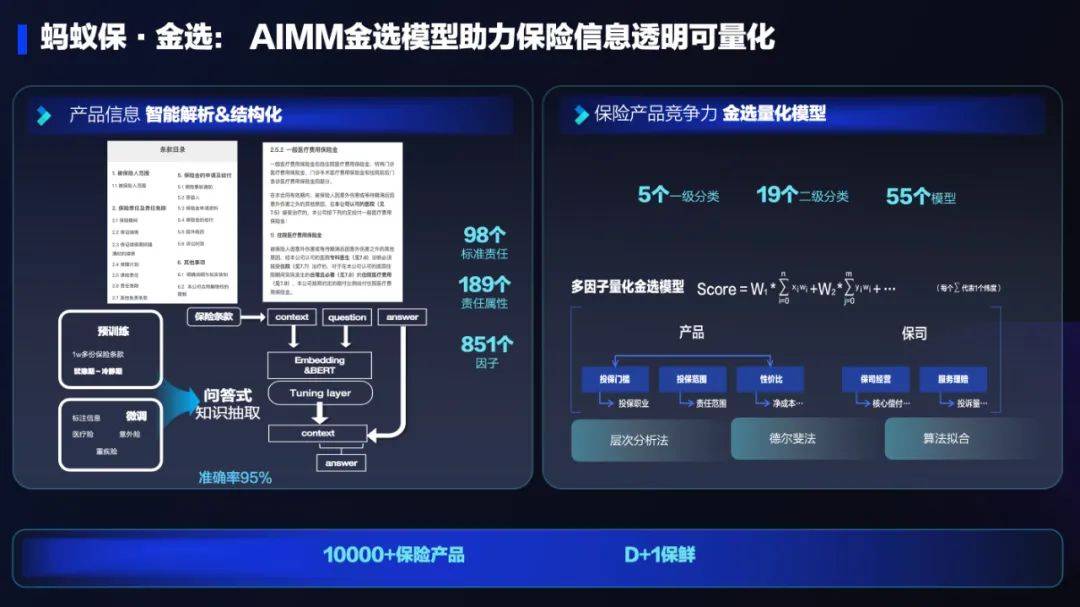

AI技术在蚂蚁集团保险业务中的应用:革新保险服务,带来全新体验Sep 20, 2023 pm 10:45 PM

AI技术在蚂蚁集团保险业务中的应用:革新保险服务,带来全新体验Sep 20, 2023 pm 10:45 PM保险行业对于社会民生和国民经济的重要性不言而喻。作为风险管理工具,保险为人民群众提供保障和福利,推动经济的稳定和可持续发展。在新的时代背景下,保险行业面临着新的机遇和挑战,需要不断创新和转型,以适应社会需求的变化和经济结构的调整近年来,中国的保险科技蓬勃发展。通过创新的商业模式和先进的技术手段,积极推动保险行业实现数字化和智能化转型。保险科技的目标是提升保险服务的便利性、个性化和智能化水平,以前所未有的速度改变传统保险业的面貌。这一发展趋势为保险行业注入了新的活力,使保险产品更贴近人民群众的实际

致敬TempleOS,有开发者创建了启动Llama 2的操作系统,网友:8G内存老电脑就能跑Oct 07, 2023 pm 10:09 PM

致敬TempleOS,有开发者创建了启动Llama 2的操作系统,网友:8G内存老电脑就能跑Oct 07, 2023 pm 10:09 PM不得不说,Llama2的「二创」项目越来越硬核、有趣了。自Meta发布开源大模型Llama2以来,围绕着该模型的「二创」项目便多了起来。此前7月,特斯拉前AI总监、重回OpenAI的AndrejKarpathy利用周末时间,做了一个关于Llama2的有趣项目llama2.c,让用户在PyTorch中训练一个babyLlama2模型,然后使用近500行纯C、无任何依赖性的文件进行推理。今天,在Karpathyllama2.c项目的基础上,又有开发者创建了一个启动Llama2的演示操作系统,以及一个

腾讯与中国宋庆龄基金会发布“AI编程第一课”,教育部等四部门联合推荐Sep 16, 2023 am 09:29 AM

腾讯与中国宋庆龄基金会发布“AI编程第一课”,教育部等四部门联合推荐Sep 16, 2023 am 09:29 AM腾讯与中国宋庆龄基金会合作,于9月1日发布了名为“AI编程第一课”的公益项目。该项目旨在为全国零基础的青少年提供AI和编程启蒙平台。只需在微信中搜索“腾讯AI编程第一课”,即可通过官方小程序免费体验该项目由北京师范大学任学术指导单位,邀请全球顶尖高校专家联合参研。“AI编程第一课”首批上线内容结合中国航天、未来交通两项国家重大科技议题,原创趣味探索故事,通过剧本式、“玩中学”的方式,让青少年在1小时的学习实践中认识A

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Dreamweaver CS6

Visual web development tools

WebStorm Mac version

Useful JavaScript development tools