NASA and IBM collaborate to develop INDUS, a suite of large language models customized for five major scientific fields

Editor | KX

Large language models (LLMs) trained on large amounts of data perform well on natural language understanding and generation tasks. Most popular LLMs are trained on general corpora such as Wikipedia, but the shift in vocabulary distribution between general and specialized text leads to poor performance in specific domains.

Motivated by this, NASA collaborated with IBM to develop INDUS, a comprehensive suite of LLMs tailored for Earth science, biological and physical sciences, heliophysics, planetary science, and astrophysics, and trained on curated scientific corpora drawn from different data sources.

INDUS contains two types of models: encoders and sentence transformers. An encoder converts natural language text into a numerical encoding that an LLM can process. The INDUS encoders are trained on a corpus of 60 billion tokens covering astrophysics, planetary science, Earth science, heliophysics, and biological and physical sciences.

Related research titled "INDUS: Effective and Efficient Language Models for Scientific Applications" was published on the arXiv preprint platform.

LLMs trained on general-domain corpora perform well on natural language processing (NLP) tasks. However, previous studies have shown that LLMs trained on domain-specific corpora perform better on specialized tasks.

For example, researchers have developed LLMs for several specific fields, such as SCIBERT, BIOBERT, MATBERT, BATTERYBERT and SCHOLARBERT, with the aim of improving the accuracy of NLP tasks in those fields.

INDUS: A Comprehensive Set of LLMs

In this study, the researchers focused on a set of interdisciplinary areas: physics, Earth science, astrophysics, heliophysics, planetary science and biology.

INDUS is a set of encoder-based LLMs focused on these areas of interest, trained on carefully curated corpora from diverse sources. More than half of the 50,000 tokens in the INDUS vocabulary are unique to the scientific fields used for training. The sentence transformer models are obtained by fine-tuning the INDUS encoders on approximately 268 million text pairs, including title/abstract and question/answer pairs.
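To make the idea of fine-tuning on text pairs concrete, the following is a minimal sketch of a contrastive objective with in-batch negatives. It is an illustration only: the function names, the toy 2-d vectors and the temperature value are assumptions, and the source does not specify the exact loss used for INDUS.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def in_batch_contrastive_loss(anchors, positives, temperature=0.05):
    """Mean contrastive (InfoNCE-style) loss over a batch of text pairs.

    anchors[i] should match positives[i]; every other positives[j] in the
    batch acts as a negative. The temperature value is an assumed default.
    """
    anchors = [normalize(a) for a in anchors]
    positives = [normalize(p) for p in positives]
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [dot(anchor, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates in the batch.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - logits[i]
    return total / len(anchors)

# Toy vectors standing in for encoded titles and abstracts (made up).
titles = [[1.0, 0.1], [0.1, 1.0]]
abstracts = [[0.9, 0.2], [0.2, 0.9]]

aligned = in_batch_contrastive_loss(titles, abstracts)
shuffled = in_batch_contrastive_loss(titles, abstracts[::-1])
print(aligned < shuffled)
```

The loss is small when each title is closest to its own abstract and grows when the pairs are mismatched, which is the signal that pulls matching texts together in embedding space.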

Specifically:

1. Built a custom tokenizer, INDUSBPE, from the curated scientific corpus using the byte pair encoding algorithm.

2. Pre-trained multiple encoder-only LLMs using the curated scientific corpora and the INDUSBPE tokenizer. Sentence embedding models were then created by fine-tuning the encoder-only models with a contrastive learning objective to learn “universal” sentence embeddings. Smaller, more efficient versions of these models were trained using knowledge distillation.

3. Created three new scientific benchmark datasets, CLIMATE-CHANGE NER (an entity recognition task), NASA-QA (an extractive question answering task) and NASA-IR (a retrieval task), to further accelerate research in this multidisciplinary field.

4. Demonstrated experimentally that the models perform strongly on these benchmark tasks as well as on existing domain-specific benchmarks, surpassing general-purpose models such as RoBERTa and scientific-domain encoders such as SCIBERT.
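The byte pair encoding step in item 1 can be sketched on a toy corpus. This is a simplified, character-level illustration rather than the INDUSBPE implementation: the function names and the three-word corpus are assumptions, and a production tokenizer would operate on bytes and a far larger corpus.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe(words, num_merges):
    """Learn an ordered list of merges from a list of words."""
    vocab = Counter(tuple(word) for word in words)  # start from characters
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Most frequent pair wins; ties are broken deterministically.
        best = max(pair_counts, key=lambda p: (pair_counts[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges

def tokenize(word, merges):
    """Apply the learned merges, in order, to a new word."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)

# Hypothetical toy corpus in which "physics" recurs across domain terms.
corpus = ["heliophysics"] * 5 + ["astrophysics"] * 5 + ["physics"] * 5
merges = learn_bpe(corpus, num_merges=6)
print(tokenize("astrophysics", merges))  # ['a', 's', 't', 'r', 'o', 'physics']
```

Because "physics" is frequent in this corpus, it becomes a single token after six merges. The same mechanism is why a vocabulary learned from scientific text can keep domain terms whole where a general-purpose vocabulary might split them into fragments.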

Better performance than non-domain-specific LLMs

By equipping INDUS with a domain-specific vocabulary, the research team obtained models that outperform open, non-domain-specific LLMs on biomedical task benchmarks, scientific question answering benchmarks, and Earth science entity recognition tests.

The researchers compared the INDUS models with similarly sized open source models: RoBERTaBASE, SCIBERT, MINILM and TINYBERT.

On the natural language understanding tasks, among the base models, INDUSBASE significantly outperforms the general-purpose RoBERTa model on the micro and macro averages, while remaining competitive with SCIBERT, the corresponding biomedical-domain-specific model.

Table: BLURB evaluation results. (Source: Paper)
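The difference between the micro and macro averages reported for this benchmark can be shown with a small sketch. It assumes the common leaderboard convention that the micro average is the plain mean over all datasets and the macro average is the mean of per-task means; the task names and scores below are invented for illustration and are not results from the paper.

```python
from statistics import mean

def micro_macro(scores_by_task):
    """Return (micro, macro) averages for {task: [dataset scores]}."""
    all_scores = [s for scores in scores_by_task.values() for s in scores]
    micro = mean(all_scores)  # every dataset counts equally
    macro = mean(mean(scores) for scores in scores_by_task.values())  # every task counts equally
    return micro, macro

# Hypothetical scores: three NER datasets and a single QA dataset.
example = {"NER": [0.80, 0.90, 0.70], "QA": [0.60]}
micro, macro = micro_macro(example)
print(round(micro, 2), round(macro, 2))  # 0.75 0.7
```

A task with many datasets dominates the micro average, while the macro average keeps a task with few datasets from being drowned out, so reporting both gives a fuller picture.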

The INDUS models significantly outperform the corresponding baseline models on the climate change NER task, demonstrating the effectiveness of training on large domain-specific data.

Table: Climate change NER benchmark results. (Source: Paper)

For NASA-QA (the extractive question answering task), the training set used for fine-tuning was augmented with relevant SQuAD examples. All models were fine-tuned for 15 epochs, and INDUSBASE was observed to outperform all similarly sized models, while INDUSSMALL performed relatively strongly.

Table: NASA-QA benchmark results. (Source: Paper)
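Extractive question answering is commonly scored with a token-overlap F1 between the predicted answer span and the reference answer. The sketch below shows that SQuAD-style metric in simplified form; it assumes plain lowercase whitespace tokenization, and the example strings are made up. The source does not state the exact scoring script used for NASA-QA.

```python
from collections import Counter

def token_f1(prediction, reference):
    """Token-overlap F1 between a predicted and a reference answer span."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection counts each shared token at most as often
    # as it appears in both spans.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

# Hypothetical prediction with one extra token compared to the reference.
score = token_f1("the solar wind", "solar wind")
print(round(score, 2))  # 0.8
```

Unlike exact match, this metric gives partial credit when the predicted span is slightly too long or too short.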

In retrieval tasks, the INDUS model is evaluated on the NASA-IR dataset and the BEIR benchmark, which consists of 12 retrieval tasks covering various domains.

As shown in the table below, both sentence embedding models perform significantly better than the baseline on the NASA-IR task, while still maintaining good performance on several BEIR tasks.

Table: NASA-IR and BEIR evaluation results. (Source: Paper)
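Retrieval with a sentence embedding model typically works by encoding the query and every document as vectors, then ranking documents by similarity to the query. The following sketch illustrates that ranking step and a simple recall@k check. The document identifiers and 3-d vectors are invented stand-ins for real embeddings, and the choice of cosine similarity is an assumption rather than a detail confirmed by the source.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def retrieve(query_vec, corpus_vecs, k):
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        corpus_vecs,
        key=lambda doc_id: cosine(query_vec, corpus_vecs[doc_id]),
        reverse=True,
    )
    return ranked[:k]

def recall_at_k(retrieved, relevant):
    """Fraction of the relevant documents that appear in the retrieved list."""
    return len(set(retrieved) & set(relevant)) / len(relevant)

# Hypothetical pre-computed embeddings for three documents and one query.
corpus = {
    "solar_flare": [0.9, 0.1, 0.0],
    "soil_moisture": [0.1, 0.9, 0.1],
    "exoplanet": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]

top2 = retrieve(query, corpus, k=2)
print(top2)                                # ['solar_flare', 'soil_moisture']
print(recall_at_k(top2, {"solar_flare"}))  # 1.0
```

In practice the corpus embeddings are computed once and reused across queries, so encoder speed matters most for encoding the corpus and each incoming query.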

The researchers also measured the average retrieval time per query for the 4,202 test queries of the BEIR Natural Questions set on a single A100 GPU. This time includes the time to encode the query and the corpus, and the time to retrieve relevant documents. Notably, INDUS-RETRIEVERSMALL outperforms INDUS-RETRIEVERBASE on both NASA-IR and BEIR, while being approximately 4.6 times faster.

IBM researcher Bishwaranjan Bhattacharjee commented on the overall approach: "Not only do we have a custom vocabulary, but we also have a large specialized corpus for training the encoder model and a good training strategy, which together achieve excellent performance. For the smaller, faster version, we use neural architecture search to obtain the model architecture and knowledge distillation to train it under the supervision of the larger model."

Dr. Sylvain Costes of NASA's Biological and Physical Sciences (BPS) Division discussed the benefits of integrating INDUS: “Integrating INDUS with the Open Science Data Repository (OSDR) application programming interface (API) allows us to develop and pilot chatbots that provide more intuitive search capabilities for browsing individual datasets. We are currently exploring methods to improve OSDR’s internal curatorial data system, using INDUS to increase the efficiency of the curatorial team and reduce the amount of manual work required every day.”
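The knowledge distillation mentioned in the quote above can be illustrated with the classic soft-label formulation, in which a small student model is trained to match the temperature-softened output distribution of a larger teacher. This is a generic sketch under that assumption: the source does not say which distillation objective was used for the smaller INDUS models, and the logits and temperature below are made up.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    The T^2 factor keeps gradient magnitudes comparable across
    temperatures in the classic formulation.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        t * math.log(t / s)
        for t, s in zip(p_teacher, p_student)
        if t > 0
    )
    return kl * temperature ** 2

# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]

print(distillation_loss(close_student, teacher) < distillation_loss(far_student, teacher))
```

A student whose predictions already resemble the teacher's incurs a small loss, while one that disagrees is penalized more, which is what drives the smaller model toward the larger model's behavior during training.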

Reference content: https://techxplore.com/news/2024-06-nasa-ibm-collaboration-indus-large.html

The above is the detailed content of Specifically customized for five major scientific fields, NASA and IBM cooperate to develop a large language model INDUS. For more information, please follow other related articles on the PHP Chinese website!

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

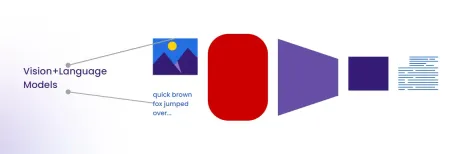

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AM

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AMMy recent conversation with Andy MacMillan, CEO of leading enterprise analytics platform Alteryx, highlighted this critical yet underappreciated role in the AI revolution. As MacMillan explains, the gap between raw business data and AI-ready informat

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Linux new version

SublimeText3 Linux latest version

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

Atom editor mac version download

The most popular open source editor

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.