Perhaps from the day it was born, LangChain was destined to be a product with a polarizing reputation.

Those who are optimistic about LangChain appreciate its rich set of tools and components and its ease of integration. Those who are pessimistic believe it is doomed to fail: in an era when technology changes this fast, building everything on top of LangChain is simply not feasible.

Some put it with a touch of exaggeration:

"In my consulting work, I spend 70% of my energy convincing people not to use langchain or llamaindex. This solves 90% of their problems."

Recently, an article criticizing LangChain has once again become the focus of heated discussion:

The author, Fabian Both, is a deep learning engineer at the AI testing tool Octomind. The Octomind team uses an AI Agent with multiple LLMs to automatically create and fix end-to-end tests in Playwright.

This is a story that lasted more than a year: it began with choosing LangChain, moved on to a stubborn struggle with it, and ended in 2024, when the team finally decided to say goodbye to LangChain.

Let's see what they went through:

"LangChain was the best choice"

We used LangChain in production for over 12 months, starting in early 2023 and removing it in 2024.

In 2023, LangChain seemed to be our best choice. It had an impressive array of components and tools, and its popularity was skyrocketing. LangChain promised to "allow developers to go from an idea to runnable code in an afternoon," but as our needs became more complex, problems began to surface.

LangChain became a source of friction rather than a source of productivity.

As LangChain’s inflexibility began to show, we started digging deeper into its internals to improve the low-level behavior of our system. However, because LangChain intentionally abstracts away so many details, we could not easily write the low-level code we needed.

As we all know, AI and LLMs are rapidly changing fields, with new concepts and ideas emerging every week. A framework like LangChain, whose design is an abstraction built around multiple emerging technologies, has a hard time standing the test of time.

Why LangChain is so abstract

Initially, LangChain did help when our simple needs matched its usage assumptions. But its high-level abstractions quickly made our code harder to understand and frustrating to maintain. It is not a good sign when a team spends as much time understanding and debugging LangChain as it does building features.

The problem with LangChain’s abstract approach can be illustrated by the trivial example of “translating an English word into Italian”.

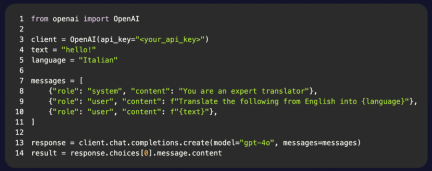

Here is a Python example using only the OpenAI package:

This is a simple and easy-to-understand code containing only one class and one function call. The rest is standard Python code.
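For readers who cannot view the screenshot, a direct call along these lines might look like the sketch below. It assumes the `openai` Python package (version 1.0 or later) and an API key in the `OPENAI_API_KEY` environment variable. The helper name `translate`, the model name `gpt-4o`, and the prompt wording are illustrative assumptions, not necessarily what the Octomind team used.

```python
def translate(client, text: str, language: str, model: str = "gpt-4o") -> str:
    """Translate `text` into `language` with a single chat-completion call.

    `client` is expected to be an `openai.OpenAI` instance (openai>=1.0).
    The default model name is an assumption; use any chat model you have access to.
    """
    messages = [
        {"role": "system", "content": "You are an expert translator."},
        {"role": "user", "content": f"Translate the following from English into {language}:"},
        {"role": "user", "content": text},
    ]
    # One class (the client) and one function call: no framework concepts involved.
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

With a real client, this would be called as `translate(OpenAI(), "hello!", "Italian")` after `from openai import OpenAI`. Passing the client in as an argument is a design choice made here so the function can be exercised with a stub instead of a live API.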

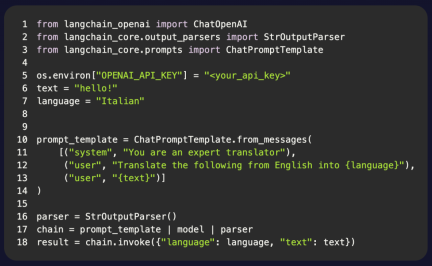

Compare this to LangChain’s version:

The code is roughly the same, but that’s where the similarity ends.

We now have three classes and four function calls. But what is worrying is that LangChain introduces three new abstract concepts:

Prompt template: Provides Prompt for LLM;

Output parser: Processes the output from LLM;

Chain: LangChain’s "LCEL syntax", which overrides Python’s | operator.
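To make the third concept less mysterious, here is a minimal sketch of how a library can overload Python’s `|` operator to compose steps into a pipeline. The `Step` class and the stand-in functions are hypothetical and written only for illustration. This is not LangChain’s actual implementation, just the general `__or__` technique that pipe-style syntax relies on.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so that steps can be chained with the | operator."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new Step that runs a first, then feeds its result to b.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Hypothetical stand-ins for a prompt template, a model call, and an output parser.
prompt = Step(lambda d: f"Translate into {d['language']}: {d['text']}")
fake_model = Step(lambda p: {"content": f"[model reply to: {p}]"})
parser = Step(lambda reply: reply["content"])

chain = prompt | fake_model | parser
result = chain.invoke({"language": "Italian", "text": "hello!"})
```

The pipeline reads compactly, but a reader now has to know what `|` means for these objects before they can follow the data flow, which is the kind of extra concept the author is objecting to.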

All LangChain does is increase the complexity of the code without any obvious benefits.

This kind of code may be fine for early prototypes. But for production use, each component must be reasonably understood so that it does not crash unexpectedly under actual use conditions. You must adhere to the given data structures and design your application around these abstractions.

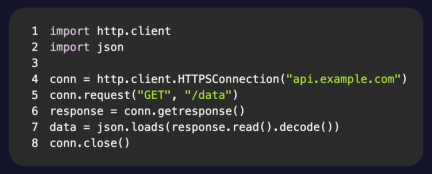

Let’s look at another abstract comparison in Python, this time getting JSON from an API.

Use the built-in http package:

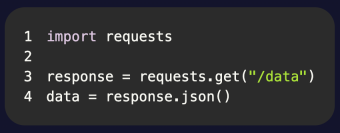

Use the requests package:

The difference is obvious. This is what good abstraction feels like.
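The comparison can be sketched roughly as follows. The function names, the host `api.example.com`, and the path `/data` are placeholders chosen for illustration. The second function assumes the third-party `requests` package is installed.

```python
import http.client
import json
from typing import Any


def get_json_http_client(host: str, path: str) -> Any:
    """Fetch JSON using only the standard library's http.client."""
    conn = http.client.HTTPSConnection(host, timeout=5)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        # The body arrives as bytes and has to be decoded and parsed by hand.
        return json.loads(response.read().decode("utf-8"))
    finally:
        conn.close()


def get_json_requests(url: str) -> Any:
    """Fetch JSON using the third-party requests package."""
    import requests  # imported lazily so the stdlib version works without it

    # Connection handling, decoding, and JSON parsing are all hidden here.
    return requests.get(url, timeout=5).json()
```

Hypothetical usage would be `get_json_http_client("api.example.com", "/data")` versus `get_json_requests("https://api.example.com/data")`. The second version hides connection management and decoding without taking away any control the caller is likely to need.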

Of course, these are trivial examples. But what I'm trying to say is that good abstractions simplify code and reduce the cognitive load required to understand it.

LangChain tries to make your life easier by hiding the details and doing more with less code. However, if this comes at the expense of simplicity and flexibility, the abstraction loses its value.

LangChain also has a habit of layering abstractions on top of other abstractions, so you often have to think in terms of nested abstractions to use the API correctly. This inevitably means wading through huge stack traces and debugging internal framework code you didn’t write, rather than implementing new features.

Impact of LangChain on Development Teams

Our application makes heavy use of AI Agents to perform different types of tasks, such as discovering test cases, generating Playwright tests, and automatically fixing them.

When we wanted to move from an architecture with a single sequential Agent to something more complex, LangChain became a limiting factor. Examples include spawning Sub-Agents and having them interact with the original Agent, or having multiple specialized Agents interact with each other.

In another case, we needed to dynamically change which tools the Agent could access, based on business logic and the output of the LLM. However, LangChain does not provide a way to observe the Agent’s state from the outside, so we had to reduce the scope of our implementation to fit the limited functionality available to LangChain Agents.

Once we removed it, we no longer needed to translate our requirements into solutions suitable for LangChain. We could just write code.
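As an illustration of what "just writing code" can mean for the tool-availability problem described above, here is a minimal sketch of an agent loop in plain Python. Everything in it is hypothetical: the `Tool` and `AgentState` types, the `allowed_tools` rule, and the `fake_llm` stand-in are invented for this example and are not Octomind’s code. The point is only that the agent state is an ordinary object the caller can inspect, and that the tool list is recomputed on every step.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Tool:
    name: str
    run: Callable[[str], str]


@dataclass
class AgentState:
    # Plain data, so calling code can inspect it at any point.
    history: List[str] = field(default_factory=list)
    test_generated: bool = False


def allowed_tools(state: AgentState, tools: Dict[str, Tool]) -> List[Tool]:
    """Hypothetical business rule: the fix tool unlocks only after a test exists."""
    names = ["fix_test"] if state.test_generated else ["generate_test"]
    return [tools[n] for n in names]


def run_agent(llm: Callable[[AgentState, List[str]], str],
              tools: Dict[str, Tool],
              state: AgentState,
              max_steps: int = 5) -> AgentState:
    for _ in range(max_steps):
        available = allowed_tools(state, tools)
        choice = llm(state, [t.name for t in available])  # tool name or "done"
        if choice == "done":
            break
        tool = next((t for t in available if t.name == choice), None)
        if tool is None:
            state.history.append(f"rejected:{choice}")  # not available right now
            continue
        state.history.append(tool.run(choice))
        if choice == "generate_test":
            state.test_generated = True
    return state


def fake_llm(state: AgentState, tool_names: List[str]) -> str:
    """Stand-in for a model call: stop after a fix, else take the first offered tool."""
    if state.history and state.history[-1] == "ran fix_test":
        return "done"
    return tool_names[0]


tools = {
    "generate_test": Tool("generate_test", lambda name: f"ran {name}"),
    "fix_test": Tool("fix_test", lambda name: f"ran {name}"),
}
final_state = run_agent(fake_llm, tools, AgentState())
```

In a real system `fake_llm` would be replaced by a model call that receives the currently allowed tool names, and `allowed_tools` would encode whatever business logic applies.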

So, if not using LangChain, what framework should you use? Maybe you don't need a framework at all.

Do we really need a framework for building AI applications?

LangChain provided us with LLM functionality in the early days, allowing us to focus on building applications. But in hindsight, we would have been better off in the long term without the framework.

LangChain’s long list of components gives the impression that building an LLM-powered application is very complex. But most applications need only a handful of core components:

Client for LLM communication

Functions/tools for function calling

Vector database for RAG

Observability platform for tracing, evaluation, and more.
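To give a sense of scale for one of these pieces, the sketch below shows the retrieval step of RAG with a toy in-memory store and cosine similarity. The `InMemoryVectorStore` class and the hand-written three-dimensional vectors are assumptions made for illustration. A real application would use an embedding model and a proper vector database.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Toy stand-in for a vector database: a list scanned linearly on each query."""

    def __init__(self) -> None:
        self._items: List[Tuple[List[float], str]] = []

    def add(self, vector: Sequence[float], text: str) -> None:
        self._items.append((list(vector), text))

    def search(self, query: Sequence[float], k: int = 1) -> List[str]:
        # Rank every stored item by similarity to the query, highest first.
        ranked = sorted(
            self._items,
            key=lambda item: cosine_similarity(query, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


store = InMemoryVectorStore()
store.add([1.0, 0.0, 0.0], "How to log in")
store.add([0.0, 1.0, 0.0], "How to reset a password")
store.add([0.9, 0.1, 0.0], "Login troubleshooting")
top_two = store.search([1.0, 0.0, 0.0], k=2)
```

The retrieved texts would then be pasted into the prompt sent through the LLM client, which is all that the "R" in RAG requires at its simplest.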

The Agent space is evolving rapidly, bringing exciting possibilities and interesting use cases, but our advice is to keep it simple for now, until the usage patterns of Agents have solidified. Much of the development work in AI is driven by experimentation and prototyping.

The above is Fabian Both’s personal experience over the past year, but LangChain is not entirely without merit.

Another developer, Tim Valishev, said that he will stick with LangChain for a while longer:

I really like LangSmith:

Visual logging out of the box

Prompt playground: you can instantly fix prompts from the logs and see how they perform on the same inputs

Easily build test datasets directly from the logs, with the option to run simple test sets on prompts with one click (or run end-to-end tests in code)

Test score history

Prompt version control

It also provides good support for streaming the entire chain, which takes some time to implement manually.

What’s more, relying solely on raw APIs is not enough. Each large-model vendor’s API is different, and "seamless switching" is not possible.

What do you think?

Original link: https://www.octomind.dev/blog/why-we-no-longer-use-langchain-for-building-our-ai-agents
