Large models also come in different sizes, and their size is measured by the number of parameters. GPT-3 has 175 billion parameters, and Grok-1 is even larger, with 314 billion parameters. There are also slimmer ones such as Llama, whose parameter counts range from 7 billion to 70 billion.

The 70B mentioned here does not refer to the amount of training data, but to the densely packed parameters in the model. These parameters are like small "brain cells": the more there are, the more capacity the model has to capture the intricate relationships in the data, and the better it may perform at tasks. However, a very large number of parameters can also cause problems. These "brain cells" may interfere with each other when processing a task, making it harder for the model to capture the complex relationships in the data. We therefore need a way to manage the relationship between these parameters during training, and a common method is regularization.

The parameters of a large model are also like the "architects" inside the model. Through complex algorithms and training processes, this huge language world is built bit by bit. Each parameter has its role, and they work together so that the model understands our language more accurately and gives more appropriate answers.

So, how are the parameters in the large model composed?

1. Parameters in the large model

The parameters of the large model are its "internal parts". Each of these parts has its own purpose, usually including but not limited to the following categories:

- Weights: Weights are like "wires" in a neural network, connecting each neuron. They are responsible for adjusting the "volume" of signal transmission, allowing important information to be transmitted farther and less important information to be quieter. For example, in a fully connected layer, the weight matrix W is a "map" that tells us which input features are most closely related to the output features.

- Biases: Biases are like the "little assistants" of neurons, responsible for setting a baseline for the response of neurons. With a bias, a neuron knows at what level it should become active.

- Parameters of the attention mechanism (Attention Parameters): In the Transformer-based model, these parameters are like a "compass", telling the model which information is most worthy of attention. They include query matrices, key matrices, value matrices, etc., which are like finding the most critical "clues" in a large amount of information.

- Embedding Matrices: When processing text data, the embedding matrix is the "dictionary" of the model. Each row (or column, depending on the layout) corresponds to a token, and that token is represented by a vector of numbers. In this way, the model can work with the meaning of the text.

- Hidden State Initialization Parameters (Initial Hidden State Parameters): These parameters are used to set the initial hidden state of the model, just like setting a tone for the model so that it knows where to start "thinking".

- ......

These parameters generally use 4 representation and storage formats:

- Float: 32-bit floating point number, that is, 4 bytes

- Half/BF16: 16-bit floating point number, that is, 2 bytes

- Int8: 8-bit integer, that is, 1 byte

- Int4: 4-bit integer, that is, 0.5 bytes

Generally speaking, the number of parameters is the main factor affecting the performance of large models. For example, a 13B-int8 model is generally better than a 7B-BF16 model of the same architecture.
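The byte sizes above translate directly into a weights-only footprint: parameter count times bytes per parameter. The following is a minimal sketch of that arithmetic. The function name `weight_memory_gb` and the use of decimal gigabytes (1 GB = 10^9 bytes) are assumptions made for this example, and the result ignores activations, caches, and framework overhead.

```python
# Bytes needed to store one parameter in each common storage format.
BYTES_PER_PARAM = {"float32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_in_billions: float, fmt: str) -> float:
    """Weights-only footprint in decimal GB (1 GB = 1e9 bytes).

    params_in_billions * 1e9 parameters * bytes each / 1e9 bytes per GB
    simplifies to params_in_billions * bytes per parameter.
    """
    return params_in_billions * BYTES_PER_PARAM[fmt]


if __name__ == "__main__":
    for size, fmt in [(7, "bf16"), (13, "int8"), (70, "int4")]:
        print(f"{size}B in {fmt}: about {weight_memory_gb(size, fmt):.1f} GB")
```

Under these assumptions a 13B-int8 model (about 13 GB) and a 7B-BF16 model (about 14 GB) occupy a similar amount of memory, which is why the comparison between them is a fair one.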

2. Memory requirements for large model parameters

For engineers, the practical question is how much memory will be used during large model training or inference. Even though the V100 (with 32GB of GPU memory) and the A100 (with 40GB of GPU memory) are very powerful, large models still cannot be trained on a single GPU with a plain TensorFlow or PyTorch setup.

2.1 Memory requirements during the training phase

During model training, memory is mainly needed to store the model state and the activations. The model state is made up of the tensors holding the optimizer state, the gradients, and the parameters. The activations include any tensors created in the forward pass that are required for gradient calculations in the backward pass. To optimize memory usage, you can consider the following aspects:

- Reduce the number of model parameters: You can reduce the number of parameters, and therefore memory usage, by shrinking the model or by using techniques such as sparse matrices.

- Storage of optimizer state: You can choose to store only the necessary optimizer state instead of saving all of it. Optimizer state can be selectively updated and stored as needed.

- Modify the data type of the tensors: Use a lower-precision format where the task allows it.

At any time during training, for each model parameter, there always needs to be enough GPU memory to store:

- x bytes for a copy of the model parameters

- y bytes for a copy of the gradients

- The optimizer state, generally 12 bytes: for an Adam-style optimizer this is mainly an FP32 copy of the parameters plus the momentum and variance estimates. All optimizer state is kept in FP32 to keep training stable and avoid numerical anomalies.

This means that the following memory is required to store the model state during training, before activations are counted: (x+y+12) * model_size
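To make the (x+y+12) * model_size rule concrete, here is a small sketch. The function name `training_state_gb` and the default of 2 bytes each for parameters and gradients (a 16-bit mixed-precision setup) are assumptions for illustration, and the result covers the model state only.

```python
def training_state_gb(
    params_in_billions: float,
    param_bytes: float = 2.0,       # x: bytes per parameter copy (assumed 16-bit)
    grad_bytes: float = 2.0,        # y: bytes per gradient copy (assumed 16-bit)
    optimizer_bytes: float = 12.0,  # FP32 optimizer state per parameter
) -> float:
    """Model-state memory in decimal GB: (x + y + 12) * model_size."""
    bytes_per_param = param_bytes + grad_bytes + optimizer_bytes
    return params_in_billions * bytes_per_param


if __name__ == "__main__":
    print(f"7B, 16-bit params and grads: {training_state_gb(7):.0f} GB")
    print(f"7B, 32-bit params and grads: {training_state_gb(7, 4, 4):.0f} GB")
```

With these defaults a 7B model needs on the order of 112 GB for its state alone, which already exceeds a single 32GB or 40GB GPU.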

2.2 Memory requirements in the inference phase

The inference phase uses a pre-trained LLM to complete tasks such as text generation or translation. Here, memory requirements are typically lower, with the main influencing factors being:

- Limited context: Inference typically handles shorter input sequences, so less memory is needed to store the activations associated with smaller chunks of text.

- No backpropagation: During inference, the LLM does not need to preserve the intermediate values used by backpropagation, the technique that adjusts parameters during training. This eliminates a lot of memory overhead.

The inference phase requires no more than a quarter of the memory required by the training phase for the same parameter count and type. For example, a 7B model generally requires 28GB of memory at 32-bit floating point precision, 14GB at BF16 precision, and 7GB at int8 precision. This rough estimation method can be applied to other model sizes accordingly.

Also, when tuning an LLM for a specific task, fine-tuning requires a higher memory footprint. Fine-tuning typically involves longer training sequences to capture the nuances of the target task, which leads to larger activations as the LLM processes more text data. The backpropagation process also requires the storage of intermediate values for gradient calculations, which are used to update the model's weights during training. This adds a significant memory load compared to inference.

2.3 Memory estimation of large models based on Transformer

Specifically, for a Transformer-based large model, we can try to calculate the memory required for training. Let:

- l: Number of layers of transformer

- a: Number of attention heads

- b: Batch size

- s: Sequence length

- h: Dimension size of the hidden layer

- p: Precision, in bytes per value

Here, bshp = b * s * h * p represents the size of the input data. In the linear layer part of the transformer, approximately 9bshp+bsh of space is needed for subsequent activations. In the attention part, self-attention can be expressed as: softmax((XQ)(XK)^T)XV

Then, XQ, XK, and XV each require bshp-sized space. In standard self-attention, the result of multiplying (XQ) * (XK)^T is just a b * s * s matrix of logits. In practice, however, because multi-head attention is used, a separate s * s matrix has to be stored for each head. This means that abssp bytes of space are required, and storing the output of the softmax also requires abssp bytes. After the softmax, an additional abss bytes are generally needed to store the mask, so the attention scores require 2abssp+abss of storage space. Counting XQ, XK, and XV together with the input to the attention block, the input to its output projection, and the dropout mask on its output, the remaining attention tensors take about 5bshp+bsh, following the accounting in "Reducing Activation Recomputation in Large Transformer Models" listed in the reference materials.

In addition, there are two Norm layers in each transformer layer, each of which requires bshp of storage space, for a total of 2bshp.

So, the memory required for training a Transformer-based large model is approximately: l(9bshp+bsh+5bshp+bsh+2abssp+abss+2bshp) = lbshp[16+2/p+(as/h)(2+1/p)]

In other words, the memory required to train a Transformer-based large model is approximately: the number of layers x the training batch size x the sequence length x the hidden dimension x the precision x a factor greater than 16.

This can be treated as a theoretical lower bound for the memory requirements of Transformer-based large models during training.
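The closed-form estimate above is easy to evaluate numerically. The sketch below is one way to do so, using the symbols l, a, b, s, h, and p defined earlier. The function name `activation_bytes` is hypothetical, and the configuration in the example (96 layers, 96 heads, hidden size 12288, sequence length 2048, batch size 1, 2-byte precision) is an illustrative assumption roughly matching a GPT-3-scale model.

```python
def activation_bytes(l: int, a: int, b: int, s: int, h: int, p: int) -> float:
    """Activation memory estimate in bytes.

    Implements l * b*s*h*p * [16 + 2/p + (a*s/h) * (2 + 1/p)].
    """
    per_layer = b * s * h * p * (16 + 2 / p + (a * s / h) * (2 + 1 / p))
    return l * per_layer


if __name__ == "__main__":
    # Illustrative configuration (an assumption, not taken from the article).
    total = activation_bytes(l=96, a=96, b=1, s=2048, h=12288, p=2)
    print(f"about {total / 2**30:.1f} GiB of activations at batch size 1")
```

Note how the (as/h) term grows with sequence length: doubling s more than doubles the estimate, because the attention score matrices scale with s squared.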

3. GPU requirements for large model parameters

With the memory requirements for large model parameters in hand, we can further estimate the number of GPUs required for training and inference. Because an exact estimate of the number of GPUs depends on several more parameters, Dr. Walid Soula (https://medium.com/u/e41a20d646a8) proposed a simple formula for rough estimation, which is also a useful reference in engineering.

Number of GPUs ≈ (Model's parameters in billions * 18 * 1.25) / GPU Size in GB

Among them,

- Model's parameters in billions is the number of model parameters in B;

- 18 is the memory usage factor of different components during training;

- 1.25 represents the memory quantity factor required for the activation process. Activation is a dynamic data structure that changes as the model processes input data.

- GPU Size in GB is the total amount of available GPU memory

As a practical example, assume that an NVIDIA RTX 4090 GPU with 24GB of VRAM is used. The number of GPUs required to train the 'Llama3 7B' model is then approximately:

The total number of GPUs ≈ (7 * 18 * 1.25)/24 ≈ 6.6, which rounds up to 7

For inference, it can be simplified to 1/8~1/9 of the training stage. Of course, these are only rough estimates in a general sense.
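The rough estimate above can be wrapped in a few lines. This is a minimal sketch of the same arithmetic. The function name `gpus_for_training` is hypothetical, and rounding up with `math.ceil` is an assumption that matches the worked example, since a fraction of a GPU cannot be allocated.

```python
import math


def gpus_for_training(
    params_in_billions: float,
    gpu_memory_gb: float,
    state_factor: float = 18.0,       # memory usage factor of training components
    activation_factor: float = 1.25,  # extra factor for activations
) -> int:
    """Rough GPU count: ceil(params * 18 * 1.25 / GPU memory in GB)."""
    needed_gb = params_in_billions * state_factor * activation_factor
    return math.ceil(needed_gb / gpu_memory_gb)


if __name__ == "__main__":
    print(gpus_for_training(7, 24))   # 7B model on 24GB cards
    print(gpus_for_training(70, 80))  # 70B model on 80GB cards
```

Like the formula itself, this is only a planning aid. Real requirements depend on the parallelism strategy, sequence length, and optimizer.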

4. From large model parameters to distributed training

Understanding the composition of large model parameters and their requirements for memory and GPUs helps to understand more deeply the role of distributed training and the challenges it faces in engineering practice.

The implementation process of distributed training strategies can be significantly simplified by adopting frameworks designed for distributed training, such as TensorFlow or PyTorch, which provide rich tools and APIs. By using techniques such as gradient accumulation before updating the model, or using techniques such as gradient compression to reduce the amount of data exchange between nodes, communication costs can be effectively reduced. It is crucial to determine the optimal batch size for distributed training (the parameter b mentioned above); a b value that is too small may increase communication overhead, while a value that is too large may result in insufficient memory.
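Gradient accumulation, mentioned above, means summing the gradients of several small micro-batches and applying a single update, so that synchronization happens once per effective batch. The sketch below illustrates the idea in plain Python on a one-parameter model y = w * x with a mean squared error loss. The function names and the toy data are assumptions for illustration, and no particular framework's API is implied.

```python
def grad_mse(w: float, xs: list, ys: list) -> float:
    """Gradient of the mean squared error for the model y = w * x."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w: float, xs: list, ys: list, micro_batch_size: int) -> float:
    """Accumulate micro-batch gradients before a single parameter update."""
    n = len(xs)
    total = 0.0
    for start in range(0, n, micro_batch_size):
        mx = xs[start:start + micro_batch_size]
        my = ys[start:start + micro_batch_size]
        # Weight each micro-batch by its share of the full batch.
        total += grad_mse(w, mx, my) * len(mx) / n
    return total


if __name__ == "__main__":
    xs, ys, w = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0], 0.5
    print(grad_mse(w, xs, ys))                  # gradient on the full batch
    print(accumulated_grad(w, xs, ys, 2))       # same value from two micro-batches
```

The accumulated gradient matches the full-batch gradient, so a large effective batch size b can be reached without holding the whole batch's activations in memory at once.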

The importance of LLMOps has become increasingly prominent. Regularly monitoring the performance indicators configured for distributed training and adjusting hyperparameters, partitioning strategies, and communication settings to optimize performance are key to improving training efficiency. Implementing a checkpointing mechanism for the model and efficient recovery in the event of failure ensures that the training process continues without having to start from scratch.

In other words, the training/inference of large models is essentially a complex distributed system architecture engineering challenge, such as:

- Communication overhead: The time required for communication when exchanging gradients and updating data may limit the overall speedup.

- Synchronization complexity: When multiple machines are trained in parallel, the complexity of synchronization needs to be carefully designed.

- Fault tolerance and resource management: The impact of single point failure on model training and inference, as well as resource allocation and scheduling strategies for CPU and GPU.

- ......

In fact, however, most engineers may not be directly involved in training work, and instead focus on how to make use of large model parameters when building applications.


5. Parameters used in large model applications

The main focus here is on how to configure the parameters that control text output when using a large model. There are three of them: Temperature, Top-K, and Top-P.

The Temperature parameter is often misunderstood as a switch that only controls the creativity of the model, but in fact its deeper role is to adjust the "softness" of the probability distribution. When the Temperature value is set higher, the probability distribution becomes softer and more uniform, which encourages the model to generate more diverse and creative output. Conversely, lower Temperature values will make the distribution sharper and have more obvious peaks, thus tending to produce output similar to the training data.

The Top-K parameter restricts the model to sampling from the K most likely tokens at each step. In this way, incoherent or meaningless content in the output can be reduced. This strategy strikes a balance between keeping the output as consistent as possible and allowing a certain degree of creative sampling.

Top-P is another decoding method. Given a P value (0≤P≤1), it selects the smallest set of words whose cumulative probability reaches P. This allows the number of selected words to grow or shrink dynamically based on the probability distribution of the next word. In particular, when the P value is 1, Top-P selects all words, which is equivalent to sampling from the entire distribution and produces more diverse output. When the P value is 0, Top-P selects only the word with the highest probability, which is similar to greedy decoding and makes the output more focused and consistent.

These three parameters work together to affect the behavior of the model. For example, when setting Temperature=0.8, Top-K=36, and Top-P=0.7, the model first computes the complete set of unnormalized log probabilities (logits) over the entire vocabulary based on the context. Temperature=0.8 means that each logit is divided by 0.8, which effectively increases the model's confidence in its predictions before normalization. Top-K=36 means keeping the 36 tokens with the highest log probabilities. Then, Top-P=0.7 filters within this Top-K=36 set, keeping tokens in order from high to low probability until the cumulative probability reaches 0.7. Finally, this filtered set is renormalized and used in the subsequent sampling step.
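The pipeline just described can be sketched in a few lines of plain Python. This is an illustrative sketch rather than any library's implementation. The function names are hypothetical, and computing the Top-P cumulative probability over the Top-K set (rather than over the full vocabulary) is an assumption that follows the description above, since real decoders differ on this point.

```python
import math
import random


def filter_and_renormalize(logits, temperature=0.8, top_k=36, top_p=0.7):
    """Return {token_id: probability} after temperature, Top-K and Top-P."""
    # 1. Temperature: divide every logit by the temperature.
    scaled = [x / temperature for x in logits]
    # 2. Top-K: keep the ids of the k largest scaled logits, highest first.
    order = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:top_k]
    # Softmax over the kept tokens (subtract the max for numerical stability).
    peak = scaled[order[0]]
    exps = [math.exp(scaled[i] - peak) for i in order]
    z = sum(exps)
    probs = [e / z for e in exps]
    # 3. Top-P: keep tokens until the cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for token_id, prob in zip(order, probs):
        kept.append((token_id, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    # 4. Renormalize the surviving tokens so they sum to 1.
    total = sum(prob for _, prob in kept)
    return {token_id: prob / total for token_id, prob in kept}


def sample(distribution, rng):
    """Draw one token id from the filtered distribution."""
    ids = list(distribution)
    weights = [distribution[i] for i in ids]
    return rng.choices(ids, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, -1.0]  # a 4-token toy vocabulary
    dist = filter_and_renormalize(toy_logits, temperature=0.8, top_k=3, top_p=0.7)
    print(dist, sample(dist, random.Random(0)))
```

Lowering `top_p` or `top_k` shrinks the candidate set, while raising `temperature` flattens the probabilities within it, so the three settings are best tuned together rather than in isolation.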

6. Summary

In engineering practice, it is meaningful to understand the parameters of large models. Parameters play a decisive role in large models. They define the behavior, performance, implementation costs, and resource requirements of large models. Understanding the parameters of a large model in engineering means grasping the relationship between the complexity, performance, and capabilities of the model. Properly configuring and optimizing these parameters from the perspective of storage and computing can better select and optimize models in practical applications to adapt to different task requirements and resource constraints.

【Reference Materials】

- ZeRO: Memory Optimizations Toward Training Trillion Parameter Models, https://arxiv.org/pdf/1910.02054v3.pdf

- Reducing Activation Recomputation in Large Transformer Models, https://arxiv.org/pdf/2205.05198.pdf

- https://timdettmers.com/2023/01/30/which-gpu-for-deep-learning/

- https://blog.eleuther.ai/transformer-math/

The above is the detailed content of 7B? 13B? 175B? Interpret parameters of large models. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

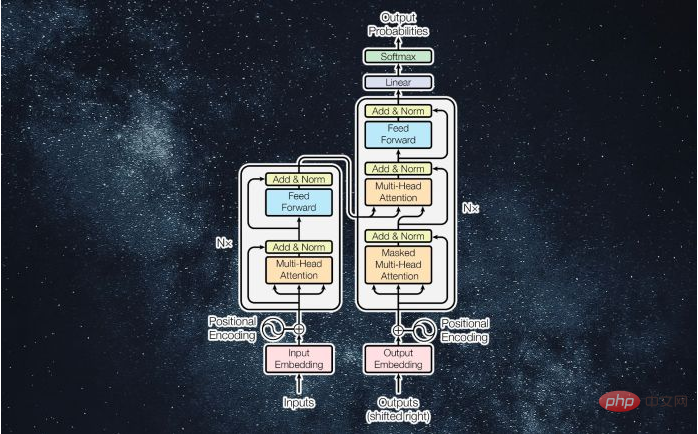

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Zend Studio 13.0.1

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool