Ilya's first move after leaving his job: he liked this paper, and netizens rushed to read it

Since Ilya Sutskever officially announced his resignation from OpenAI, his next move has become the focus of everyone's attention.

Some people even pay close attention to his every move.

Sure enough, Ilya just liked ❤️ a new paper:

Its central claim is that neural networks, trained with different objectives on different data and modalities, are converging toward a shared statistical model of the real world in their representation spaces.

The authors call this the Platonic Representation Hypothesis, in reference to Plato's Allegory of the Cave and his ideas about the nature of an ideal reality.

The paper studies representational convergence in AI systems, that is, the way different neural network models represent data points is becoming more and more similar. This similarity spans different model architectures, training objectives, and even data modalities.

What drives this convergence? Will the trend continue? Where does it end? After a series of analyses and experiments, the researchers speculate that this convergence does have an endpoint and a driving principle: different models are all striving for an accurate representation of reality.

The paper's overview figure sums up the idea:

Images (X) and text (Y) are different projections of a common underlying reality (Z). The researchers speculate that representation learning algorithms will converge to a unified representation of Z, and that growth in model size and in the diversity of data and tasks are the key factors driving this convergence.

All I can say is that this is exactly the kind of question Ilya would be interested in. It is too profound for us to fully understand, so let's ask AI to help explain it and share it with everyone~

PS: This research focuses on vector embedding representations, that is, data is converted into vectors, and the similarity or distance between data points is described by a kernel function. "Representation alignment" in this article means that if two different representations induce similar structure over the same data, the two representations are considered aligned.
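To make the kernel view concrete, here is a minimal sketch. It assumes an inner-product kernel and uses random hypothetical embeddings; "model B" is simply model A's embedding under a random rotation, an illustrative setup that is not taken from the paper. The coordinates of the two embeddings differ, but the kernels match, so the two representations count as aligned in the sense described above.

```python
import numpy as np

def kernel(features: np.ndarray) -> np.ndarray:
    """Inner-product kernel: K[i, j] = <f(x_i), f(x_j)>."""
    return features @ features.T

rng = np.random.default_rng(0)

# Hypothetical embeddings of the same 5 data points from "model A".
feats_a = rng.normal(size=(5, 3))

# "Model B" (an assumption for illustration): model A's embedding under a
# random orthogonal transform. Coordinates change, pairwise geometry does not.
rotation, _ = np.linalg.qr(rng.normal(size=(3, 3)))
feats_b = feats_a @ rotation

coords_equal = np.allclose(feats_a, feats_b)
kernels_equal = np.allclose(kernel(feats_a), kernel(feats_b))
print("same coordinates:", coords_equal)
print("same kernel:", kernels_equal)
```

Comparing kernels rather than raw coordinates is what lets models with different architectures, or even different embedding dimensions, be compared at all.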

1. Convergence of different models. Models with different architectures and objectives tend to agree in their underlying representations.

A growing number of systems are built on pretrained foundation models, and some models are becoming the standard backbone for many tasks. Their broad applicability suggests a degree of generality in how they represent data.

While this trend suggests that AI systems are converging toward a smaller set of foundation models, it does not prove that different foundation models form the same representation.

However, recent research on model stitching has found that the intermediate-layer representations of image classification models can be well aligned even when the models are trained on different datasets.

For example, some studies have found that the early layers of convolutional networks trained on the ImageNet and Places365 datasets can be interchanged, indicating that they learned similar initial visual representations. Other studies have discovered a large number of "Rosetta Neurons", that is, neurons with highly similar activation patterns in different visual models...

2. The larger and more capable the model, the higher the degree of representation alignment.

The researchers measured the alignment of 78 models using the mutual nearest-neighbor method on the Places-365 dataset and evaluated their downstream performance on the Visual Task Adaptation Benchmark (VTAB).

They found that models with stronger generalization ability were significantly more aligned with one another.
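The mutual nearest-neighbor idea can be sketched as follows. This is a simplified illustration rather than the authors' implementation: it assumes cosine similarity for finding neighbors, and the function names and random features are hypothetical. For each data point, it finds the k nearest neighbors under each model's representation and measures how much the two neighbor sets overlap.

```python
import numpy as np

def knn_indices(features: np.ndarray, k: int) -> np.ndarray:
    """Indices of each row's k nearest neighbors by cosine similarity (self excluded)."""
    normed = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = normed @ normed.T
    np.fill_diagonal(sim, -np.inf)  # a point is not its own neighbor
    return np.argsort(-sim, axis=1)[:, :k]

def mutual_knn_alignment(feats_a: np.ndarray, feats_b: np.ndarray, k: int) -> float:
    """Average fraction of k nearest neighbors shared by two representations."""
    nn_a = knn_indices(feats_a, k)
    nn_b = knn_indices(feats_b, k)
    overlap = [len(set(a) & set(b)) / k for a, b in zip(nn_a, nn_b)]
    return float(np.mean(overlap))

rng = np.random.default_rng(0)
feats = rng.normal(size=(200, 16))        # hypothetical features for 200 samples
unrelated = rng.normal(size=(200, 32))    # an unrelated representation of the same samples

score_same = mutual_knn_alignment(feats, feats, k=10)
score_unrelated = mutual_knn_alignment(feats, unrelated, k=10)
print(score_same, score_unrelated)
```

A score of 1 means the two models agree on every point's neighborhood; unrelated representations score close to chance. Note that the two feature matrices may have different dimensions, since only neighbor identities are compared.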

3. Representations converge across modalities.

The researchers used the mutual nearest-neighbor method to measure alignment on the Wikipedia-based Image Text (WIT) dataset.

The results reveal a linear relationship between language-vision alignment and language modeling scores, with the general trend being that more capable language models align better with more capable vision models.

4. The model and brain representation also show a certain degree of consistency, possibly due to facing similar data and task constraints.

In 2014, a study found that activations in the intermediate layers of a neural network are highly correlated with activation patterns in the visual areas of the brain, possibly because both face similar visual tasks and data constraints.

Since then, studies have further found that the choice of training data affects how well model representations align with the brain. Psychological research has also found that the way humans perceive visual similarity is highly consistent with neural network models.

5. The degree of alignment of model representations is positively correlated with downstream task performance.

The researchers evaluated language models on two downstream tasks, HellaSwag (commonsense reasoning) and GSM8K (mathematics), and used the DINOv2 model as the vision reference for measuring each language model's alignment. The results show that language models that are better aligned with the vision model also perform better on HellaSwag and GSM8K, and the visualizations show a clear positive correlation between alignment and downstream task performance.

I won't go into more detail on the earlier research here. Interested readers can check out the original paper.

1. Convergence via Task Generality

As models are trained to solve more tasks, they need to find representations that meet the requirements of all of those tasks:

The number of representations that can handle N tasks is smaller than the number of representations that can handle M (M < N) tasks.

A similar principle has been proposed before.

Moreover, easy tasks have many solutions, while difficult tasks have fewer. Therefore, as task difficulty increases, the model's representation tends to converge to a smaller set of better solutions.
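The counting argument can be illustrated with a toy example. Everything here is a hypothetical setup, not something from the paper: the "representations" are all 256 Boolean functions on 3-bit inputs, and each "task" requires a particular output on one input. Every added task can only shrink the set of functions that satisfy all tasks so far.

```python
from itertools import product

inputs = list(product([0, 1], repeat=3))                 # 8 possible inputs
hypotheses = list(product([0, 1], repeat=len(inputs)))   # 256 truth tables

# Hypothetical tasks: (input index, required output on that input).
tasks = [(0, 1), (3, 0), (5, 1), (6, 1)]

def solves(hypothesis, task):
    """A hypothesis solves a task if it gives the required output on that input."""
    index, required = task
    return hypothesis[index] == required

# Count the hypotheses that solve the first n tasks, for n = 0..len(tasks).
counts = []
for n in range(len(tasks) + 1):
    survivors = [h for h in hypotheses if all(solves(h, t) for t in tasks[:n])]
    counts.append(len(survivors))

print(counts)  # [256, 128, 64, 32, 16]
```

In this toy setup each task halves the surviving set; in general the only guarantee is that the count never grows as tasks are added.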

2. Convergence via Model Capacity

The researchers put forward the capacity hypothesis: if a globally optimal representation exists, then a larger model is more likely to approach it, provided there is sufficient data.

Therefore, larger models trained with the same objective will tend to converge toward this optimum regardless of their architecture. When different training objectives have similar minima, larger models are more effective at finding these minima and tend toward similar solutions across training tasks.

3. Convergence via Simplicity Bias

The researchers also proposed a third hypothesis for why convergence happens. Deep networks tend to look for simple fits to the data, and this inherent simplicity bias pushes large models toward simpler representations, leading to convergence.

That is, larger models cover a broader space of functions and can fit the same data in many different ways. However, the implicit simplicity preference of deep networks encourages larger models to find the simplest of these solutions.

Endpoint of Convergence

After a series of analyses and experiments, the researchers proposed, as mentioned at the beginning, the Platonic Representation Hypothesis, which speculates about the endpoint of this convergence.

That is, although different AI models are trained on different data and objectives, their representation spaces are converging on a common statistical model of the real world that generates the data we observe.

They first constructed an idealized world model of discrete events. The world consists of a sequence of discrete events Z, each sampled from an unknown distribution P(Z). Each event can be observed in different ways through an observation function obs, for example as pixels, sounds, or text.

Next, the authors considered a class of contrastive learning algorithms that try to learn a representation fX such that the inner product of fX(xa) and fX(xb) approximates the log odds of xa and xb being a positive pair (drawn from nearby observations) versus a negative pair (sampled at random).

After mathematical derivation, the authors found that if the data is smooth enough, this class of algorithms converges to a representation fX whose kernel is the pointwise mutual information (PMI) kernel of xa and xb.
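Written out, the result has roughly the following shape. This is a sketch under assumed notation, and the original paper should be consulted for the exact statement: P_coor denotes the probability that two observations co-occur (appear near each other), and c_X is a constant offset.

```latex
K_{\mathrm{PMI}}(x_a, x_b)
  = \log \frac{P_{\mathrm{coor}}(x_a, x_b)}{P_{\mathrm{coor}}(x_a)\, P_{\mathrm{coor}}(x_b)},
\qquad
\langle f_X(x_a), f_X(x_b) \rangle \approx K_{\mathrm{PMI}}(x_a, x_b) + c_X
```

In words, the learned similarity between two observations tracks how much more often they co-occur than would be expected if they were independent.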

Because the study considers an idealized discrete world in which the observation function obs is bijective, the PMI kernel of xa and xb equals the PMI kernel of the corresponding events za and zb.

This means that whether representations are learned from visual data X or language data Y, they eventually converge to the same kernel function representing P(Z), namely the PMI kernel between pairs of events.
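The role of bijectivity can be checked numerically. The sketch below makes several simplifying assumptions: a hypothetical random co-occurrence distribution over four events, and two observation functions that are plain relabelings (permutations) of those events. The PMI kernel computed from either set of observations, looked up at the observations of a pair of events, matches the PMI kernel computed directly on the events.

```python
import numpy as np

def pmi_kernel(joint: np.ndarray) -> np.ndarray:
    """PMI[i, j] = log(P(i, j) / (P(i) * P(j))) for a symmetric joint distribution."""
    marginal = joint.sum(axis=1)
    return np.log(joint / np.outer(marginal, marginal))

def observe(joint_z: np.ndarray, obs: np.ndarray) -> np.ndarray:
    """Co-occurrence distribution over observations induced by a bijection obs."""
    joint_obs = np.empty_like(joint_z)
    joint_obs[np.ix_(obs, obs)] = joint_z  # joint_obs[obs[i], obs[j]] = joint_z[i, j]
    return joint_obs

rng = np.random.default_rng(0)

# Hypothetical co-occurrence distribution over 4 discrete events z.
counts = rng.random((4, 4)) + 0.1
counts = counts + counts.T               # co-occurrence is symmetric
p_z = counts / counts.sum()

# Two bijective "observation functions" (assumed for illustration): relabelings.
obs_x = np.array([2, 0, 3, 1])           # event z is observed as x = obs_x[z]
obs_y = np.array([1, 3, 0, 2])           # event z is observed as y = obs_y[z]

k_z = pmi_kernel(p_z)
k_x = pmi_kernel(observe(p_z, obs_x))
k_y = pmi_kernel(observe(p_z, obs_y))

# Evaluate each observation-level kernel at the observations of events (z_a, z_b).
x_matches = np.allclose(k_x[np.ix_(obs_x, obs_x)], k_z)
y_matches = np.allclose(k_y[np.ix_(obs_y, obs_y)], k_z)
print(x_matches, y_matches)
```

If obs were not bijective, for example if several events produced the same observation, the observation-level statistics would merge those events and the equality would generally no longer hold.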

The researchers tested this theory through an empirical study on color. Whether color representations are learned from pixel co-occurrence statistics in images or from word co-occurrence statistics in text, the resulting color distances are similar to human perception, and this similarity increases as model size grows.

This is consistent with theoretical analysis, that is, greater model capability can more accurately model the statistics of observation data, thereby obtaining PMI kernels that are closer to ideal event representations.

Some final thoughts

At the end of the paper, the authors summarize the potential impact of representation convergence on the field of AI and future research directions, as well as potential limitations of and exceptions to the Platonic Representation Hypothesis.

They pointed out that as the model size increases, the possible effects of convergence of representation include but are not limited to:

- Although simply scaling up can improve performance, different methods differ in how efficiently they scale.

- If there is a modality-independent Platonic representation, then data from different modalities should be jointly trained to find this shared representation. This explains why it is beneficial to add visual data to language model training and vice versa.

- Conversion between aligned representations should be relatively simple, which may explain why conditional generation is easier than unconditional generation, and cross-modal conversion can be achieved without paired data.

- Increased model size may reduce the tendency of language models to fabricate content and some of their biases, making them more accurately reflect the biases in the training data rather than exacerbating them.

The author emphasizes that the premise of the above impact is that the training data of future models must be sufficiently diverse and lossless to truly converge to a representation that reflects the statistical laws of the actual world.

At the same time, the author also stated that data of different modalities may contain unique information, which may make it difficult to achieve complete representation convergence even as the model size increases. In addition, not all representations are currently converging. For example, there is no standardized way of representing states in the field of robotics. Researcher and community preferences may lead models to converge toward human representations, thereby ignoring other possible forms of intelligence.

In addition, intelligent systems designed for specific tasks may not converge to the same representations as general intelligence.

The authors also highlight that methods of measuring representation alignment are controversial, and different measurement methods may lead to different conclusions. Even if the representations of different models are similar, gaps remain to be explained, and it is currently impossible to determine whether this gap is important.

For more details and the full argument, see the paper~

Paper link: https://arxiv.org/abs/2405.07987

The above is the detailed content of Ilya's first action after leaving his job: Liked this paper, and netizens rushed to read it. For more information, please follow other related articles on the PHP Chinese website!

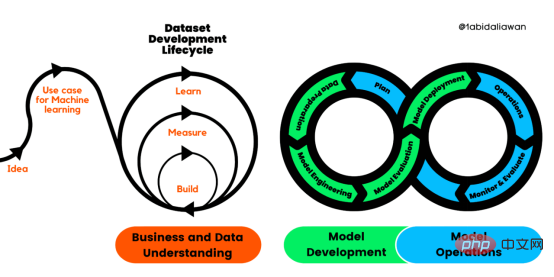

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SublimeText3 Linux new version

SublimeText3 Linux latest version

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),