Simple and universal: 3× lossless training acceleration for visual backbone networks, Tsinghua's EfficientTrain++ accepted by TPAMI 2024

Wang Yulin, the author of the paper discussed here, is a direct-entry PhD student (class of 2019) in the Department of Automation, Tsinghua University, advised by Academician Wu Cheng and Associate Professor Huang Gao. His main research interests are efficient deep learning and computer vision. He has published first-author papers in journals and conferences including TPAMI, NeurIPS, ICLR, ICCV, CVPR, and ECCV, and has received the Baidu Scholarship, Microsoft Scholar, CCF-CV Academic Emerging Award, ByteDance Scholarship, and other honors. Personal homepage: wyl.cool.

This article introduces a paper recently accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI): EfficientTrain++: Generalized Curriculum Learning for Efficient Visual Backbone Training.

- Paper link: https://arxiv.org/pdf/2405.08768

- Code and pre-trained models (open source): https://github.com/LeapLabTHU/EfficientTrain

- Conference version of the paper (ICCV 2023): https://arxiv.org/pdf/2211.09703

Scaling up models and data, however, often brings prohibitively high training overhead, which significantly hinders the further development and industrial application of visual foundation models.

To solve this problem, a research team at Tsinghua University proposed a generalized curriculum learning algorithm: EfficientTrain++. The core idea is to extend the traditional curriculum learning paradigm of "selecting data from easy to difficult and training the model progressively" to one that "does not select along the data dimension and always uses all training data, but gradually reveals the easy-to-difficult features or patterns of each data sample during training".

EfficientTrain++ has several important highlights:

- Plug-and-play 1.5-3.0× lossless training acceleration for visual backbone networks. Neither upstream nor downstream model performance is lost, and the measured speedup is consistent with the theoretical results.

- General across training data scales (such as ImageNet-1K/22K; the effect on 22K is even more pronounced), across supervised and self-supervised learning (such as MAE), and across training budgets (for example, 0-300 or more epochs).

- General across network architectures such as ViT and ConvNet (more than twenty models of different sizes and types are tested in the paper, with consistent gains).

- For smaller models, in addition to accelerating training, it can significantly improve performance (for example, without additional information or additional training overhead, DeiT-S reaches 81.3% on ImageNet-1K, comparable to the original Swin-Tiny).

- Dedicated practical efficiency optimizations for two challenging but common situations: 1) the CPU or hard disk is not powerful enough, so data preprocessing cannot keep up with the GPU; 2) large-scale parallel training, such as training large models on ImageNet-22K with 64 or more GPUs.

Next, let's take a look at the details of the study.

1. Research motivation

In recent years, the rapid development of large-scale foundation models has driven progress in artificial intelligence and deep learning. In computer vision, representative works such as Vision Transformer (ViT), CLIP, SAM, and DINOv2 have shown that scaling up neural network size and training data can significantly push the performance boundaries of important visual tasks such as recognition, detection, and segmentation.

However, large foundation models often come with high training overhead. Figure 1 gives two typical examples. With 8 NVIDIA V100 or higher-performance GPUs, completing a single training run of GPT-3 or ViT-G would take years or even decades. Such training costs are hard to afford for both academia and industry, and often only a few top institutions can spend the resources needed to advance deep learning. An urgent question is therefore: how can the training efficiency of large-scale deep learning models be improved effectively?

Figure 1 Example: high training overhead of large deep learning foundation models

For computer vision models, a classic idea is curriculum learning, shown in Figure 2, which imitates the progressive, highly structured way humans learn: training starts with the "simplest" training data and gradually introduces data from easy to difficult.

Figure 2 Classic Curriculum Learning Paradigm (Picture Source: "A Survey on Curriculum Learning", TPAMI'22)


However, although the motivation is natural, curriculum learning has not been widely adopted as a general method for training visual foundation models. The main reason is two key bottlenecks, shown in Figure 3. First, designing an effective training curriculum is not easy. Distinguishing "simple" from "difficult" samples often requires additional pre-trained models, more complex AutoML algorithms, reinforcement learning, and so on, and such designs generalize poorly. Second, the way curriculum learning models the problem is itself somewhat unreasonable. Naturally distributed visual data is highly diverse. The lower part of Figure 3 gives an example with parrot images randomly selected from ImageNet: the training data contains parrots in different poses, at different distances from the camera, from different viewpoints and against different backgrounds, as well as diverse interactions between parrots and people or objects. Separating such diverse data using only a one-dimensional "simple" versus "difficult" indicator is a rather coarse and far-fetched way to model it.

Figure 3 Two key bottlenecks that hinder the large-scale application of curriculum learning to training visual foundation models

2. Method Introduction

Motivated by the above challenges, this paper proposes a generalized curriculum learning paradigm. The core idea is to extend the traditional curriculum learning paradigm of "selecting data from easy to difficult and training the model progressively" to one that "does not select along the data dimension and always uses all training data, but gradually reveals the easy-to-difficult features or patterns of each data sample during training", thereby avoiding the limitations and sub-optimal designs caused by the data-selection paradigm, as shown in Figure 4.

Figure 4 Traditional curriculum learning (sample dimension) vs. generalized curriculum learning (feature dimension)


This paradigm is mainly motivated by an interesting phenomenon: when a visual model is trained in the natural way, even though it has access to all the information in the data at all times, it always learns to recognize some simpler discriminative features (patterns) first and then gradually learns to recognize more difficult ones on that basis. Moreover, this behavior is fairly universal: "relatively simple" discriminative features are easy to identify in both the frequency domain and the spatial domain. The paper designs a series of experiments to demonstrate these findings, as described below.

From a frequency-domain perspective, "low-frequency features" are "relatively simple" for the model. In Figure 5, the authors train a DeiT-S model on the standard ImageNet-1K training data, apply low-pass filters of different bandwidths to the validation set so that only the low-frequency components of the validation images remain, and report the accuracy of DeiT-S on the low-pass filtered validation data over the course of training. The resulting curves of accuracy against training progress are shown on the right of Figure 5.

An interesting phenomenon appears: in the early stages of training, using only low-pass filtered validation data does not significantly reduce accuracy, and the point at which each curve separates from the accuracy curve on the normal validation set moves gradually to the right as the filter bandwidth increases. This shows that although the model always has access to both the low- and high-frequency parts of the training data, its learning process naturally starts by focusing only on low-frequency information, and the ability to recognize higher-frequency features is acquired gradually later in training (for more evidence, please refer to the original paper).
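The filtering step used in this experiment can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: the function name `low_pass_filter`, the square frequency mask, and the `radius` parameter are assumptions made for illustration, and the paper may define the filter bandwidth differently.

```python
import numpy as np


def low_pass_filter(image: np.ndarray, radius: int) -> np.ndarray:
    """Zero out every 2D frequency whose index exceeds `radius` on either axis.

    `image` is a single-channel 2D array; the output has the same shape.
    The square mask and the name `radius` are illustrative assumptions.
    """
    h, w = image.shape
    # Integer frequency indices along each axis (0, 1, ..., -2, -1).
    fy = np.fft.fftfreq(h) * h
    fx = np.fft.fftfreq(w) * w
    mask = (np.abs(fy)[:, None] <= radius) & (np.abs(fx)[None, :] <= radius)
    spectrum = np.fft.fft2(image) * mask
    # The mask is symmetric, so the inverse transform of a real image is real
    # up to rounding error.
    return np.fft.ifft2(spectrum).real


# Usage: a low-frequency wave survives, a high-frequency wave is removed.
n = 32
_, x = np.mgrid[0:n, 0:n]
low = np.cos(2 * np.pi * 2 * x / n)    # 2 cycles across the image
high = np.cos(2 * np.pi * 12 * x / n)  # 12 cycles across the image
filtered = low_pass_filter(low + high, radius=4)
```

Evaluating a model on validation images filtered with several values of `radius` at different points in training would give curves of the kind described above.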

Figure 5 From a frequency domain perspective, the model naturally tends to learn to identify low-frequency features first


This finding raises an interesting question: can we design a training curriculum that starts by providing the model with only the low-frequency information of the visual input and then gradually introduces the high-frequency information?

Figure 6 investigates this idea by applying low-pass filtering to the training data only during an early training phase of a given length, leaving the rest of training unchanged. The results show that, although the final performance improvement is limited, the final accuracy of the model is largely preserved even when only low-frequency components are provided to the model for a considerable portion of early training. This is consistent with the observation in Figure 5 that the model mainly focuses on learning to recognize low-frequency features in the early stages of training.

This finding prompted the authors to think about training efficiency: since the model needs only the low-frequency components of the data in the early stages of training, and those components carry less information than the original data, can the model learn from the low-frequency components alone at a lower computational cost than processing the original input?

Figure 6 Providing only low-frequency components to the model for a long period of early training does not significantly affect the final performance


In fact, this idea is entirely feasible. As shown on the left of Figure 7, the authors introduce a cropping operation on the Fourier spectrum of the image: the low-frequency part is cropped out and mapped back to pixel space. This low-frequency cropping exactly preserves the low-frequency information while shrinking the input image, so the computational cost of learning from the input drops sharply.

If low-frequency cropping is applied to the model input in the early stages of training, the overall training cost can be reduced significantly, and because the information needed for learning is retained as fully as possible, the final model still shows almost no loss in performance. The experimental results are shown in the lower right of Figure 7.
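As a rough illustration of low-frequency cropping, the sketch below crops the centre of the shifted 2D Fourier spectrum and transforms the crop back to a smaller image. It is a minimal NumPy example under stated assumptions, not the implementation from the paper or its repository: the function name `low_freq_crop`, the single-channel input, and the rescaling factor are choices made here for illustration.

```python
import numpy as np


def low_freq_crop(image: np.ndarray, size: int) -> np.ndarray:
    """Return a `size` x `size` image built from the lowest frequencies of `image`.

    `image` is a single-channel 2D array with both sides >= `size`.
    """
    h, w = image.shape
    # Move the zero-frequency term to the centre of the spectrum.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    top = h // 2 - size // 2
    left = w // 2 - size // 2
    cropped = spectrum[top:top + size, left:left + size]
    # Undo the shift and map the cropped spectrum back to pixel space.
    small = np.fft.ifft2(np.fft.ifftshift(cropped)).real
    # Rescale so that pixel intensities keep their original range.
    return small * (size * size) / (h * w)


# Usage: a 32x32 low-frequency wave becomes the same wave on a 16x16 grid.
_, x32 = np.mgrid[0:32, 0:32]
_, x16 = np.mgrid[0:16, 0:16]
wave32 = np.cos(2 * np.pi * 2 * x32 / 32)
wave16 = low_freq_crop(wave32, size=16)
```

Unlike the low-pass filter, the output here has fewer pixels than the input, which is where the saving in per-step computation comes from.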

Figure 7 Low-frequency cropping: letting the model learn efficiently from low-frequency information only

Beyond frequency-domain operations, "relatively simple" features for the model can also be found from the perspective of spatial-domain transformations. For example, the natural image information in raw visual inputs that have not undergone strong data augmentation or distortion is often "simpler" and easier for the model to learn because it comes from the real-world distribution, whereas the additional information and invariances introduced by preprocessing such as data augmentation are often harder to learn (a typical example is given on the left of Figure 8).

In fact, existing research has also observed that data augmentation mainly plays a role in the later stages of training (for example, "Improving Auto-Augment via Augmentation-Wise Weight Sharing", NeurIPS'20).

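Along this dimension, the generalized curriculum learning paradigm can be realized simply by varying the strength of data augmentation, so that in the early stages of training the model is given only the easier-to-learn natural image information in the training data. The right side of Figure 8 validates this idea using RandAugment as a representative example. RandAugment comprises a family of common spatial-domain augmentation transforms (for example, random rotation, sharpness changes, affine transformations, and exposure changes).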

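It can be observed that starting training with weaker data augmentation effectively improves the final performance of the model, and that this technique is compatible with low-frequency cropping.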
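One simple way to express a weak-to-strong augmentation curriculum is to make the RandAugment magnitude a function of training progress. The sketch below is an assumption for illustration only: the linear ramp, the function name `randaug_magnitude`, and the end value of 9 (a magnitude often used in practice) are not taken from the article.

```python
def randaug_magnitude(progress: float, start: float = 0.0, end: float = 9.0) -> float:
    """Linearly interpolate the augmentation magnitude over training.

    `progress` is the fraction of training completed, in [0, 1]; values
    outside that range are clamped. `start` and `end` are hypothetical.
    """
    progress = min(max(progress, 0.0), 1.0)
    return start + (end - start) * progress


# Usage: query the magnitude at the start of each of 5 epochs.
magnitudes = [randaug_magnitude(epoch / 5) for epoch in range(5)]
```

The returned value would be passed to whatever augmentation pipeline is in use when the data loader is rebuilt or reconfigured for the next epoch.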

Figure 8 Finding features that are "easier to learn" for the model from a spatial-domain perspective: a data augmentation view

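Up to this point, the paper has presented the core framework and hypotheses of generalized curriculum learning and has demonstrated its rationality and effectiveness by revealing two key phenomena in the frequency and spatial domains. Building on this, the paper completes a series of systematic studies, listed below. For reasons of space, further details can be found in the original paper.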

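- Combining the two core findings in the frequency and spatial domains, it proposes and refines a specially designed optimization algorithm and establishes a unified, integrated EfficientTrain++ generalized curriculum learning scheme.

- It discusses how to implement low-frequency cropping efficiently on real hardware, and compares, both theoretically and experimentally, the differences and connections between two feasible ways of extracting low-frequency information: low-frequency cropping and image downsampling.

- It develops dedicated practical efficiency optimizations for two challenging but common situations: 1) the CPU or hard disk is not powerful enough, so data preprocessing cannot keep up with the GPU; 2) large-scale parallel training, such as training large models on ImageNet-22K with 64 or more GPUs.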

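The final EfficientTrain++ generalized curriculum learning scheme is shown in Figure 9. EfficientTrain++ dynamically adjusts the bandwidth of the frequency-domain low-frequency cropping and the strength of the spatial-domain data augmentation according to the percentage of the total training compute budget consumed so far.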
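A schedule driven by consumed compute can be sketched as a small lookup table. Everything concrete below is hypothetical: the stage boundaries, the bandwidth values, the names `STAGES` and `bandwidth_at`, and the assumption that the cost of one training step scales with the number of input pixels. The actual schedule is the one given in Figure 9 of the paper.

```python
from bisect import bisect_right

FULL_SIZE = 224  # side length of the full-resolution input (assumed)

# Hypothetical stages: (cumulative compute fraction at which the stage ends,
# low-frequency cropping bandwidth used during that stage).
STAGES = [(0.4, 128), (0.7, 160), (0.9, 192), (1.0, 224)]


def bandwidth_at(compute_fraction: float) -> int:
    """Return the bandwidth to use once `compute_fraction` of the budget is spent."""
    ends = [end for end, _ in STAGES]
    index = min(bisect_right(ends, compute_fraction), len(STAGES) - 1)
    return STAGES[index][1]


def relative_step_cost(bandwidth: int) -> float:
    """Cost of one step relative to full resolution, assuming cost ~ pixel count."""
    return (bandwidth / FULL_SIZE) ** 2


def steps_per_unit_budget() -> float:
    """Steps affordable under STAGES, relative to always training at FULL_SIZE."""
    total, previous_end = 0.0, 0.0
    for end, bandwidth in STAGES:
        # Budget share of this stage divided by its per-step cost.
        total += (end - previous_end) / relative_step_cost(bandwidth)
        previous_end = end
    return total
```

With these made-up stage values, the same compute budget covers a little over twice as many training steps as full-resolution training. The augmentation strength can be driven by the same `compute_fraction` argument.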

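Notably, as a plug-and-play method, EfficientTrain++ can be applied directly to a variety of visual backbone networks and diverse training scenarios without further hyperparameter tuning or search, with stable and significant gains.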

Figure 9 A unified, integrated generalized curriculum learning scheme: EfficientTrain++

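3. Experimental results

As a plug-and-play method, EfficientTrain++ reduces the actual training cost of a variety of visual backbone networks on ImageNet-1K by about 1.5×, with essentially no loss of, or even a gain in, performance.

Figure 10 ImageNet-1K results: performance of EfficientTrain++ on a variety of visual backbone networks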

The gains of EfficientTrain++ hold across different training budgets. At strictly equal performance, the training speedup for DeiT/Swin on ImageNet-1K is about 2-3×.

Figure 11 ImageNet-1K results: performance of EfficientTrain++ under different training budgets

On ImageNet-22K, EfficientTrain++ achieves a 2-3× lossless pre-training speedup.

Figure 12 ImageNet-22K results: performance of EfficientTrain++ on larger-scale training data

For smaller models, EfficientTrain++ significantly raises the upper bound of achievable performance.

Figure 13 ImageNet-1K results: EfficientTrain++ significantly raises the performance upper bound of smaller models

EfficientTrain++ is also effective for self-supervised learning algorithms (such as MAE).

Figure 14 EfficientTrain++ can be applied to self-supervised learning (such as MAE)

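Models trained with EfficientTrain++ likewise lose no performance on downstream tasks such as object detection, instance segmentation, and semantic segmentation.

Figure 15 Results on COCO object detection, COCO instance segmentation, and ADE20K semantic segmentation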

The above is the detailed content of Simple and universal: 3 times lossless training acceleration of visual basic network, Tsinghua EfficientTrain++ selected for TPAMI 2024. For more information, please follow other related articles on the PHP Chinese website!

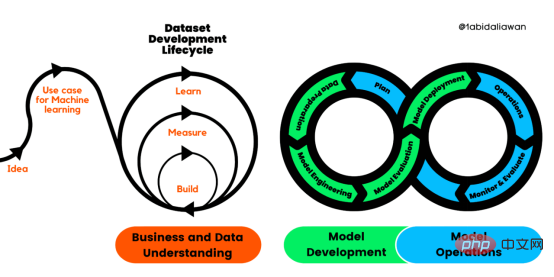

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

WebStorm Mac version

Useful JavaScript development tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function