The open-source version of GLM-4 is finally here: surpassing Llama 3, multimodality comparable to GPT-4V, and a major upgrade to the MaaS platform

The latest version of the large model costs 6 cents per 1 million tokens.

This morning, at the AI Open Day, Zhipu AI, a large model company that has attracted much attention, announced a series of industry implementation figures:

According to the latest statistics, Zhipu AI's large model open platform now has 300,000 registered users, and its average daily call volume has reached 40 billion tokens. Daily API consumption has grown more than 50-fold over the past 6 months, and consumption of the most powerful model, GLM-4, has grown more than 90-fold over the past 4 months.

In the Qingyan app, more than 300,000 agents are now active in the agent center, including many excellent productivity tools such as mind maps, document assistants, and schedulers.

On the technology side, GLM-4-9B, the latest version of GLM-4, surpasses Llama 3 8B in all aspects. The multimodal model GLM-4V-9B is also online, and all of these models remain open source.

A series of commercial achievements and technological breakthroughs are eye-catching.

MaaS platform upgrade to version 2.0

Lowering the threshold for large model applications

Recently, domestic large models have set off a new round of competition.

In early May, Zhipu AI took the lead by cutting the price of its GLM-3-Turbo service to 1/5 of the original, which prompted many other players in the large model field to "join the war." From the rush to found startups and the "Battle of 100 Models" to the price war, competition in the large model industry has kept escalating.

Reducing the cost of large model services lets more enterprises and developers access the new technology, which in turn generates enough usage. This not only accelerates technological breakthroughs, but also helps large models penetrate various industries quickly and speeds up commercialization.

It is worth mentioning that the price of large models has already been pushed very low, but Zhipu says it is not afraid of a price war.

"I believe everyone is familiar with the recent large model price war, and is also very interested in Zhipu's commercialization strategy. We are proud to say that we rely on iterating core model technology and improving efficiency, using technological innovation to keep reducing application costs while continuing to increase customer value," said Zhang Peng, CEO of Zhipu AI.

Zhipu announced a series of new price adjustments tiered by the scale of an enterprise's usage. The maximum API discount is 40% off, and the GLM-4-9B version costs only 6 cents per 1 million tokens. Compared with the beginning of last year, the price of GLM-series large models has fallen by a factor of 10,000.
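To make the quoted rate concrete, the arithmetic can be sketched as follows. This is a minimal illustration, not Zhipu's billing logic: it assumes "6 cents" means 0.06 yuan per million tokens (the article does not spell out the currency unit), and the function name and sample workload are hypothetical.

```python
from decimal import Decimal

# Assumption: "6 cents / 1 million tokens" is read as 0.06 yuan per million tokens.
GLM_4_9B_YUAN_PER_MILLION = Decimal("0.06")


def token_cost_yuan(tokens: int, yuan_per_million: Decimal) -> Decimal:
    """Return the cost in yuan of processing `tokens` tokens at a per-million rate."""
    return Decimal(tokens) * yuan_per_million / Decimal(1_000_000)


# Hypothetical workload: 50 million tokens in a month.
monthly_cost = token_cost_yuan(50_000_000, GLM_4_9B_YUAN_PER_MILLION)
print(monthly_cost)
```

Using `Decimal` rather than floating point keeps small per-token prices exact, which matters when rates are fractions of a yuan.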

As one of the first startups to invest in generative AI, Zhipu AI has commercialized faster than many competitors. It has built a product matrix on top of its multimodal pre-trained models at the hundred-billion-parameter scale. For consumers, it has launched GLMs, a personalized agent customization tool that lets users create their own GLM agents from simple prompts without any programming knowledge. For business customers, the latest generation of GLM-4 large models is available on the MaaS (Model as a Service) platform with API access.

The Zhipu AI open platform.

On today’s Open Day, Zhipu launched the MaaS open platform 2.0, which has achieved improvements in new models, costs, security and other aspects. At the event, Zhipu AI introduced the latest progress of its open platform. The upgraded model fine-tuning platform can help enterprises greatly simplify the process of building private models. The entire range of GLM-4 large models now supports deployment in just three steps.

GLM-4-9B comprehensively surpasses Llama 3

Multi-modal parity with GPT-4V, open source and free

For Zhipu AI, which regards building AGI as its goal, continuously iterating on large model capabilities is also a top priority.

Since going all in on large models in 2020, Zhipu has been at the forefront of the artificial intelligence wave. Its research covers every aspect of large model technology: the original pre-training framework GLM, adaptation to domestic computing hardware, general-purpose base models, semantic reasoning, multimodal generation, long context, visual understanding, and agent capabilities. In all of these areas, Zhipu has invested considerable resources to promote original innovation.

Over the past year, Zhipu has launched four generations of general-purpose large models: ChatGLM in March 2023, ChatGLM2 in June, ChatGLM3 in October, and, in January this year, the latest-generation base model GLM-4. At the Open Day, Zhipu AI presented the latest open-source release in the GLM-4 base model family: GLM-4-9B.

It is the open-source version of the latest-generation GLM-4 series of pre-trained models. GLM-4-9B has stronger basic capabilities and a longer context, implements more precise function calling and All Tools capabilities, and offers multimodal capabilities for the first time.

Building on its powerful pre-trained base, GLM-4-9B's combined Chinese and English performance is 40% higher than that of ChatGLM3-6B, with significant improvements on benchmarks such as AlignBench (Chinese alignment), IFEval (instruction following), and NaturalCodeBench (engineering code). Compared with Llama 3 8B, which was trained on more data, it holds its own: it has a slight lead in English and an improvement of up to 50% in Chinese subjects.

The context length of the new model has been extended from 128K to 1M tokens, which means the model can process about 2 million Chinese characters of input at once, equivalent to two copies of Dream of Red Mansions or 125 papers. On LongBench-Chat at a length of 128K, the GLM-4-9B-Chat model improves by 20% over the previous generation. In the needle-in-a-haystack test at a length of 1M, GLM-4-9B-Chat-1M also achieved an all-green result.
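The ratios implied by these context-length figures can be worked out with a few lines of arithmetic. This is a rough sketch that takes the article's numbers at face value and assumes the "2 million" figure counts Chinese characters; the helper `fits_in_context` is hypothetical, and real token counts depend on the tokenizer and the text.

```python
CONTEXT_TOKENS = 1_000_000       # 1M-token context window (from the article)
CONTEXT_CHARACTERS = 2_000_000   # about 2 million characters (from the article)

# Implied average number of characters per token.
chars_per_token = CONTEXT_CHARACTERS / CONTEXT_TOKENS

# Implied size of each item in the article's two comparisons.
chars_per_novel_copy = CONTEXT_CHARACTERS // 2   # two copies of the novel
chars_per_paper = CONTEXT_CHARACTERS // 125      # 125 papers


def fits_in_context(num_characters: int, context_tokens: int = CONTEXT_TOKENS) -> bool:
    """Estimate whether a text fits in the window, using the implied ratio."""
    estimated_tokens = num_characters / chars_per_token
    return estimated_tokens <= context_tokens


print(chars_per_token, chars_per_novel_copy, chars_per_paper)
```

Under these assumptions each token covers about two characters, so a quick character count gives a first estimate of whether a document fits before any tokenizer is run.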

The new generation of large models also improves support for multiple languages. The model vocabulary has been expanded from 60,000 to 150,000 tokens, and encoding efficiency for languages other than Chinese and English has increased by an average of 30%, which means the model can handle tasks in less widely used languages faster. Evaluations show that the multilingual capabilities of GLM-4-9B comprehensively exceed those of Llama 3 8B.

GLM-4-9B can run locally on consumer-grade graphics cards while delivering strong dialogue capabilities, support for 1-million-token long texts, and multilingual coverage. More importantly, the large models released by Zhipu are completely free and open source. Now every developer can run this version of the GLM-4 model locally.

GitHub link: https://github.com/THUDM/GLM-4

Hugging Face: https://huggingface.co/collections/THUDM/glm-4-665fcf188c414b03c2f7e3b7

ModelScope: https://modelscope.cn/organization/ZhipuAI

In addition to the powerful text model, Zhipu AI also open-sourced GLM-4V-9B, a multimodal model based on GLM-4-9B. By adding a Vision Transformer, this model achieves capabilities comparable to GPT-4V with only 13B parameters.

While the technology evolves, the price of large models keeps falling. Zhipu has launched the GLM-4-Air model, which largely retains the performance of the GLM-4 model released in January while cutting the price significantly, to 1 yuan per million tokens.

GLM-4-Air delivers performance comparable to the larger GLM-4-0116 model at 1/100 of the price. The GLM-4-Air API is also much faster at inference: compared with GLM-4-0116, its inference speed has increased by 200%, and it can output 71 tokens per second, far faster than the human eye can read.
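The speed figures can be turned into rough latency estimates. The sketch below is illustrative only: it assumes "increased by 200%" means three times the earlier speed (it could loosely mean double), and the function name and the 500-token answer are hypothetical.

```python
AIR_TOKENS_PER_SECOND = 71.0   # GLM-4-Air output speed quoted in the article
SPEEDUP_PERCENT = 200.0        # "increased by 200%" relative to GLM-4-0116

# Assumption: a 200% increase means the new speed is 3x the old one.
speed_ratio = 1.0 + SPEEDUP_PERCENT / 100.0
implied_baseline_tps = AIR_TOKENS_PER_SECOND / speed_ratio


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Return the time needed to stream `output_tokens` at a steady rate."""
    return output_tokens / tokens_per_second


# Hypothetical 500-token answer.
air_seconds = generation_seconds(500, AIR_TOKENS_PER_SECOND)
baseline_seconds = generation_seconds(500, implied_baseline_tps)
print(round(air_seconds, 1), round(baseline_seconds, 1))
```

The estimate ignores time to first token and network latency, so it is a lower bound on what a user would actually observe.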

Zhipu stated that the price adjustments for its large models are the combined result of technological breakthroughs, improved computing efficiency, and cost control. It will continue to adjust prices at regular intervals to better meet the needs of developers and customers. In its view, highly competitive prices are not only reasonable but also in line with its own business strategy.

Ecosystem building reaches the next level

As one of the first domestic startups to enter the large model track, Zhipu AI has now become a leading representative of domestic AI technology companies.

It is not only a leader in domestic large model technology, but also a Chinese force that cannot be ignored in large model academia and the open-source ecosystem. Zhipu has broad influence in the AI field, with cumulative downloads of its open-source models reaching 16 million. Supporting the open-source community is Zhipu's unwavering commitment.

Going one step further, Zhipu AI is also helping to formulate AI safety standards for large models. On May 22, companies from different countries and regions, including OpenAI, Google, Microsoft, and Zhipu AI, jointly signed the Frontier AI Safety Commitments. The document calls for responsible governance structures and transparency around frontier AI safety, for responsible explanations of how the risks of frontier AI models are measured, and for clear processes for mitigating those risks.

Beyond the AI field itself, Zhipu AI is driving productivity changes through MaaS in the many industries that have benefited from large model breakthroughs, and its large model ecosystem has begun to take shape.

"Why do we judge that 2024 is the first year of AGI? If this question had to be answered in one sentence: the Scaling Law has not expired, and AI technology growth has entered a new stage. Large model technology innovation is still advancing by leaps and bounds, and there are even signs that it is speeding up," Zhang Peng said. "Frankly speaking, we have never seen a technology iterate along such a steep innovation curve, and for such a long time."

Zhipu AI's technological innovation and commercial deployment are both accelerating along this steep curve.

Throughout this period of technological development, Zhipu AI has been on the fast track.


A Business Leader's Guide To Generative Engine Optimization (GEO)May 03, 2025 am 11:14 AM

A Business Leader's Guide To Generative Engine Optimization (GEO)May 03, 2025 am 11:14 AMGoogle is leading this shift. Its "AI Overviews" feature already serves more than one billion users, providing complete answers before anyone clicks a link.[^2] Other players are also gaining ground fast. ChatGPT, Microsoft Copilot, and Pe

This Startup Is Using AI Agents To Fight Malicious Ads And Impersonator AccountsMay 03, 2025 am 11:13 AM

This Startup Is Using AI Agents To Fight Malicious Ads And Impersonator AccountsMay 03, 2025 am 11:13 AMIn 2022, he founded social engineering defense startup Doppel to do just that. And as cybercriminals harness ever more advanced AI models to turbocharge their attacks, Doppel’s AI systems have helped businesses combat them at scale— more quickly and

How World Models Are Radically Reshaping The Future Of Generative AI And LLMsMay 03, 2025 am 11:12 AM

How World Models Are Radically Reshaping The Future Of Generative AI And LLMsMay 03, 2025 am 11:12 AMVoila, via interacting with suitable world models, generative AI and LLMs can be substantively boosted. Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including

May Day 2050: What Have We Left To Celebrate?May 03, 2025 am 11:11 AM

May Day 2050: What Have We Left To Celebrate?May 03, 2025 am 11:11 AMLabor Day 2050. Parks across the nation fill with families enjoying traditional barbecues while nostalgic parades wind through city streets. Yet the celebration now carries a museum-like quality — historical reenactment rather than commemoration of c

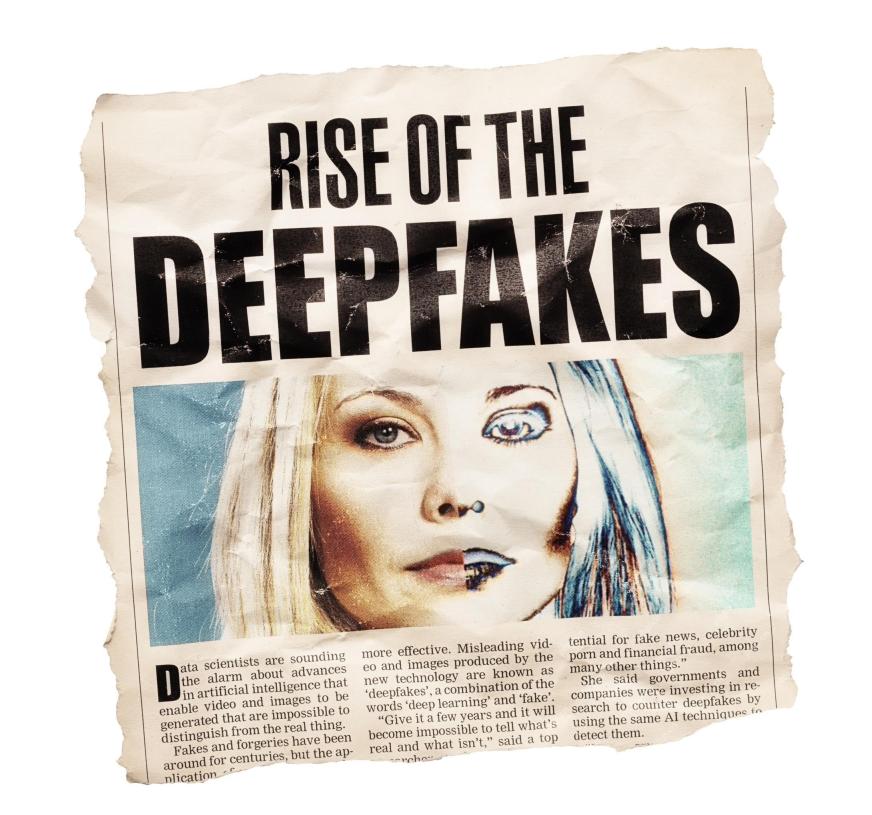

The Deepfake Detector You've Never Heard Of That's 98% AccurateMay 03, 2025 am 11:10 AM

The Deepfake Detector You've Never Heard Of That's 98% AccurateMay 03, 2025 am 11:10 AMTo help address this urgent and unsettling trend, a peer-reviewed article in the February 2025 edition of TEM Journal provides one of the clearest, data-driven assessments as to where that technological deepfake face off currently stands. Researcher

Quantum Talent Wars: The Hidden Crisis Threatening Tech's Next FrontierMay 03, 2025 am 11:09 AM

Quantum Talent Wars: The Hidden Crisis Threatening Tech's Next FrontierMay 03, 2025 am 11:09 AMFrom vastly decreasing the time it takes to formulate new drugs to creating greener energy, there will be huge opportunities for businesses to break new ground. There’s a big problem, though: there’s a severe shortage of people with the skills busi

The Prototype: These Bacteria Can Generate ElectricityMay 03, 2025 am 11:08 AM

The Prototype: These Bacteria Can Generate ElectricityMay 03, 2025 am 11:08 AMYears ago, scientists found that certain kinds of bacteria appear to breathe by generating electricity, rather than taking in oxygen, but how they did so was a mystery. A new study published in the journal Cell identifies how this happens: the microb

AI And Cybersecurity: The New Administration's 100-Day ReckoningMay 03, 2025 am 11:07 AM

AI And Cybersecurity: The New Administration's 100-Day ReckoningMay 03, 2025 am 11:07 AMAt the RSAC 2025 conference this week, Snyk hosted a timely panel titled “The First 100 Days: How AI, Policy & Cybersecurity Collide,” featuring an all-star lineup: Jen Easterly, former CISA Director; Nicole Perlroth, former journalist and partne

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.