
A new chain for 3D perception in embodied agents: TeleAI & Shanghai AI Lab propose the multi-view fusion embodied model 'SAM-E'


The AIxiv column is a column where this site publishes academic and technical content. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

First of all, SAM-E has a powerful promptable "

Subsequently, a multi-view Transformer is designed to fuse and align depth features, image features and instruction features to achieve object "

Finally, a

Paper title: SAM-E: Leveraging Visual Foundation Model with Sequence Imitation for Embodied Manipulation

Paper link: https://sam-embodied.github.io/static/SAM-E.pdf

Project address: https://sam-embodied.github.io/

The core ideas of the SAM-E method fall into two aspects:

- Using SAM's prompt-driven structure, a powerful base model is constructed that generalizes well under task language instructions. LoRA fine-tuning then adapts the model to specific tasks, further improving its performance.

- Sequential action modeling is adopted to capture the temporal information in the action sequence, so that the model better understands the dynamic changes of the task and adjusts the robot's strategy and execution in a timely manner. This way, the robot can maintain high execution efficiency.
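To make the efficiency argument behind sequential action modeling concrete, here is a minimal sketch, not the SAM-E implementation. It assumes a policy that returns several future actions per inference call; `run_episode`, `toy_policy`, and the `horizon` values are hypothetical names and numbers chosen only for illustration.

```python
def run_episode(policy, total_steps, horizon):
    """Execute `total_steps` actions, querying the policy once per `horizon` steps."""
    executed = []
    queries = 0
    t = 0
    while t < total_steps:
        # One inference call returns a sequence of `horizon` future actions.
        chunk = policy(t, horizon)
        queries += 1
        # Execute the predicted sequence, truncated at the end of the episode.
        executed.extend(chunk[: total_steps - t])
        t += horizon
    return executed, queries


def toy_policy(t, horizon):
    # Hypothetical stand-in for a learned policy: the "action" is the timestep index.
    return [t + k for k in range(horizon)]


# Same 12-step task, predicted one action at a time versus four at a time.
actions_1, queries_1 = run_episode(toy_policy, total_steps=12, horizon=1)
actions_4, queries_4 = run_episode(toy_policy, total_steps=12, horizon=4)
print(queries_1, queries_4)
```

In this toy setting both runs execute the same actions, but the sequence-predicting run calls the policy a quarter as often. A real system would also have to weigh how far ahead it can predict before the scene changes.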

## Promptable perception and fine-tuning

In the training phase, SAM-E uses LoRA for efficient fine-tuning, which greatly reduces the number of trainable parameters and enables the vision foundation model to adapt quickly to specific tasks.
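The parameter saving from LoRA can be illustrated with a small pure-Python sketch. It is a generic illustration of the low-rank adaptation idea under assumed sizes, not the configuration used by SAM-E: the class `LoRALinear`, the layer size of 64, the rank of 4, and the random stand-in weights are all hypothetical.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, d_in, d_out, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        # Frozen "pretrained" weight (a random stand-in here), shape d_out x d_in.
        self.W = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
        # Trainable factors: A is rank x d_in (random), B is d_out x rank (zeros),
        # so the update B @ A is zero before any training step.
        self.A = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def frozen_params(self):
        return len(self.W) * len(self.W[0])


layer = LoRALinear(d_in=64, d_out=64, rank=4)
x = [1.0] * 64
print(layer.trainable_params(), layer.frozen_params())
```

With these assumed sizes, only the two small factors (512 values) would be trained while the 4,096 frozen weights stay untouched. Because `B` starts at zero, the adapted layer initially reproduces the frozen layer's output exactly.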

In multi-task scenarios, SAM-E effectively improves performance on new tasks by virtue of its strong generalization and efficient execution.

##

The above is the detailed content of A new chain of three-dimensional perception of embodied intelligence, TeleAI & Shanghai AI Lab proposed a multi-perspective fusion embodied model 'SAM-E'. For more information, please follow other related articles on the PHP Chinese website!

An AI Space Company Is BornMay 12, 2025 am 11:07 AM

An AI Space Company Is BornMay 12, 2025 am 11:07 AMThis article showcases how AI is revolutionizing the space industry, using Tomorrow.io as a prime example. Unlike established space companies like SpaceX, which weren't built with AI at their core, Tomorrow.io is an AI-native company. Let's explore

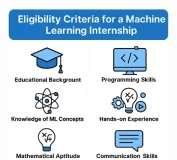

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AM

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AMLand Your Dream Machine Learning Internship in India (2025)! For students and early-career professionals, a machine learning internship is the perfect launchpad for a rewarding career. Indian companies across diverse sectors – from cutting-edge GenA

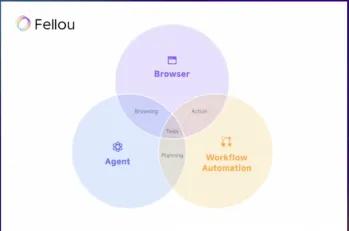

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AM

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AMThe landscape of online browsing has undergone a significant transformation in the past year. This shift began with enhanced, personalized search results from platforms like Perplexity and Copilot, and accelerated with ChatGPT's integration of web s

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AM

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AMCyberattacks are evolving. Gone are the days of generic phishing emails. The future of cybercrime is hyper-personalized, leveraging readily available online data and AI to craft highly targeted attacks. Imagine a scammer who knows your job, your f

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AM

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AMIn his inaugural address to the College of Cardinals, Chicago-born Robert Francis Prevost, the newly elected Pope Leo XIV, discussed the influence of his namesake, Pope Leo XIII, whose papacy (1878-1903) coincided with the dawn of the automobile and

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AM

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AMThis tutorial demonstrates how to integrate your Large Language Model (LLM) with external tools using the Model Context Protocol (MCP) and FastAPI. We'll build a simple web application using FastAPI and convert it into an MCP server, enabling your L

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AM

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AMExplore Dia-1.6B: A groundbreaking text-to-speech model developed by two undergraduates with zero funding! This 1.6 billion parameter model generates remarkably realistic speech, including nonverbal cues like laughter and sneezes. This article guide

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AM

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AMI wholeheartedly agree. My success is inextricably linked to the guidance of my mentors. Their insights, particularly regarding business management, formed the bedrock of my beliefs and practices. This experience underscores my commitment to mentor

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Atom editor mac version download

The most popular open source editor

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Dreamweaver Mac version

Visual web development tools