Is it better to have more data or higher quality? This research can help you make your choice

Scaling a foundation model means pre-training it with more data, compute, and parameters; in short, "scaling up".

Although simply scaling up model size seems crude, it has given the machine learning community many outstanding models. Many previous studies have endorsed scaling up neural network models, on the idea that quantitative change leads to qualitative change; this view is formalized as neural scaling laws. However, larger models consume far more computing resources, including processors and memory. This is infeasible for many practical applications, especially on resource-constrained devices. Researchers have therefore begun to focus on using computing resources more efficiently to improve models.

Recently, many people have come to believe that data is the key to the best closed-source models, whether LLMs, VLMs, or diffusion models. As the importance of data quality has been recognized, a great deal of research has emerged aimed at improving it, either by filtering high-quality data out of large datasets or by generating new high-quality data. However, past scaling laws generally treated "data" as a homogeneous entity and did not account for the data quality that has recently drawn so much attention.

Although the web holds a vast amount of data, high-quality data (as judged by multiple evaluation metrics) is often limited. Now a groundbreaking study addresses this with a scaling law along the data filtering dimension. It comes from Carnegie Mellon University and the Bosch Center for AI, and focuses on the quantity-quality trade-off (QQT) between "large scale" and "high quality."

- Paper title: Scaling Laws for Data Filtering—Data Curation cannot be Compute Agnostic

- Paper address: https://arxiv.org/pdf/2404.07177.pdf

- Code address: https://github.com/locuslab/scaling_laws_data_filtering

As shown in Figure 1, when training for multiple epochs, the utility of high-quality data diminishes, because the model has already learned from it.

At that point, using lower-quality data (which has lower initial utility) is often more helpful than reusing high-quality data.

Under the quantity-quality trade-off (QQT), how do we determine what kind of data combination is better for training?

To answer this question, any data curation workflow must take into account the total compute used for model training. This differs from how the community usually views data filtering. For example, the LAION filtering strategy extracts the highest-quality 10% from Common Crawl.

But as Figure 2 shows, once training exceeds 35 epochs, training on a completely uncurated dataset outperforms training on the high-quality data curated with the LAION strategy.

Current neural scaling laws cannot model this dynamic trade-off between quality and quantity. In addition, there are even fewer studies on scaling laws for vision-language models; most current research is limited to language modeling.

The study introduced here overcomes three important limitations of previous neural scaling laws. It:

(1) Considers the "quality" axis when scaling data;

(2) Estimates the scaling law of a combination of data pools without actually training on that combination, which helps guide optimal data curation decisions;

(3) Adapts LLM scaling laws to contrastive training (such as CLIP), where each batch involves a quadratic number of comparisons.

The team proposes, for the first time, a scaling law for heterogeneous web data of limited quantity.

Large models are trained on a combination of data pools of varying quality. By modeling the aggregate data utility derived from the scaling parameters of the individual data pools (A-F in Figure 1 (a)), it is possible to directly estimate the model's performance on any combination of these pools.

Importantly, this method does not require training on these data pool combinations to estimate their scaling laws; their scaling curves can be estimated directly from the scaling parameters of each component pool.

The new scaling law differs from earlier ones in some important ways. It models repetition in the contrastive training regime and accounts for the O(n²) comparisons involved. For example, if the size of the training pool is doubled, the number of comparisons that contribute to the model loss quadruples.
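The quadratic growth can be checked with a few lines of Python. This is a minimal sketch that assumes a CLIP-style setup in which each of n images is scored against each of n captions; the function name is hypothetical.

```python
def contrastive_comparisons(n: int) -> int:
    """Image-caption pairs scored when n images are each compared with n captions."""
    return n * n


for n in (1_000, 2_000, 4_000):
    print(n, contrastive_comparisons(n))
```

Each doubling of n multiplies the count by four, which is the quadratic behavior the adapted scaling law has to account for.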

They describe mathematically how data from different pools interact with each other, which allows model performance to be estimated under different data combinations. The result is a data curation strategy suited to the compute that is actually available.

One of the key messages of the study is that data curation cannot be done in isolation from compute.

When the compute budget is small (few repetitions), quality takes precedence under the QQT, as shown by aggressive filtering (E) performing best at low compute in Figure 1.

On the other hand, when compute far exceeds the available training data, the utility of limited high-quality data diminishes, and this has to be compensated for. A less aggressive filtering strategy, which keeps a larger volume of data, then performs better.

The team's experiments show that the new scaling law for heterogeneous web data can predict the Pareto-optimal filtering strategy at compute budgets from 32M to 640M, using DataComp's medium pool (128M samples).

Data filtering under a fixed compute budget

The team studied experimentally how data filtering behaves under different compute budgets.

They trained a VLM starting from a large initial data pool. As the unfiltered base pool, they chose the "medium" scale of DataComp, a recent data curation benchmark. This pool contains 128M samples. They evaluated the model's zero-shot performance on 18 different downstream tasks.

They first studied the filtering strategy used to obtain the LAION dataset; the results are shown in Figure 2. They observed the following:

1. When the compute budget is low, it is better to use high-quality data.

2. When the compute budget is high, data filtering can get in the way.

Why?

LAION filtering retains approximately 10% of the data, so at a compute budget of approximately 450M, each sample from the LAION-filtered pool is seen approximately 32 times. The key insight is that when the same sample is seen multiple times during training, its utility decreases each time.
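The repetition count is simple arithmetic: total samples seen divided by the size of the filtered pool. A small sketch, assuming the 128M-sample pool from the article and a hypothetical helper name:

```python
def repetitions(compute_budget: float, pool_size: float, keep_fraction: float) -> float:
    """Average number of times each retained sample is seen during training."""
    return compute_budget / (pool_size * keep_fraction)


POOL = 128e6  # DataComp medium pool size, as stated in the article

for budget in (32e6, 128e6, 450e6, 640e6):
    passes = repetitions(budget, POOL, keep_fraction=0.10)
    print(f"{budget / 1e6:.0f}M samples seen -> {passes:.1f} passes over the filtered pool")
```

With a keep fraction of exactly 10%, the 450M budget works out to about 35 passes, in the same range as the approximate figures quoted in the article; the exact count depends on how many samples the filter really retains.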

The team then studied two other data filtering methods:

(1) CLIP score filtering, using the CLIP L/14 model;

(2) T-MARS, which ranks data by CLIP score after masking text features (OCR) in the images.

For each filtering method, they used four filtering levels and a range of total compute budgets.

Figure 3 compares the results of Top 10-20%, Top 30%, and Top 40% CLIP filtering at compute scales of 32M, 128M, and 640M.

At the 32M compute scale, the most aggressive filtering strategy (retaining only the top 10-20% by CLIP score) gives the best results, while the least aggressive one (retaining the top 40%) performs worst. When compute is scaled up to 640M, however, this trend reverses completely. Similar trends are observed with the T-MARS score.
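Score-based filtering at a given level amounts to ranking the pool and keeping the top fraction. The sketch below illustrates that step only; the scores are random placeholders rather than real CLIP or T-MARS scores, and the function name is hypothetical.

```python
import random


def keep_top_fraction(samples, scores, fraction):
    """Return the samples whose score ranks in the top `fraction` of the pool."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, round(len(samples) * fraction))
    ranked = sorted(zip(scores, samples), key=lambda pair: pair[0], reverse=True)
    return [sample for _, sample in ranked[:k]]


random.seed(0)
pool = [f"sample_{i}" for i in range(1000)]    # stand-in for image-text pairs
clip_scores = [random.random() for _ in pool]  # placeholder scores, not real CLIP output

for fraction in (0.10, 0.20, 0.30, 0.40):
    kept = keep_top_fraction(pool, clip_scores, fraction)
    print(f"top {fraction:.0%}: {len(kept)} samples kept")
```

A smaller fraction gives a cleaner but smaller pool, so at a fixed compute budget each kept sample is repeated more often.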

Scaling laws for data filtering

The team first defined utility mathematically.

Rather than estimating the loss after n samples at the end of training, their approach considers the instantaneous utility of a sample at any point during training. In their formulation, the instantaneous utility of a sample is directly proportional to the current loss and inversely proportional to the number of samples seen so far. This matches intuition: the more samples the model has seen, the less each additional sample helps. The key quantity is the data utility parameter b.
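The formula itself did not survive in this copy of the article. As a hedged sketch, the verbal description is consistent with the differential form below, where y is the loss, n is the number of samples seen, d is a constant floor on the loss (set d = 0 to read the proportionality exactly as stated), and b < 0 for a decreasing loss. The symbol y, the sign convention, and the roles given to a and d are assumptions, not a quotation from the paper.

```latex
\frac{d\,(y - d)}{dn} = b\,\frac{y - d}{n}
\quad\Longrightarrow\quad
\int \frac{d\,(y - d)}{y - d} = b \int \frac{dn}{n}
\quad\Longrightarrow\quad
y(n) = a\,n^{b} + d
```

Integrating recovers the familiar power-law shape of a scaling law, with a as the integration constant, which is why b can be read as the utility of the data.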

The next step is to model the utility of data that is reused.

Mathematically, the utility parameter b of a sample that has been seen k+1 times is defined as a decayed version of its initial value, where τ is the half-life of the utility parameter: the higher the value of τ, the more slowly a sample's utility decays with repetition. The symbol δ is a more concise way of writing this decay of utility with repetition. From this follows an expression for the model's loss after it has seen n samples, each of them k times, written in terms of n_j, the number of samples the model has seen by the end of the j-th training epoch. This equation is the basis of the newly proposed scaling law.
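The two formulas referred to here are also missing from this copy. A sketch consistent with the definitions in the text would be the following, assuming that a half-life of τ means the utility parameter halves every τ repetitions, and that within epoch j the loss above a constant floor d changes at a rate proportional to itself and inversely proportional to n, with utility b_j. The exact notation in the paper may differ.

```latex
b_{k+1} = b \left(\tfrac{1}{2}\right)^{k/\tau} = b\,\delta^{k},
\qquad
\delta = \left(\tfrac{1}{2}\right)^{1/\tau}

y_k = a\, n_1^{\,b_1} \prod_{j=2}^{k} \left(\frac{n_j}{n_{j-1}}\right)^{b_j} + d
```

The product form comes from integrating epoch by epoch: over epoch j the loss above d is multiplied by (n_j / n_{j-1})^{b_j}, and b_j shrinks in magnitude as the same samples are repeated.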

Finally, there is one more layer of complexity: heterogeneous web data.

This leads to the theorem they give: given p data pools sampled uniformly at random, with respective utility and repetition parameters (b_1, τ_1), ..., (b_p, τ_p), the new repetition half-life of each bucket is τ̂ = p·τ. Furthermore, the effective utility value b_eff of the combined pool at the k-th repetition is the weighted average of the individual utility values, each decayed with the new per-bucket decay parameter.
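The formula for b_eff is missing from this copy. Read literally, the theorem statement suggests something like the following, where δ̂_i is the per-bucket decay parameter built from the new half-life. The equal weights 1/p (for equally sized pools) and the exponent convention, with k = 0 as the first pass, are assumptions rather than a quotation from the paper.

```latex
\hat{\tau}_i = p\,\tau_i,
\qquad
\hat{\delta}_i = \left(\tfrac{1}{2}\right)^{1/\hat{\tau}_i},
\qquad
b_{\mathrm{eff}}^{(k)} = \frac{1}{p} \sum_{i=1}^{p} b_i\,\hat{\delta}_i^{\,k}
```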

Finally, b_eff from the theorem above can be plugged into Equation (3) to estimate the loss when training on a combination of data pools.

Fitting scaling curves for data pools of different utility

The team explored the newly proposed scaling law experimentally.

Figure 4 shows the fitted scaling curves for the various data utility pools. The data utility metric used is the T-MARS score.

Column 2 of Figure 4 shows that the utility of each data pool decreases as the number of epochs increases. The team's key observations are:

1. Web data is heterogeneous and cannot be modeled by a single set of scaling parameters.

2. Different data pools have different data diversity.

3. Repeated high-quality data cannot keep up with fresh low-quality data.

Result: Estimating scaling laws for data combinations under QQT

The scaling laws of the individual data pools, that is, their parameters a, b, d, and τ, were fitted in the previous step. The goal here is to determine the most effective data curation strategy for a given training compute budget.

Using the theorem above and the scaling parameters of each data pool, the scaling laws of different pool combinations can now be estimated. For example, the Top-20% pool can be thought of as a combination of the Top-10% pool and the Top 10%-20% pool. The trends in these scaling curves can then be used to predict the Pareto-optimal data filtering strategy for a given compute budget.
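The selection procedure can be sketched in Python. Everything numeric here is a placeholder: the per-bucket (b, τ) values are made up, all buckets share the same a and d, pools are equally sized and mixed uniformly, the first pass is undecayed, and the loss follows one power-law segment per pass. The function names are hypothetical and are not taken from the paper's code.

```python
BUCKET_SIZE = 12.8e6  # 10% of the 128M-sample pool mentioned in the article

# Hypothetical (b, tau) values for successive 10% quality buckets, best first.
BUCKETS = [(-0.20, 1.0), (-0.19, 1.0), (-0.18, 1.0), (-0.17, 1.0)]


def b_eff(pools, k):
    """Mean utility of p uniformly mixed pools on pass k (k = 0 is the first pass).

    Each pool's utility halves every p * tau repetitions, following the theorem statement.
    """
    p = len(pools)
    return sum(b * 0.5 ** (k / (p * tau)) for b, tau in pools) / p


def predicted_loss(pools, budget, a=1.0, d=0.0):
    """Chain one power-law segment per pass until `budget` samples have been seen."""
    pool_size = BUCKET_SIZE * len(pools)
    seen = min(budget, pool_size)
    loss = a * seen ** b_eff(pools, 0)
    k = 1
    while seen < budget:
        nxt = min(budget, seen + pool_size)
        loss *= (nxt / seen) ** b_eff(pools, k)
        seen, k = nxt, k + 1
    return loss + d


def best_strategy(budget):
    """Number of top buckets to keep that minimizes the predicted loss."""
    candidates = range(1, len(BUCKETS) + 1)
    return min(candidates, key=lambda m: predicted_loss(BUCKETS[:m], budget))


for budget in (32e6, 128e6, 640e6):
    print(f"{budget / 1e6:.0f}M budget -> keep top {best_strategy(budget) * 10}%")
```

With these placeholder values, the most aggressive filter wins whenever the budget is smaller than every pool, while at a 640M budget a larger, less aggressively filtered pool is predicted to do better than the top 10% alone. The point is the procedure: every candidate combination is scored from per-bucket parameters without training on it.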

Figure 5 shows the scaling curves for different data combinations, evaluated on ImageNet.

It should be emphasized that these curves are estimated directly from the scaling parameters of each component pool using the theorem above. The team did not train on these data pool combinations to estimate the curves. The scatter points are actual test performance and serve to verify the estimates.

Two things stand out: (1) When the compute budget is low and the number of repetitions is small, aggressive filtering is the best strategy.

(2) Data curation cannot be compute-agnostic.

Scaling the scaling curves

The 2023 paper by Cherti et al., "Reproducible scaling laws for contrastive language-image learning," studied scaling laws for CLIP models. It trained dozens of models at compute scales ranging from 3B to 34B training samples, covering different models in the ViT family. Training models at this scale is very expensive. Cherti et al. (2023) aimed to fit scaling laws for this family of models, but the scaling curves for models trained on small datasets showed large errors.

The CMU team believes this is mainly because the reduction in utility caused by reusing data was not taken into account. So they estimated the errors of these models using the newly proposed scaling law.

Figure 6 shows the corrected scaling curves, which predict the errors with high accuracy.

This shows that the newly proposed scaling law holds for large models trained with 34B samples of compute, and that it does account for the diminishing utility of repeated data when predicting training outcomes.

For more technical details and experimental results, please refer to the original paper.

The above is the detailed content of Is it better to have more data or higher quality? This research can help you make your choice. For more information, please follow other related articles on the PHP Chinese website!

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

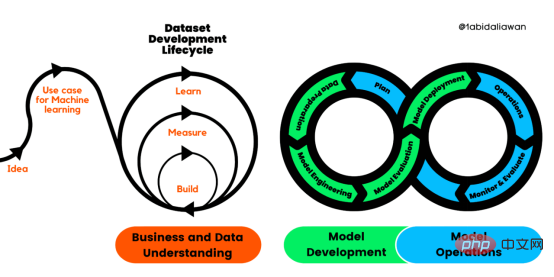

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

15年软件架构师经验总结:在ML领域,初学者踩过的五个坑Apr 11, 2023 pm 07:31 PM

15年软件架构师经验总结:在ML领域,初学者踩过的五个坑Apr 11, 2023 pm 07:31 PM数据科学和机器学习正变得越来越流行,这个领域的人数每天都在增长。这意味着有很多数据科学家在构建他们的第一个机器学习模型时没有丰富的经验,而这也是错误可能会发生的地方。近日,软件架构师、数据科学家、Kaggle 大师 Agnis Liukis 撰写了一篇文章,他在文中谈了谈在机器学习中最常见的一些初学者错误的解决方案,以确保初学者了解并避免它们。Agnis Liukis 拥有超过 15 年的软件架构和开发经验,他熟练掌握 Java、JavaScript、Spring Boot、React.JS

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

Atom editor mac version download

The most popular open source editor

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function