GPU-Accelerated Graphics Rendering in C++: High-Performance Secrets Revealed

C++ can use the GPU's stream-processing architecture to speed up graphics rendering through parallel processing. The typical workflow has four steps: data preparation (copy data from CPU memory to GPU memory), shader or kernel programming (write GLSL shaders or compute kernels that define what the GPU does), GPU execution (load the programs onto the GPU, where they run on its parallel processing units), and data copy (copy the results back to CPU memory when the host needs them). With CUDA, developers can use the GPU for fast image processing, such as blur effects.

In modern graphics rendering, the GPU (Graphics Processing Unit) plays a vital role: it improves rendering performance by running large numbers of calculations in parallel. As an efficient, low-level language, C++ can make effective use of the GPU to achieve high-speed rendering.

Principles

The GPU uses a stream-processing architecture built from a large number of parallel processing units (called CUDA cores on NVIDIA hardware, or processing elements in OpenCL terms). Groups of these units execute the same instruction on different data elements at the same time. This makes them efficient at processing large blocks of data and speeds up graphics tasks such as image processing, geometric calculations, and rasterization.
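To make the "same instruction, different data" idea concrete, here is a minimal CPU-side sketch of how a one-dimensional CUDA launch maps threads onto data elements. It is an illustration only: the helper names `blocksNeeded`, `globalIndex`, and `scaleAll` are hypothetical, and the nested loops stand in for work that a GPU would run in parallel.

```cpp
#include <cassert>
#include <vector>

// Number of blocks needed so that blocks * blockSize >= n (rounded up).
inline int blocksNeeded(int n, int blockSize) {
    assert(n >= 0 && blockSize > 0);
    return (n + blockSize - 1) / blockSize;
}

// The global index a CUDA thread would compute as
// blockIdx.x * blockDim.x + threadIdx.x.
inline int globalIndex(int blockIdx, int blockDim, int threadIdx) {
    return blockIdx * blockDim + threadIdx;
}

// Sequential stand-in for a kernel launch: every (block, thread) pair runs
// the same body on its own element. On a GPU these iterations run in parallel.
inline std::vector<float> scaleAll(const std::vector<float>& in, float factor,
                                   int blockSize) {
    const int n = static_cast<int>(in.size());
    std::vector<float> out(in.size());
    const int blocks = blocksNeeded(n, blockSize);
    for (int b = 0; b < blocks; ++b) {
        for (int t = 0; t < blockSize; ++t) {
            const int i = globalIndex(b, blockSize, t);
            if (i < n) {  // threads in the last block may fall past the end
                out[i] = in[i] * factor;
            }
        }
    }
    return out;
}
```

Rounding the block count up and guarding with `i < n` go together: the launch covers every element even when the data size is not a multiple of the block size, and the surplus threads do nothing.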

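Steps to render graphics using the GPU

- Data preparation: Copy graphics data from CPU memory to GPU memory.

- Shader programming: Write shaders in GLSL (OpenGL Shading Language) to define the behavior of the programmable stages of the graphics rendering pipeline. For general-purpose computation, compute kernels can instead be written with CUDA, OpenCL, or C++ AMP (a Microsoft data-parallel extension to C++, deprecated since Visual Studio 2022).

- GPU execution: Load the compiled shader or kernel onto the GPU. Shaders run through a graphics API such as OpenGL, while compute kernels are launched through CUDA or OpenCL. In both cases the work is spread across the GPU's parallel processing units.

- Data copy: When the CPU needs the results (for example, a processed image), copy them from GPU memory back to CPU memory. Frames rendered through a graphics API are normally displayed directly from GPU memory.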
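The data-preparation step is often more than a raw copy, because the buffer has to be in the layout and element type the GPU code expects. As a hedged sketch, assuming the source image is interleaved 8-bit BGR (the default layout of an image loaded with `cv::imread`) and the GPU code wants one `float` per pixel, a conversion could look like this. The function name `toGrayFloat` is hypothetical, and the luma weights are one common choice rather than the only one.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts interleaved 8-bit BGR pixels into a row-major buffer of floats
// in [0, 1], one value per pixel: out[row * cols + col].
inline std::vector<float> toGrayFloat(const std::vector<std::uint8_t>& bgr,
                                      int rows, int cols) {
    assert(rows >= 0 && cols >= 0);
    const std::size_t pixels = static_cast<std::size_t>(rows) * cols;
    assert(bgr.size() == pixels * 3);
    std::vector<float> out(pixels);
    for (std::size_t p = 0; p < pixels; ++p) {
        const float b = bgr[3 * p + 0];
        const float g = bgr[3 * p + 1];
        const float r = bgr[3 * p + 2];
        // Common luma weights (ITU-R BT.601); other weightings are possible.
        out[p] = (0.299f * r + 0.587f * g + 0.114f * b) / 255.0f;
    }
    return out;
}
```

The resulting buffer holds exactly `rows * cols * sizeof(float)` bytes, which is the size a subsequent host-to-device copy of a single-channel float image would use.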

Practical example

Image processing example based on CUDA

This example uses CUDA to process image pixels in parallel and apply a convolution (a blur effect), with one GPU thread computing each output pixel. The code example is below:
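Before the CUDA listing, a sequential reference version of the same operation can be useful for checking GPU output on small inputs. The sketch below is an illustration rather than part of the original example: the names `gaussianFilter2D` and `convolveReference` are hypothetical, and it assumes a single-channel, row-major `float` image in which pixels outside the image count as zero.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Builds a size x size Gaussian filter as the outer product of a normalized
// 1D Gaussian with itself, so the weights sum to 1.
inline std::vector<float> gaussianFilter2D(int size, float sigma) {
    assert(size > 0 && size % 2 == 1 && sigma > 0.0f);
    std::vector<float> k(size);
    float total = 0.0f;
    for (int i = 0; i < size; ++i) {
        const float x = static_cast<float>(i - size / 2);
        k[i] = std::exp(-(x * x) / (2.0f * sigma * sigma));
        total += k[i];
    }
    for (float& w : k) w /= total;
    std::vector<float> filter(static_cast<std::size_t>(size) * size);
    for (int i = 0; i < size; ++i)
        for (int j = 0; j < size; ++j)
            filter[i * size + j] = k[i] * k[j];
    return filter;
}

// Sequential reference: the loop body is the work one GPU thread would do
// for the output pixel at (row, col).
inline std::vector<float> convolveReference(const std::vector<float>& in,
                                            const std::vector<float>& filter,
                                            int rows, int cols, int filterSize) {
    assert(in.size() == static_cast<std::size_t>(rows) * cols);
    assert(filter.size() == static_cast<std::size_t>(filterSize) * filterSize);
    std::vector<float> out(in.size(), 0.0f);
    for (int row = 0; row < rows; ++row) {
        for (int col = 0; col < cols; ++col) {
            float sum = 0.0f;
            for (int i = 0; i < filterSize; ++i) {
                for (int j = 0; j < filterSize; ++j) {
                    const int r = row + i - filterSize / 2;
                    const int c = col + j - filterSize / 2;
                    if (r >= 0 && r < rows && c >= 0 && c < cols) {
                        sum += in[r * cols + c] * filter[i * filterSize + j];
                    }
                }
            }
            out[row * cols + col] = sum;
        }
    }
    return out;
}
```

On a constant image, interior pixels keep their value because the weights sum to 1, while border pixels come out darker because part of the filter falls outside the image. Comparing GPU output against this reference within a small floating-point tolerance is a simple way to catch indexing mistakes.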

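    #include <opencv2/opencv.hpp>
    #include <cuda_runtime.h>
    #include <cstdio>

    // Each thread computes one output pixel of a 2D convolution.
    // Pixels outside the image are treated as zero.
    __global__ void convolve(const float* in, float* out, const float* filter,
                             int rows, int cols, int filterSize) {
        int col = blockIdx.x * blockDim.x + threadIdx.x;  // x indexes columns
        int row = blockIdx.y * blockDim.y + threadIdx.y;  // y indexes rows
        if (row < rows && col < cols) {
            float sum = 0.0f;
            for (int i = 0; i < filterSize; i++) {
                for (int j = 0; j < filterSize; j++) {
                    int r = row + i - filterSize / 2;
                    int c = col + j - filterSize / 2;
                    if (r >= 0 && r < rows && c >= 0 && c < cols) {
                        sum += in[r * cols + c] * filter[i * filterSize + j];
                    }
                }
            }
            out[row * cols + col] = sum;
        }
    }

    int main() {
        // Load as single-channel grayscale, then convert to float in [0, 1].
        cv::Mat gray = cv::imread("image.jpg", cv::IMREAD_GRAYSCALE);
        if (gray.empty()) {
            std::fprintf(stderr, "Could not read image.jpg\n");
            return 1;
        }
        cv::Mat image;
        gray.convertTo(image, CV_32F, 1.0 / 255.0);

        // getGaussianKernel returns a 3x1 column; the outer product gives the 3x3 filter.
        cv::Mat k1d = cv::getGaussianKernel(3, 1.5, CV_32F);
        cv::Mat filter = k1d * k1d.t();

        size_t imageBytes = static_cast<size_t>(image.rows) * image.cols * sizeof(float);
        size_t filterBytes = 9 * sizeof(float);

        // Device buffers are raw pointers, not cv::Mat objects.
        float *d_image = nullptr, *d_filter = nullptr, *d_result = nullptr;
        cudaMalloc(&d_image, imageBytes);
        cudaMalloc(&d_filter, filterBytes);
        cudaMalloc(&d_result, imageBytes);

        cudaMemcpy(d_image, image.ptr<float>(), imageBytes, cudaMemcpyHostToDevice);
        cudaMemcpy(d_filter, filter.ptr<float>(), filterBytes, cudaMemcpyHostToDevice);

        // Round the grid up so images whose size is not a multiple of 16 are fully covered.
        dim3 dimBlock(16, 16);
        dim3 dimGrid((image.cols + dimBlock.x - 1) / dimBlock.x,
                     (image.rows + dimBlock.y - 1) / dimBlock.y);
        convolve<<<dimGrid, dimBlock>>>(d_image, d_result, d_filter, image.rows, image.cols, 3);
        cudaError_t err = cudaGetLastError();
        if (err != cudaSuccess) {
            std::fprintf(stderr, "Kernel launch failed: %s\n", cudaGetErrorString(err));
        }

        // Copy into a separate float image; this cudaMemcpy waits for the kernel to finish.
        cv::Mat result(image.rows, image.cols, CV_32F);
        cudaMemcpy(result.ptr<float>(), d_result, imageBytes, cudaMemcpyDeviceToHost);

        cudaFree(d_image);
        cudaFree(d_filter);
        cudaFree(d_result);

        // imshow displays float images assuming values in [0, 1].
        cv::imshow("Blurred Image", result);
        cv::waitKey(0);
        return 0;
    }

Conclusion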

By combining C++ with GPU acceleration, developers can use the GPU's parallelism for high-performance graphics rendering. For data-parallel work such as image processing, geometric calculations, and rasterization, the GPU can substantially speed up an application's graphics processing, provided the computation is large enough to outweigh the cost of copying data between CPU and GPU memory.

使用C++实现机器学习算法:GPU加速的最佳方法Jun 02, 2024 am 10:06 AM

使用C++实现机器学习算法:GPU加速的最佳方法Jun 02, 2024 am 10:06 AMCUDA可加速C++中的ML算法,提供更快的训练时间、更高的精度和可扩展性。具体步骤包括:定义数据结构和内核、初始化数据和模型、分配GPU内存、将数据复制到GPU、创建CUDA上下文和流、训练模型、将模型复制回主机、清理。

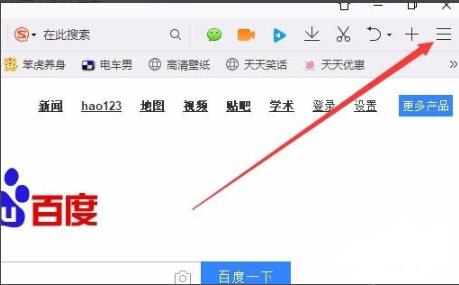

Win10启用GPU加速的步骤Dec 27, 2023 am 08:47 AM

Win10启用GPU加速的步骤Dec 27, 2023 am 08:47 AM在电脑上如果开启了gpu加速功能的话可以让显卡的性能变得更加强劲浏览网站或者看电影可以得到更好的体验,但是很多的用户还不知道该怎么开启,下面就一起来看看吧。win10gpu加速怎么开启:方法一:QQ浏览器下载地址>>1、打开浏览器点击右上角的“菜单”。2、在菜单中选择“设置”。3、点击任务栏上的“高级”。4、点击“开启GPU加速渲染网页”下拉中的“开启”即可。方法二:360浏览器下载地址>>1、点击右上方任务栏的“工具”。2、在下拉菜单中点击“选项”。3、点击左侧任务栏中的“实验室

如何开启Win10 2004版本的GPU加速功能Dec 25, 2023 pm 10:39 PM

如何开启Win10 2004版本的GPU加速功能Dec 25, 2023 pm 10:39 PMwin10的最新版本2004版更新了很多的特色功能,比较受到关注的就是可以优化游戏的gpu加速功能,那么这个功能该怎么开启呢?下面就来看看详细方法吧。win102004gpu加速怎么开启:1、按下“win+i”打开windows设置。2、在设置中点击“系统”选项。3、点击左侧任务栏中的“显示”。4、在右侧的界面中下滑点击“图形设置”。5、将“硬件加速GPU计划”下面的开关打开即可。

学习Go语言的游戏开发和图形渲染Nov 30, 2023 am 11:14 AM

学习Go语言的游戏开发和图形渲染Nov 30, 2023 am 11:14 AM随着游戏行业的日益发展和人们对游戏品质要求的提高,越来越多的游戏开发者开始尝试使用更先进的编程语言和图形渲染技术来构建游戏。其中,Go语言作为一种高效、简洁、安全的现代编程语言,越来越受到游戏开发者的欢迎。本文将介绍学习Go语言游戏开发和图形渲染的步骤和方法。一、了解Go语言Go语言是由谷歌开发的一种开源编程语言。它是一种静态类型语言,具有内存自动管理和垃圾

图形渲染中的渲染速度问题Oct 09, 2023 am 08:22 AM

图形渲染中的渲染速度问题Oct 09, 2023 am 08:22 AM图形渲染中的渲染速度问题,需要具体代码示例摘要:随着计算机图形渲染技术的不断发展,人们对于渲染速度的要求也越来越高。本文将通过具体的代码示例,介绍图形渲染中可能出现的速度问题,并提出一些优化方法来提升渲染速度。一、背景介绍图形渲染是计算机图形学中的一个重要环节,它将三维的模型数据转化为二维的图像。渲染速度直接影响用户体验,尤其是在实时渲染的应用中,如电子游戏

Win10 2004版本中的GPU加速功能是什么?Jan 01, 2024 pm 07:53 PM

Win10 2004版本中的GPU加速功能是什么?Jan 01, 2024 pm 07:53 PM在我们升级更新使用win10操作系统的时候,对于微软公司最新更新的win102004版本,相信很多小伙伴都一直在关注它的功能更新。那么对于win102004版本更新的gpu加速是什么,小编觉得这个功能主要是加强了显卡的优化,是我们在看视频玩游戏的时候可以有更好的用户体验。那么现在就来和小编一起了解一下吧~win102004版本gpu加速是什么1.在Windows10版本2004中,可以预期WindowsLinux子系统的系统恢复和整体性能将得到显著改善。2.微软还通过基于聊天的新界面改善了Cor

图形渲染中的实时性问题Oct 08, 2023 pm 07:19 PM

图形渲染中的实时性问题Oct 08, 2023 pm 07:19 PM图形渲染在计算机图形学中起着至关重要的作用,它将数据转化为可视化的图像展示给用户。然而,在实时图形渲染中,需要以每秒60帧的速度持续更新图像,这就给计算机的性能和算法的设计提出了更高的要求。本文将讨论实时图形渲染中的实时性问题,并提供一些具体的代码示例。实时图形渲染中的关键问题之一是如何高效地计算和更新每一帧的图像。以下是一个简单的渲染循环示例,用于说明渲染

C++图形渲染:从像素到图像的精通之旅Jun 03, 2024 pm 06:17 PM

C++图形渲染:从像素到图像的精通之旅Jun 03, 2024 pm 06:17 PM图形渲染中的像素操作:像素:图像的基本单位,表示颜色值(C++中使用SDL_Color结构)。图像创建:使用SFML的sf::Image类创建位图图像。像素访问和修改:使用getPixel()和setPixel()函数访问和修改像素。实战案例:绘制线条,使用布雷森汉姆算法通过像素数据绘制直线。结论:掌握像素操作可创建各种视觉效果,C++和SFML简化了应用程序中的图形渲染。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SublimeText3 Linux new version

SublimeText3 Linux latest version

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 English version

Recommended: Win version, supports code prompts!