Hand-tearing Llama3 layer 1: Implementing llama3 from scratch

1. Architecture of Llama3

In this series of articles, we implement llama3 from scratch.

The overall architecture of Llama3:

Picture

The model parameters of Llama 3:

Let's take a look at the actual values of these parameters in the Llama 3 model.

Picture

[1] Context window (context-window)

When the Llama class is instantiated, the variable max_seq_len defines the context window. The class has other parameters, but this one is the most directly related to the transformer model. Here max_seq_len is 8K (8,192 tokens).

Picture

[2] Vocabulary-size and Attention Layers

The Transformer class defines a model with a given vocabulary size and number of layers. The vocabulary is the set of tokens (words, sub-word pieces, punctuation) that the model can recognize and process. Attention layers here refer to the transformer blocks (each a combination of an attention layer and a feed-forward layer) used in the model.

Picture

Based on these numbers, Llama 3 has a vocabulary of 128K tokens, which is quite large, and 32 transformer blocks.

[3] Feature-dimension and attention-heads

The feature dimension and the attention heads are the parameters of the self-attention module. The feature dimension is the size of each token's vector in the embedding space, while the attention heads contain the QK modules that drive the self-attention mechanism in transformers.

Picture

[4] Hidden Dimensions

The hidden dimension is the size of the hidden layer inside the feed-forward network (FFN). A feed-forward network contains one or more hidden layers, and their dimensions determine the capacity and complexity of the network. In Transformer models the FFN hidden dimension is usually a multiple of the feature dimension, which increases the representational capacity of the model. In Llama 3 the configured multiplier (ffn_dim_multiplier) is 1.3, but it is not applied to the feature dimension directly: it scales 2/3 of 4 x 4096, and the result is rounded up to a multiple of 1024. This gives a hidden dimension of 14336, or 3.5 times the feature dimension, rather than 4096 x 1.3 ≈ 5325. Note that "hidden layers" and "hidden dimension" are two different concepts.

A larger hidden dimension allows the network to create and manipulate richer representations internally before projecting them back to the smaller output dimension.
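How the hidden dimension follows from the feature dimension can be checked with a few lines of arithmetic. The sketch below is an illustration, not the model's own code: the function name `ffn_hidden_dim` is hypothetical, the values 4096, 1024 and 1.3 are the ones reported in the model's params.json, and the order of operations (2/3 of 4 x dim, then the multiplier, then rounding up) is assumed to match Meta's reference implementation.

```python
from typing import Optional


def ffn_hidden_dim(dim: int, multiple_of: int,
                   ffn_dim_multiplier: Optional[float] = None) -> int:
    """Width of the feed-forward hidden layer (assumed sizing rule, see text)."""
    hidden = 4 * dim                  # classic transformer starting point
    hidden = int(2 * hidden / 3)      # shrink to 2/3, since the gated FFN has three matrices
    if ffn_dim_multiplier is not None:
        hidden = int(ffn_dim_multiplier * hidden)
    # round up to the next multiple of `multiple_of`
    return multiple_of * ((hidden + multiple_of - 1) // multiple_of)


hidden_dim = ffn_hidden_dim(dim=4096, multiple_of=1024, ffn_dim_multiplier=1.3)
print(hidden_dim)          # 14336
print(hidden_dim / 4096)   # 3.5
```

Under these assumptions the hidden layer is 3.5 times as wide as the feature dimension, which is why multiplying 4096 by 1.3 directly gives a misleading number.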

Picture

[5] Combine the above parameters into Transformer

The first matrix is the input feature matrix, which the attention layer processes to generate attention-weighted features. In this image the input feature matrix is only 5 x 3, but in the real Llama 3 model it grows to 8K x 4096, which is huge.

Next comes the hidden layer of the feed-forward network, which widens each token's vector to the hidden dimension and then falls back to 4096 in the last layer. For Llama 3 8B the hidden dimension is 14336; the value 5325 comes from multiplying 4096 by 1.3 directly, which skips the 2/3 x 4 scaling and the rounding up to a multiple of 1024.

Picture

[6] Multi-layer Transformer block

Llama 3 stacks 32 of the transformer blocks described above. The output of one block is passed to the next block until the last one is reached.

Picture

[7] Putting it all together

Once we have all the parts above, it's time to put them together and see how they create the Llama effect.

Picture

Step 1: First we have the input matrix of size 8K (context window) x 128K (vocabulary size), which can be thought of as one one-hot row per token position. An embedding step converts this high-dimensional matrix into a low-dimensional one.

Step 2: In this case the low-dimensional width is 4096, which is the feature dimension of the Llama model we saw earlier.
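Steps 1 and 2 can be made concrete with a toy example. The sketch below uses made-up sizes (a vocabulary of 6 and a feature dimension of 4 standing in for 128K and 4096) and a hypothetical embedding table, and shows that multiplying a one-hot row by the embedding matrix gives the same result as simply looking up that token's row.

```python
vocab_size, dim = 6, 4   # toy sizes standing in for 128K and 4096

# Hypothetical embedding table: one row of `dim` numbers per vocabulary entry.
embedding = [[float(v * 10 + d) for d in range(dim)] for v in range(vocab_size)]
tokens = [3, 0, 5]


def one_hot(index, size):
    """A row of zeros with a single 1.0 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def row_times_matrix(row, matrix):
    """Multiply a row vector [rows] by a matrix [rows x cols]."""
    cols = len(matrix[0])
    return [sum(row[r] * matrix[r][c] for r in range(len(row))) for c in range(cols)]


via_one_hot = [row_times_matrix(one_hot(t, vocab_size), embedding) for t in tokens]
via_lookup = [embedding[t] for t in tokens]

print(via_one_hot == via_lookup)             # True
print(len(via_lookup), len(via_lookup[0]))   # 3 4
```

In practice only the lookup is performed; the one-hot matrix is a way of picturing the step, not something a real implementation would materialize.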

In neural networks, increasing and reducing dimensionality are common operations, and each has a different purpose and effect.

Dimensionality increase is usually to increase the capacity of the model so that it can capture more complex features and patterns. When the input data is mapped into a higher dimensional space, different feature combinations can be more easily distinguished by the model. This is especially useful when dealing with non-linear problems, as it can help the model learn more complex decision boundaries.

Dimensionality reduction is to reduce the complexity of the model and the risk of overfitting. By reducing the dimensionality of the feature space, the model can be forced to learn more refined and generalized feature representations. In addition, dimensionality reduction can be used as a regularization method to help improve the generalization ability of the model. In some cases, dimensionality reduction can also reduce computational costs and improve model operating efficiency.

In practical applications, the strategy of dimensionality increase and then dimensionality reduction can be regarded as a process of feature extraction and transformation. In this process, the model first explores the intrinsic structure of the data by increasing the dimensionality, and then extracts the most useful features and patterns by reducing the dimensionality. This method can help the model avoid overfitting to the training data while maintaining sufficient complexity.

Step 3: These features are processed by the Transformer block, first by the attention layer and then by the FFN layer. The attention layer mixes information across token positions (horizontally), while the FFN layer transforms each token's vector across its feature dimensions (vertically).

Step 4: Step 3 is repeated for each of the 32 Transformer blocks. The resulting matrix has the same width as the feature dimension.

Step 5: Finally, this matrix is projected back to the vocabulary size, 128K, so that the model can score and select among the tokens available in the vocabulary.

This is how Llama 3 scores high on those benchmarks and creates the Llama 3 effect.

Below is a brief summary of several terms that are easily confused:

1. max_seq_len (maximum sequence length)

This is the maximum number of tokens the model can accept in a single pass.

In the Llama 3 8B model this parameter is set to 8,192 tokens, that is, Context Window Size = 8K. The model can therefore consider at most 8,192 tokens at once, which matters for understanding long texts and for keeping the context of long conversations.

2. Vocabulary-size (vocabulary)

This is the number of distinct tokens the model can recognize, including word pieces, punctuation, and special characters. The vocabulary of the model has 128,256 entries, written as Vocabulary-size = 128K.

3. Attention Layers

A main component of the Transformer model. It processes the input by learning which parts of it are most important (i.e. which tokens are "attended" to). A model may have multiple such layers, each trying to understand the input from a different perspective.

The Llama 3 8B model contains 32 processing layers, that is, Number of Layers = 32. Each of these layers contains an attention layer together with other types of network layers, and each processes the input from a different perspective.

4. transformer block

A module made up of several different layers, usually at least one attention layer and a feed-forward network. A model can have multiple transformer blocks. The blocks are connected sequentially, so the output of each block is the input of the next. A transformer block can also be called a decoder layer.

In the context of Transformer models, saying that a model has "32 layers" is usually equivalent to saying that it has "32 Transformer blocks". Each Transformer block usually contains a self-attention layer and a feed-forward network layer, and these two sub-layers together form one complete processing unit, or "layer".

Therefore, when we say the model has 32 Transformer blocks, we are describing a model composed of 32 such processing units, each of which performs self-attention followed by feed-forward network processing. This wording emphasizes the layered structure of the model and its processing capabilities at each level.

In summary, "32 layers" and "32 Transformer blocks" are essentially synonymous when describing the structure of a Transformer model. Both mean that the model contains 32 successive processing stages, each consisting of self-attention and feed-forward operations.

5. Feature-dimension (feature dimension)

This is the dimension of each vector when the input token is represented as a vector in the model.

Each token is converted into a vector containing 4096 features in the model, that is, Feature-dimension = 4096. This high dimension enables the model to capture richer semantic information and contextual relationships.

6. Attention-Heads

In each Attention Layer, there can be multiple Attention-Heads, and each head independently analyzes the input data from different perspectives.

Each Attention Layer contains 32 independent Attention Heads, that is, Number of Attention Heads = 32. These heads analyze input data from different aspects and jointly provide more comprehensive data analysis capabilities.

7. Hidden Dimensions

This usually refers to the width of the layer in the feed-forward network, that is, the number of neurons in that layer. Typically the hidden dimension is larger than the feature dimension, which allows the model to create a richer data representation internally.

In the feed-forward networks of Llama 3 8B the hidden layer has 14336 units, that is, Hidden Dimensions = 14336 (not 4096 x 1.3 ≈ 5325: the 1.3 multiplier is applied to 2/3 of 4 x 4096 and the result is rounded up to a multiple of 1024). This is larger than the feature dimension, allowing the model to perform richer feature transformations between internal layers.

Relationships and values:

Relationship between Attention Layers and Attention-Heads: Each Attention Layer can contain multiple Attention-Heads.

Numerical relationship: A model may have multiple transformer blocks, each block contains an Attention Layer and one or more other layers. Each Attention Layer may have multiple Attention-Heads. In this way, the entire model performs complex data processing in different layers and heads.

The official download script for the Llama 3 model is available at: https://llama.meta.com/llama-downloads/

2. View the model

The following code shows how to use the tiktoken library to load and use a tokenizer based on Byte Pair Encoding (BPE). This tokenizer turns text into the integer tokens that the model consumes.

We enter "hello world!" and check that the tokenizer can encode it and decode it back.

from pathlib import Path
import tiktoken
from tiktoken.load import load_tiktoken_bpe
import torch
import json
import matplotlib.pyplot as plt

tokenizer_path = "Meta-Llama-3-8B/tokenizer.model"
# The special-token names were stripped from this page during extraction;
# they are restored here to the names used by the Llama 3 tokenizer.
special_tokens = [
    "<|begin_of_text|>",
    "<|end_of_text|>",
    "<|reserved_special_token_0|>",
    "<|reserved_special_token_1|>",
    "<|reserved_special_token_2|>",
    "<|reserved_special_token_3|>",
    "<|start_header_id|>",
    "<|end_header_id|>",
    "<|reserved_special_token_4|>",
    "<|eot_id|>",  # end of turn
] + [f"<|reserved_special_token_{i}|>" for i in range(5, 256 - 5)]

# Load the BPE merge table and build the tokenizer around it.
mergeable_ranks = load_tiktoken_bpe(tokenizer_path)
tokenizer = tiktoken.Encoding(
    name=Path(tokenizer_path).name,
    pat_str=r"(?i:'s|'t|'re|'ve|'m|'ll|'d)|[^\r\n\p{L}\p{N}]?\p{L}+|\p{N}{1,3}| ?[^\s\p{L}\p{N}]+[\r\n]*|\s*[\r\n]+|\s+(?!\S)|\s+",
    mergeable_ranks=mergeable_ranks,
    # Special tokens get the ids directly after the regular vocabulary.
    special_tokens={token: len(mergeable_ranks) + i for i, token in enumerate(special_tokens)},
)

tokenizer.decode(tokenizer.encode("hello world!"))

Picture
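To give a feel for what a BPE tokenizer does with a table of merge ranks, here is a minimal sketch. It is not how tiktoken is implemented internally: the function `bpe_encode` and the toy rank table `toy_ranks` are hypothetical, and the merging rule (always merge the adjacent pair with the lowest rank) is a simplification chosen for illustration.

```python
def bpe_encode(word: bytes, ranks: dict) -> list:
    """Greedy byte-pair merging: repeatedly merge the adjacent pair with the lowest rank."""
    parts = [bytes([b]) for b in word]           # start from single bytes
    while len(parts) > 1:
        best = None                              # (rank, position) of the best mergeable pair
        for i in range(len(parts) - 1):
            rank = ranks.get(parts[i] + parts[i + 1])
            if rank is not None and (best is None or rank < best[0]):
                best = (rank, i)
        if best is None:                         # nothing left to merge
            break
        i = best[1]
        parts[i:i + 2] = [parts[i] + parts[i + 1]]
    return [ranks[p] for p in parts]             # map each piece to its token id


# Hypothetical toy vocabulary: single bytes first, then learned merges.
toy_ranks = {b"h": 0, b"e": 1, b"l": 2, b"o": 3, b"ll": 4, b"he": 5, b"hell": 6}

print(bpe_encode(b"hello", toy_ranks))  # [6, 3]
```

Here "hello" is merged step by step into the pieces "hell" and "o". The real tokenizer applies the same idea with a table of roughly 128K entries loaded from tokenizer.model.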

model = torch.load("Meta-Llama-3-8B/consolidated.00.pth")
print(json.dumps(list(model.keys())[:20], indent=4))

Picture

- "tok_embeddings.weight": The model has a token embedding layer that converts input tokens into fixed-dimensional vectors. This is the first step in most natural language processing models.

- "layers.0.attention..." and "layers.1.attention...": These parameters belong to successive layers, each of which contains an attention module. In this module, wq, wk, wv, and wo are the weight matrices of the query, key, value, and output projections respectively. This is the core component of the Transformer model and captures the relationships between different parts of the input sequence.

- "layers.0.feed_forward..." and "layers.1.feed_forward...": Each layer also contains a feed-forward network, which consists of linear transformations with a nonlinear activation function in between. w1, w2, and w3 are the weights of the three linear layers in this network; in Llama's gated (SwiGLU) feed-forward design, w1 and w3 project up to the hidden dimension and w2 projects back down.

- "layers.0.attention_norm.weight" and "layers.1.attention_norm.weight": Each layer has a normalization layer for its attention module. In Llama 3 this is RMS normalization (RMSNorm), applied to the input of the attention module to stabilize training.

- "layers.0.ffn_norm.weight" and "layers.1.ffn_norm.weight": There is likewise an RMS normalization layer applied to the input of the feed-forward network.

The output of the code above matches the picture below, which shows one transformer block in Llama 3.

Picture
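The role of the three feed-forward matrices is easier to see in code. The following is a minimal pure-Python sketch of a gated feed-forward layer, assuming the SwiGLU arrangement w2(silu(w1 x) * w3 x); the helper names `silu`, `linear` and `feed_forward` and the tiny weight values are made up for illustration and are not taken from the checkpoint.

```python
import math


def silu(x: float) -> float:
    """SiLU activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def linear(weight, x):
    """y = W x for a weight stored as [out_features][in_features]."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def feed_forward(x, w1, w2, w3):
    gate = [silu(g) for g in linear(w1, x)]       # gate branch (w1)
    up = linear(w3, x)                            # up-projection branch (w3)
    hidden = [g * u for g, u in zip(gate, up)]    # element-wise product in the hidden dimension
    return linear(w2, hidden)                     # project back to the feature dimension (w2)


# Toy sizes: feature dimension 2, hidden dimension 3.
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w3 = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]
w2 = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]

out = feed_forward([1.0, 2.0], w1, w2, w3)
print(len(out))  # 2
```

Note the storage convention: w1 and w3 have shape [hidden, feature] and w2 has shape [feature, hidden], so the input and output of the layer have the same width.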

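with open("Meta-Llama-3-8B/params.json", "r") as f:
    config = json.load(f)
config

Picture

- 'dim': 4096 - The feature dimension of the model, i.e. the size of each token vector as it moves through the model.

- 'n_layers': 32 - The number of layers. Llama 3 is a decoder-only model, so this is the number of stacked Transformer (decoder) blocks.

- 'n_heads': 32 - The number of heads in the self-attention mechanism. Multi-head attention is one of the key features of the Transformer model; it lets the model capture information in different representation subspaces in parallel.

- 'n_kv_heads': 8 - The number of heads used for keys and values. This is not part of the original Transformer configuration; it indicates grouped-query attention, in which the 32 query heads share 8 key/value heads (4 query heads per key/value head).

- 'vocab_size': 128256 - The size of the vocabulary, i.e. the total number of distinct tokens the model can recognize.

- 'multiple_of': 1024 - The hidden dimension of the feed-forward network is rounded up to a multiple of this value, which keeps the matrix sizes hardware-friendly.

- 'ffn_dim_multiplier': 1.3 - The multiplier applied when sizing the feed-forward network (FFN) that follows each attention layer.

- 'norm_eps': 1e-05 - The epsilon value used in the normalization layers (RMSNorm here) to avoid division by zero. This is a small numerical-stability trick.

- 'rope_theta': 500000.0 - The base frequency of the rotary position embedding (RoPE), which Llama 3 uses to encode token positions. It is not part of the original Transformer configuration.

We use this configuration to infer details of the model, such as:

- The model has 32 Transformer layers

- Each multi-head attention block has 32 heads

- The size of the vocabulary, and so on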
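As an example of what can be inferred from this configuration, the sketch below estimates the total number of parameters. Several things here are assumptions rather than facts read from the checkpoint: the feed-forward width of 14336 (derived from ffn_dim_multiplier and multiple_of), the presence of one final normalization layer, and a separate output projection with the same shape as the embedding table.

```python
config = {"dim": 4096, "n_layers": 32, "n_heads": 32, "n_kv_heads": 8, "vocab_size": 128256}
ffn_hidden = 14336  # assumption: FFN width derived from ffn_dim_multiplier and multiple_of

dim = config["dim"]
head_dim = dim // config["n_heads"]               # size of one attention head
kv_dim = config["n_kv_heads"] * head_dim          # total width of the key/value projections

attention = 2 * dim * dim + 2 * kv_dim * dim      # wq and wo, plus wk and wv
feed_forward = 3 * dim * ffn_hidden               # w1, w2, w3
norms = 2 * dim                                   # attention_norm and ffn_norm
per_layer = attention + feed_forward + norms

embeddings = config["vocab_size"] * dim           # tok_embeddings
output_head = config["vocab_size"] * dim          # assumed projection back to the vocabulary
final_norm = dim                                  # assumed final normalization layer

total = embeddings + config["n_layers"] * per_layer + final_norm + output_head
print(head_dim, kv_dim)  # 128 1024
print(total)             # 8030261248
```

Under these assumptions the count comes to about 8.03 billion, which is consistent with the "8B" in the model name.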

dim = config["dim"]
n_layers = config["n_layers"]
n_heads = config["n_heads"]
n_kv_heads = config["n_kv_heads"]
vocab_size = config["vocab_size"]
multiple_of = config["multiple_of"]
ffn_dim_multiplier = config["ffn_dim_multiplier"]
norm_eps = config["norm_eps"]
rope_theta = torch.tensor(config["rope_theta"])

Picture

Converting text to tokens

The code is as follows:

prompt = "the answer to the ultimate question of life, the universe, and everything is "
# 128000 is the id of the begin-of-text special token.
tokens = [128000] + tokenizer.encode(prompt)
print(tokens)
tokens = torch.tensor(tokens)
prompt_split_as_tokens = [tokenizer.decode([token.item()]) for token in tokens]
print(prompt_split_as_tokens)

[128000, 1820, 4320, 311, 279, 17139, 3488, 315, 2324, 11, 279, 15861, 11, 323, 4395, 374, 220]
['<|begin_of_text|>', 'the', ' answer', ' to', ' the', ' ultimate', ' question', ' of', ' life', ',', ' the', ' universe', ',', ' and', ' everything', ' is', ' ']

Converting tokens to their embeddings

After this step, our [17x1] tokens become [17x4096], i.e. 17 embeddings of length 4096 (one per token).

The figure below verifies that the sentence we entered is 17 tokens.

Picture

The code is as follows:

embedding_layer = torch.nn.Embedding(vocab_size, dim)
# Copy the pretrained embedding table into the layer.
embedding_layer.weight.data.copy_(model["tok_embeddings.weight"])
token_embeddings_unnormalized = embedding_layer(tokens).to(torch.bfloat16)
token_embeddings_unnormalized.shape

Picture

3. Building the first layer of the Transformer

Next we normalize the embeddings using RMS normalization, which is this position in the figure:

Picture

The formula used is as follows:

Picture

The code is as follows:

# def rms_norm(tensor, norm_weights):
#     rms = (tensor.pow(2).mean(-1, keepdim=True) + norm_eps)**0.5
#     return tensor * (norm_weights / rms)
def rms_norm(tensor, norm_weights):
    return (tensor * torch.rsqrt(tensor.pow(2).mean(-1, keepdim=True) + norm_eps)) * norm_weights

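This code defines a function named rms_norm that applies RMS (root mean square) normalization to an input tensor. It takes two parameters: tensor, the input to normalize, and norm_weights, the learned weights applied after normalization.

The function works as follows:

- First, it squares each element of the input tensor (tensor.pow(2)).

- Then it takes the mean of the squared tensor along the last dimension (-1) while keeping the number of dimensions (keepdim=True), which gives the mean square of each token vector.

- Next, it adds a small positive number norm_eps to the mean square (to avoid division by zero) and takes the reciprocal of the square root (torch.rsqrt), giving the reciprocal of the RMS.

- Finally, it multiplies the input tensor by the reciprocal of the RMS and then by the normalization weights norm_weights to obtain the normalized tensor.

After normalization our data still has the shape [17x4096], the same as the embedding output, except that the values are now normalized.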
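The same steps can be followed by hand on a single short vector. This is a minimal pure-Python sketch that mirrors the torch version; the two-element input and the weights of 1.0 are made up so that the arithmetic is easy to check.

```python
import math


def rms_norm(vector, weights, eps=1e-5):
    """RMS-normalize one vector and scale it element-wise by `weights`."""
    mean_square = sum(x * x for x in vector) / len(vector)   # mean of the squares
    inv_rms = 1.0 / math.sqrt(mean_square + eps)             # reciprocal of the RMS
    return [x * inv_rms * w for x, w in zip(vector, weights)]


row = [3.0, 4.0]                      # mean square = (9 + 16) / 2 = 12.5
normed = rms_norm(row, [1.0, 1.0])

# After normalization the RMS of the vector is (almost exactly) 1.
rms_after = math.sqrt(sum(x * x for x in normed) / len(normed))
print(round(rms_after, 3))  # 1.0
```

The vector keeps its direction and length of 2 elements; only its scale changes, which is why the [17x4096] shape is unchanged by this step.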

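token_embeddings = rms_norm(token_embeddings_unnormalized, model["layers.0.attention_norm.weight"])
token_embeddings.shape

Picture

Picture

Next, we introduce the implementation of the attention mechanism, which is the part marked with the red box in the figure below:

Picture

Picture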

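1. Input sentence

- Description: This is our input sentence.

- Explanation: The input sentence is represented as a matrix X, where each row is the embedding vector of one word.

2. Embed each word

- Description: We embed each word.

- Explanation: Each word in the input sentence is converted into a high-dimensional vector, and these vectors make up the matrix X.

3. Split into 8 heads

- Description: Split the matrix X into 8 heads. We multiply X by the weight matrices W^Q, W^K and W^V.

- Explanation: Multi-head attention splits the work across multiple heads (8 in this figure; Llama 3 uses 32 query heads), each with its own query, key and value matrices. Concretely, the input matrix X is multiplied by the query weight matrix W^Q, the key weight matrix W^K and the value weight matrix W^V to obtain the query matrix Q, the key matrix K and the value matrix V.

4. Compute attention

- Description: Compute attention using the resulting query, key and value matrices.

- Explanation: For each head, the query matrix Q, the key matrix K and the value matrix V are used to compute attention scores. The steps are:

Compute the dot product of Q and K.

Scale the dot-product result.

Apply the softmax function to obtain the attention weights.

Multiply the attention weights by the value matrix V to obtain the output matrix Z.

5. Concatenate the result matrices

- Description: Concatenate the resulting Z matrices, then multiply the concatenated matrix by the weight matrix W^O to obtain the output of the layer.

- Explanation: The output matrices Z of all heads are concatenated into one matrix, which is then multiplied by the output weight matrix W^O to obtain the final output matrix Z.

Additional notes

- Shapes of the query, key, value and output weights: when loading them, note that their shapes are [4096x4096], [1024x4096], [1024x4096] and [4096x4096] respectively.

- Parallelizing the attention-head multiplications: bundling the heads together helps parallelize the multiplication across attention heads.

This figure shows how multi-head attention is implemented in the Transformer model: starting from the embedding of the input sentence, through the split into heads and the attention computation, to the concatenation of the results and the final output. Each step shows how the final output matrix Z is produced from the input matrix X.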
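The attention computation of step 4 can be sketched for a single head in plain Python. This is an illustration under simplifying assumptions: the matrices Q, K and V are tiny made-up examples, and the causal mask that a decoder model such as Llama applies (so that a token cannot attend to later tokens) is left out to keep the four steps visible.

```python
import math


def softmax(scores):
    """Turn a list of scores into weights that sum to 1."""
    m = max(scores)                                   # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Single-head scaled dot-product attention (no masking)."""
    d = len(Q[0])
    Z = []
    for q in Q:
        # dot product of this query with every key, scaled by sqrt(d)
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        # weighted sum of the value vectors
        Z.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return Z


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 1.0], [1.0, 1.0]]      # identical keys, so every query attends equally to both
V = [[2.0, 0.0], [0.0, 2.0]]

Z = attention(Q, K, V)
print(Z)  # [[1.0, 1.0], [1.0, 1.0]]
```

Because both keys are identical, the softmax gives each value a weight of 0.5, and every output row is simply the average of the two value vectors.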

When we load the query, key, value and output weights from the model, we notice that their shapes are [4096x4096], [1024x4096], [1024x4096] and [4096x4096].

At first glance this looks odd, because ideally we would want separate q, k, v and o weights for each head.

print(
    model["layers.0.attention.wq.weight"].shape,
    model["layers.0.attention.wk.weight"].shape,
    model["layers.0.attention.wv.weight"].shape,
    model["layers.0.attention.wo.weight"].shape
)

Picture

- The shape of the query weight matrix (wq.weight) is [4096, 4096].

- The shape of the key weight matrix (wk.weight) is [1024, 4096].

- The shape of the value weight matrix (wv.weight) is [1024, 4096].

- The shape of the output weight matrix (wo.weight) is [4096, 4096].

The output shows that:

- The query (Q) and output (O) weight matrices have the same shape, [4096, 4096]: both map 4096 input features to 4096 output features.

- The key (K) and value (V) weight matrices also share a shape, [1024, 4096]: they take 4096 input features but produce only 1024 output features.

These shapes follow from the configuration. Each head has dimension 4096 / 32 = 128. The query and output projections cover all 32 heads (32 x 128 = 4096), whereas the key and value projections cover only n_kv_heads = 8 heads (8 x 128 = 1024), each shared by a group of 4 query heads. Using fewer key and value heads reduces computation and memory consumption, in particular the size of the cached keys and values during generation, while the queries keep their full dimensionality.
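The relationship between the head counts and the weight shapes can be written out as a short calculation. The sketch below uses the values from params.json; the mapping from query heads to key/value heads (consecutive groups of query heads sharing one key/value head) is an assumption about how grouped-query attention is laid out, and the variable names are chosen for illustration.

```python
n_heads, n_kv_heads, dim = 32, 8, 4096

head_dim = dim // n_heads               # 128 features per head
group_size = n_heads // n_kv_heads      # 4 query heads share one key/value head

wq_rows = n_heads * head_dim            # output features of wq (and input features of wo)
wk_rows = n_kv_heads * head_dim         # output features of wk and wv

# Assumed layout: query heads 0-3 use key/value head 0, heads 4-7 use head 1, and so on.
kv_head_for_query = [q // group_size for q in range(n_heads)]

print(wq_rows, wk_rows)          # 4096 1024
print(kv_head_for_query[:8])     # [0, 0, 0, 0, 1, 1, 1, 1]
```

This reproduces the first dimensions seen in the checkpoint: 4096 rows for the query weights and 1024 rows for the key and value weights.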

Let us use the sentence "I appreciate Li Hongzhang" as an example to walk through the attention mechanism in this figure in simplified form.

- Input sentence: First, we have the sentence "I appreciate Li Hongzhang". Before processing it, we need to convert each word into a form that can be handled mathematically, that is, a word vector. This process is called word embedding.

- Word embedding: Each word, such as "I", "appreciate" and "Li Hongzhang", is converted into a fixed-size vector. These vectors carry the semantic information of the words.

- Split into multiple heads: To let the model understand the sentence from different perspectives, the processing is split across multiple heads (8 in this figure). Each head focuses on a different aspect of the sentence.

- Calculate attention: For each head we compute what is called attention, which involves three roles. Taking "I appreciate Li Hongzhang" as an example, if we want to focus on the word "appreciate", then "appreciate" is the query, the other words such as "I" and "Li Hongzhang" are the keys, and the vectors carrying the content of those words are the values.

Query (Q): This is the part for which we want to find information.

Key (K): This is the part that indexes the information.

Value (V): This is the actual information content.

- Concatenation and output: After computing the attention of each head, we concatenate the results and generate the final output through the weight matrix WO. This output is used by the next layer or as part of the final result.

The shape question mentioned in the notes to the figure is about how to store and process these vectors efficiently. In real implementations, developers pack the query, key and value weights of multiple heads into single matrices instead of handling each head separately. This takes advantage of the parallel processing capabilities of modern hardware to speed up the computation.

We continue with the sentence "I appreciate Li Hongzhang" to explain the roles of the weight matrices WQ, WK, WV and WO.

In the Transformer model, each word is converted into a vector through word embedding. These vectors then pass through a series of linear transformations to compute attention scores, and those transformations are implemented by the weight matrices WQ, WK, WV and WO.

- WQ (weight matrix Q): This matrix converts the vector of each word into a "query" vector. In our example, if we want to focus on the word "appreciate", we multiply the vector of "appreciate" by WQ to get its query vector.

- WK (weight matrix K): This matrix converts the vector of each word into a "key" vector. We multiply the vector of each word, including "I" and "Li Hongzhang", by WK to get the key vectors.

- WV (weight matrix V): This matrix converts the vector of each word into a "value" vector. Multiplying each word vector by WV gives its value vector.

These three matrices (WQ, WK, WV) generate different query, key and value vectors for each head, which allows each head to focus on a different aspect of the sentence.

WQ, WK, WV and WO are all parameters of the Transformer model. They are learned during training through backpropagation and optimization methods such as gradient descent. They determine how the model converts the input word vectors into different representations and how it combines those representations into the final output. These matrices are the core of the attention mechanism in the Transformer model, and they enable the model to capture the relationships between different words in a sentence.

Let's take a look at how this learning process works:

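- Initialization: Before training starts, these matrices are usually initialized randomly. Their initial values are drawn at random, which breaks symmetry and lets learning begin.

- Forward pass: During training, the input data (such as the sentence "I appreciate Li Hongzhang") is propagated forward through the layers of the model. In the attention mechanism, the input word vectors are multiplied by the WQ, WK and WV matrices to produce the query, key and value vectors.

- Loss computation: The output of the model is compared with the expected output (usually the labels in the training data) to compute a loss value, which measures the gap between the model's prediction and the actual target.

- Backpropagation: The loss is propagated back through the model by the backpropagation algorithm, which computes the effect of each parameter (including WQ, WK, WV and WO) on the loss, i.e. its gradient.

- Parameter update: Based on the computed gradients, gradient descent or another optimization algorithm updates the values of these matrices. This gradually reduces the loss and makes the model's predictions more accurate.

- Iteration: This cycle of forward pass, loss computation, backpropagation and parameter update is repeated many times over the training data until the model's performance reaches a given standard or stops improving significantly.

Through this training process, the matrices WQ, WK, WV and WO gradually adjust their values so that the model can better understand and process the input data. After training, these matrices are fixed and used in the inference phase, that is, to make predictions on new input data.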
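The training cycle above can be shown on the smallest possible model: a single weight w that should learn y = 3x. This is a toy sketch, not how Llama 3 was trained; the data, the learning rate and the use of plain gradient descent on a squared-error loss are all chosen for illustration, and the weight starts at a fixed value instead of a random one so that the run is reproducible.

```python
# Toy training data: the target relationship is y = 3 * x.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

w = 0.0     # initialization (fixed here; real models use random values)
lr = 0.05   # learning rate

for step in range(200):                 # iteration
    grad = 0.0
    for x, y in data:
        pred = w * x                    # forward pass
        # loss for this sample is (pred - y) ** 2; its gradient with respect to w:
        grad += 2 * (pred - y) * x      # backpropagation, done by hand
    grad /= len(data)
    w -= lr * grad                      # parameter update

print(round(w, 3))  # 3.0
```

The weight matrices WQ, WK, WV and WO are updated by the same kind of loop, only with millions of entries each and gradients computed automatically by the training framework.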

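4. Expanding the query vectors

In this section we unwrap the queries of the individual attention heads. The resulting shape is [32x128x4096], where 32 is the number of attention heads in Llama 3, 128 is the size of a query vector, and 4096 is the size of a token embedding.

q_layer0 = model["layers.0.attention.wq.weight"]
head_dim = q_layer0.shape[0] // n_heads
q_layer0 = q_layer0.view(n_heads, head_dim, dim)
q_layer0.shape

Picture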

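This code reshapes the query (Q) weight matrix of the first layer, splitting it into one slice per attention head, which is what exposes the two dimensions 32 and 128.

- q_layer0 = model["layers.0.attention.wq.weight"]: extracts the query (Q) weight matrix of the first layer from the model.

- head_dim = q_layer0.shape[0] // n_heads: computes the dimension of each attention head by dividing the first dimension of the query weight matrix (4096) by the number of attention heads (n_heads). With n_heads = 32, head_dim is 4096 // 32 = 128.

- q_layer0 = q_layer0.view(n_heads, head_dim, dim): uses the .view() method to reshape the query weight matrix to [n_heads, head_dim, dim]. Here dim is the feature dimension 4096, n_heads is 32 and head_dim is 128, so the reshaped matrix has shape [32, 128, 4096].

- q_layer0.shape, output torch.Size([32, 128, 4096]): shows the shape of the reshaped query weight matrix, confirming that it is [32, 128, 4096].

The dimensions 32 and 128 appear here, and not in the earlier code, because the reshape explicitly splits the query weight matrix into multiple attention heads, each with its own slice. 32 is the number of attention heads in the model, and 128 is the feature dimension assigned to each head. This split is what implements multi-head attention: each head can attend to different parts of the input independently, and the outputs of the heads are combined at the end to increase the expressive power of the model.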
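What the reshape does to the rows of the matrix can be seen on a small example. The sketch below uses nested Python lists and toy sizes (2 heads of size 3 over a feature dimension of 4, standing in for 32, 128 and 4096); the weight values are made up so that each row is easy to recognize.

```python
n_heads, head_dim, dim = 2, 3, 4      # toy sizes standing in for 32, 128, 4096

# A weight of shape [n_heads * head_dim, dim]; row r holds r*10, r*10+1, ...
wq = [[r * 10 + c for c in range(dim)] for r in range(n_heads * head_dim)]

# Equivalent of view(n_heads, head_dim, dim) on a row-major matrix:
# consecutive blocks of head_dim rows become one head each.
heads = [wq[h * head_dim:(h + 1) * head_dim] for h in range(n_heads)]

print(len(heads), len(heads[0]), len(heads[0][0]))  # 2 3 4
print(heads[1][0] == wq[3])                         # True
```

No values are changed or moved: head 0 is rows 0 to 2 of the original matrix and head 1 is rows 3 to 5, which is why a cheap view is enough to separate the heads.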

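Implementing the first head of the first layer

Here we access the query weight matrix of the first head of the first layer. Its size is [128x4096].

q_layer0_head0 = q_layer0[0]
q_layer0_head0.shape

Picture

We now multiply the query weights with the token embeddings to obtain the query for each token.

Here you can see that the resulting shape is [17x128]: we have 17 tokens, and each token has a query of length 128 (one query per token for this head).

q_per_token = torch.matmul(token_embeddings, q_layer0_head0.T)
q_per_token.shape

Picture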

This code multiplies the token embeddings (token_embeddings) by the transpose (.T) of the query weight matrix of the first head of the first layer (q_layer0_head0) to produce a query vector for each token (q_per_token).

- q_per_token = torch.matmul(token_embeddings, q_layer0_head0.T):

torch.matmul is PyTorch's matrix multiplication function, which multiplies two tensors.

token_embeddings is a tensor of shape [17, 4096]: there are 17 tokens, each represented by a 4096-dimensional embedding vector.

q_layer0_head0 is the query weight matrix of the first head of the first layer, with shape [128, 4096]. .T is the transpose operation in PyTorch, which turns the shape of q_layer0_head0 into [4096, 128].

The product of token_embeddings and q_layer0_head0.T is therefore a multiplication of [17, 4096] by [4096, 128], and the result is a tensor of shape [17, 128].

- q_per_token.shape, output torch.Size([17, 128]):

This line shows the shape of the q_per_token tensor, confirming that it is [17, 128].

This means that for each of the 17 input tokens we now have a 128-dimensional query vector. It is obtained by multiplying the token embedding by the query weight matrix and is used in the subsequent attention computation.

In short, this code converts the embedding vector of each token into a query vector through matrix multiplication, in preparation for the next step of the attention mechanism. Each token now has its own query vector, and these query vectors will be used to compute attention scores against the other tokens.
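The shape bookkeeping of this multiplication can be checked on a small example. The sketch below is a pure-Python stand-in for torch.matmul(X, W.T), with a hypothetical helper `matmul_transposed` and made-up numbers: 2 tokens of dimension 3 and a head of size 4 replace the real 17, 4096 and 128.

```python
def matmul_transposed(X, W):
    """X: [tokens][dim], W: [head_dim][dim]  ->  X @ W.T with shape [tokens][head_dim]."""
    return [[sum(x * w for x, w in zip(x_row, w_row)) for w_row in W] for x_row in X]


X = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 1.0]]            # 2 tokens, dim 3
W = [[1.0, 1.0, 1.0],
     [0.0, 2.0, 0.0],
     [1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5]]            # head_dim 4, dim 3

q = matmul_transposed(X, W)
print(len(q), len(q[0]))   # 2 4
print(q[0])                # [3.0, 0.0, -1.0, 1.5]
```

Each entry of the result is the dot product of one token embedding with one row of the head's weight matrix, so the output has one row per token and one column per query feature.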

The above is the detailed content of Hand-tearing Llama3 layer 1: Implementing llama3 from scratch. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

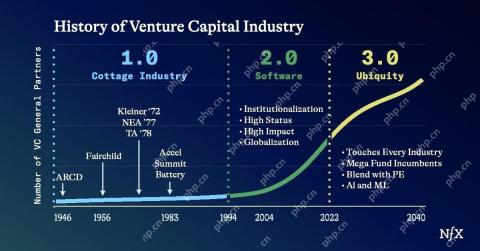

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 English version

Recommended: Win version, supports code prompts!

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Notepad++7.3.1

Easy-to-use and free code editor