Sqoop是一个用来将Hadoop(Hive、HBase)和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如:MySQL ,Oracle ,Postgres等)中的数据导入到Hadoop的HDFS中,也可以将HDFS的数据导入到关系型数据库中。Sqoop目前已经是Apache的顶级项目了,

Sqoop是一个用来将Hadoop(Hive、HBase)和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如:MySQL ,Oracle ,Postgres等)中的数据导入到Hadoop的HDFS中,也可以将HDFS的数据导入到关系型数据库中。 Sqoop目前已经是Apache的顶级项目了,目前版本是1.4.4 和 Sqoop2 1.99.3,本文以1.4.4的版本为例讲解基本的安装配置和简单应用的演示。- 安装配置

- 准备测试数据

- 导入数据到HDFS

- 导入数据到Hive

- 导入数据到HBase

sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz

1.1、下载后解压配置:

tar -zxvf sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz /usr/local/ cd /usr/local ln -s sqoop-1.4.4.bin__hadoop-2.0.4-alpha sqoop1.2、环境变量配置

vi ~/.bash_profile :

#Sqoop add by micmiu.com export SQOOP_HOME=/usr/local/sqoop export PATH=$SQOOP_HOME/bin:$PATH1.3、配置Sqoop参数: 复制

vi ?<sqoop_home>/conf/sqoop-env.sh</sqoop_home>

# 指定各环境变量的实际配置 # Set Hadoop-specific environment variables here. #Set path to where bin/hadoop is available #export HADOOP_COMMON_HOME= #Set path to where hadoop-*-core.jar is available #export HADOOP_MAPRED_HOME= #set the path to where bin/hbase is available #export HBASE_HOME= #Set the path to where bin/hive is available #export HIVE_HOME=ps:因为我当前用户的默认环境变量中已经配置了相关变量,故该配置文件无需再修改:

# Hadoop

export HADOOP_PREFIX="/usr/local/hadoop"

export HADOOP_HOME=${HADOOP_PREFIX}

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

# Native Path

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_PREFIX}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_PREFIX/lib/native"

# Hadoop end

#Hive

export HIVE_HOME=/usr/local/hive

export PATH=$HIVE_HOME/bin:$PATH

#HBase

export HBASE_HOME=/usr/local/hbase

export PATH=$HBASE

#add by micmiu.com

1.4、驱动jar包

下面测试演示以MySQL为例,则需要把mysql对应的驱动lib文件copy到 <sqoop_home>/lib</sqoop_home> 目录下。

[二]、测试数据准备

以MySQL 为例:

- 192.168.6.77(hostname:Master.Hadoop)

- database: test

- 用户:root 密码:micmiu

CREATE TABLE `demo_blog` ( `id` int(11) NOT NULL AUTO_INCREMENT, `blog` varchar(100) NOT NULL, PRIMARY KEY (`id`) ) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE `demo_log` ( `operator` varchar(16) NOT NULL, `log` varchar(100) NOT NULL ) ENGINE=MyISAM DEFAULT CHARSET=utf8;插入测试数据:

insert into demo_blog (id, blog) values (1, "micmiu.com");

insert into demo_blog (id, blog) values (2, "ctosun.com");

insert into demo_blog (id, blog) values (3, "baby.micmiu.com");

insert into demo_log (operator, log) values ("micmiu", "create");

insert into demo_log (operator, log) values ("micmiu", "update");

insert into demo_log (operator, log) values ("michael", "edit");

insert into demo_log (operator, log) values ("michael", "delete");

[三]、导入数据到HDFS

3.1、导入有主键的表

比如我需要把表 demo_blog (含主键) 的数据导入到HDFS中,执行如下命令:

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog执行过程如下:

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 09:58:43 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 09:58:43 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 09:58:43 INFO tool.CodeGenTool: Beginning code generation 14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 09:58:43 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 09:58:44 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.jar 14/04/09 09:58:44 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 09:58:44 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 09:58:44 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 09:58:44 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 09:58:44 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 09:58:44 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 09:58:45 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 09:58:45 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 09:58:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 09:58:47 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 09:58:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 09:58:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0001 14/04/09 09:58:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0001 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 09:58:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0001/ 14/04/09 09:58:47 INFO mapreduce.Job: Running job: job_1396936838233_0001 14/04/09 09:59:00 INFO mapreduce.Job: Job job_1396936838233_0001 running in uber mode : false 14/04/09 09:59:00 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 09:59:14 INFO mapreduce.Job: map 33% reduce 0% 14/04/09 09:59:16 INFO mapreduce.Job: map 67% reduce 0% 14/04/09 09:59:19 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 09:59:19 INFO mapreduce.Job: Job job_1396936838233_0001 completed successfully 14/04/09 09:59:19 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=271866 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=44 HDFS: Number of read operations=12 HDFS: Number of large read operations=0 HDFS: Number of write operations=6 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=43032 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=590 CPU time spent (ms)=6330 Physical memory (bytes) snapshot=440934400 Virtual memory (bytes) snapshot=3882573824 Total committed heap usage (bytes)=160563200 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=44 14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 34.454 seconds (1.2771 bytes/sec) 14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Retrieved 3 records.验证导入到hdfs上的数据:

$ hdfs dfs -ls /user/hadoop/demo_blog Found 4 items -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 09:59 /user/hadoop/demo_blog/_SUCCESS -rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00000 -rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00001 -rw-r--r-- 3 hadoop supergroup 18 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00002 [hadoop@Master ~]$ hdfs dfs -cat /user/hadoop/demo_blog/part-m-0000* 1,micmiu.com 2,ctosun.com 3,baby.micmiu.comps:默认设置下导入到hdfs上的路径是:?

/user/username/tablename/(files),比如我的当前用户是hadoop,那么实际路径即:?/user/hadoop/demo_blog/(files)。

如果要自定义路径需要增加参数:--warehouse-dir 比如:

sqoop import --connect jdbc:mysql://Master.Hadoop/test --username root --password micmiu --table demo_blog --warehouse-dir /user/micmiu/sqoop3.2、导入不含主键的表 比如需要把表 demo_log(无主键) 的数据导入到hdfs中,执行如下命令:

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operatorps:无主键表的导入需要增加参数?

--split-by xxx ?或者 -m 1

执行过程:

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operator Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 15:02:06 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 15:02:06 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 15:02:06 INFO tool.CodeGenTool: Beginning code generation 14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1 14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1 14/04/09 15:02:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 15:02:07 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.jar 14/04/09 15:02:07 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 15:02:07 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 15:02:07 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 15:02:07 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 15:02:07 INFO mapreduce.ImportJobBase: Beginning import of demo_log SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 15:02:07 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 15:02:08 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 15:02:08 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 15:02:10 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`operator`), MAX(`operator`) FROM `demo_log` 14/04/09 15:02:10 WARN db.TextSplitter: Generating splits for a textual index column. 14/04/09 15:02:10 WARN db.TextSplitter: If your database sorts in a case-insensitive order, this may result in a partial import or duplicate records. 14/04/09 15:02:10 WARN db.TextSplitter: You are strongly encouraged to choose an integral split column. 14/04/09 15:02:10 INFO mapreduce.JobSubmitter: number of splits:4 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 15:02:10 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 15:02:10 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0003 14/04/09 15:02:10 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0003 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 15:02:10 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0003/ 14/04/09 15:02:10 INFO mapreduce.Job: Running job: job_1396936838233_0003 14/04/09 15:02:17 INFO mapreduce.Job: Job job_1396936838233_0003 running in uber mode : false 14/04/09 15:02:17 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 15:02:28 INFO mapreduce.Job: map 25% reduce 0% 14/04/09 15:02:30 INFO mapreduce.Job: map 50% reduce 0% 14/04/09 15:02:33 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 15:02:33 INFO mapreduce.Job: Job job_1396936838233_0003 completed successfully 14/04/09 15:02:33 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=362536 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=516 HDFS: Number of bytes written=56 HDFS: Number of read operations=16 HDFS: Number of large read operations=0 HDFS: Number of write operations=8 Job Counters Launched map tasks=4 Other local map tasks=4 Total time spent by all maps in occupied slots (ms)=44481 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=4 Map output records=4 Input split bytes=516 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=429 CPU time spent (ms)=6650 Physical memory (bytes) snapshot=587669504 Virtual memory (bytes) snapshot=5219356672 Total committed heap usage (bytes)=205848576 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=56 14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Transferred 56 bytes in 25.2746 seconds (2.2157 bytes/sec) 14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Retrieved 4 records.验证导入的数据:

$ hdfs dfs -ls /user/micmiu/sqoop/demo_log Found 5 items -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/_SUCCESS -rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00000 -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00001 -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00002 -rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00003 $ hdfs dfs -cat /user/micmiu/sqoop/demo_log/part-m-0000* michael,edit michael,delete micmiu,create micmiu,update[四]、导入数据到Hive 比如把表demo_blog 数据导入到Hive中,增加参数 --hive-import?:

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table执行过程如下:

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 10:44:21 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 10:44:21 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override 14/04/09 10:44:21 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc. 14/04/09 10:44:21 WARN tool.BaseSqoopTool: It seems that you've specified at least one of following: 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-home 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-overwrite 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --create-hive-table 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-table 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-key 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-value 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --map-column-hive 14/04/09 10:44:21 WARN tool.BaseSqoopTool: Without specifying parameter --hive-import. Please note that 14/04/09 10:44:21 WARN tool.BaseSqoopTool: those arguments will not be used in this session. Either 14/04/09 10:44:21 WARN tool.BaseSqoopTool: specify --hive-import to apply them correctly or remove them 14/04/09 10:44:21 WARN tool.BaseSqoopTool: from command line to remove this warning. 14/04/09 10:44:21 INFO tool.BaseSqoopTool: Please note that --hive-home, --hive-partition-key, 14/04/09 10:44:21 INFO tool.BaseSqoopTool: hive-partition-value and --map-column-hive options are 14/04/09 10:44:21 INFO tool.BaseSqoopTool: are also valid for HCatalog imports and exports 14/04/09 10:44:21 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 10:44:21 INFO tool.CodeGenTool: Beginning code generation 14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:21 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 10:44:22 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.jar 14/04/09 10:44:22 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 10:44:22 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 10:44:22 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 10:44:22 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 10:44:22 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 10:44:22 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 10:44:23 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 10:44:23 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 10:44:25 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 10:44:25 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 10:44:25 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 10:44:25 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0002 14/04/09 10:44:25 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0002 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 10:44:25 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0002/ 14/04/09 10:44:25 INFO mapreduce.Job: Running job: job_1396936838233_0002 14/04/09 10:44:33 INFO mapreduce.Job: Job job_1396936838233_0002 running in uber mode : false 14/04/09 10:44:33 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 10:44:46 INFO mapreduce.Job: map 67% reduce 0% 14/04/09 10:44:48 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 10:44:49 INFO mapreduce.Job: Job job_1396936838233_0002 completed successfully 14/04/09 10:44:49 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=271860 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=44 HDFS: Number of read operations=12 HDFS: Number of large read operations=0 HDFS: Number of write operations=6 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=34047 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=505 CPU time spent (ms)=5350 Physical memory (bytes) snapshot=427388928 Virtual memory (bytes) snapshot=3881439232 Total committed heap usage (bytes)=171638784 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=44 14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 26.0401 seconds (1.6897 bytes/sec) 14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Retrieved 3 records. 14/04/09 10:44:49 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:49 INFO hive.HiveImport: Loading uploaded data into Hive 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed 14/04/09 10:44:53 INFO hive.HiveImport: 14/04/09 10:44:53 WARN conf.HiveConf: DEPRECATED: hive.metastore.ds.retry.* no longer has any effect. Use hive.hmshandler.retry.* instead 14/04/09 10:44:53 INFO hive.HiveImport: 14/04/09 10:44:53 INFO hive.HiveImport: Logging initialized using configuration in file:/usr/local/hive-0.13.0-bin/conf/hive-log4j.properties 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings. 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 10:44:57 INFO hive.HiveImport: OK 14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.773 seconds 14/04/09 10:44:57 INFO hive.HiveImport: Loading data to table default.demo_blog 14/04/09 10:44:57 INFO hive.HiveImport: Table default.demo_blog stats: [numFiles=4, numRows=0, totalSize=44, rawDataSize=0] 14/04/09 10:44:57 INFO hive.HiveImport: OK 14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.25 seconds 14/04/09 10:44:57 INFO hive.HiveImport: Hive import complete. 14/04/09 10:44:57 INFO hive.HiveImport: Export directory is empty, removing itHive CLI中验证导入的数据:

hive> show tables; OK demo_blog hbase_table_1 hbase_table_2 hbase_table_3 micmiu_blog micmiu_hx_master pokes xflow_dstip Time taken: 0.073 seconds, Fetched: 8 row(s) hive> select * from demo_blog; OK 1 micmiu.com 2 ctosun.com 3 baby.micmiu.com Time taken: 0.506 seconds, Fetched: 3 row(s)[五]、导入数据到HBase 演示把表 demo_blog 数据导入到HBase ,指定Hbase中表名为 demo_sqoop2hbase 的命令:

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url执行过程:

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 16:23:38 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 16:23:38 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 16:23:38 INFO tool.CodeGenTool: Beginning code generation 14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 16:23:39 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 16:23:40 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.jar 14/04/09 16:23:40 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 16:23:40 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 16:23:40 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 16:23:40 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 16:23:40 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 16:23:40 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 16:23:40 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:host.name=Master.Hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.version=1.6.0_20 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Sun Microsystems Inc. 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.home=/java/jdk1.6.0_20/jre 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/local/hadoop/etc/hadoop: ....... 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/local/hadoop/lib/native 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.compiler= 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-71.el6.x86_64 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.name=hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.dir=/home/hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave5.Hadoop/192.168.8.205:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave5.Hadoop/192.168.8.205:2181, initiating session 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave5.Hadoop/192.168.8.205:2181, sessionid = 0x453fecb6c50009, negotiated timeout = 90000 14/04/09 16:23:41 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50008, negotiated timeout = 90000 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50008 closed 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: EventThread shut down 14/04/09 16:23:41 INFO mapreduce.HBaseImportJob: Creating missing HBase table demo_sqoop2hbase 14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:42 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50009, negotiated timeout = 90000 14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50009 closed 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: EventThread shut down 14/04/09 16:23:42 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 16:23:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0005 14/04/09 16:23:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0005 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0005/ 14/04/09 16:23:47 INFO mapreduce.Job: Running job: job_1396936838233_0005 14/04/09 16:23:55 INFO mapreduce.Job: Job job_1396936838233_0005 running in uber mode : false 14/04/09 16:23:55 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 16:24:05 INFO mapreduce.Job: map 33% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: Job job_1396936838233_0005 completed successfully 14/04/09 16:24:12 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=354636 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=0 HDFS: Number of read operations=3 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=35297 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=381 CPU time spent (ms)=11050 Physical memory (bytes) snapshot=543367168 Virtual memory (bytes) snapshot=3918925824 Total committed heap usage (bytes)=156958720 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=0 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 29.7126 seconds (0 bytes/sec) 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Retrieved 3 records.hbase shell中验证导入的数据:

hbase(main):009:0> list

TABLE

demo_sqoop2hbase

table_02

table_03

test_table

xyz

5 row(s) in 0.0310 seconds

=> ["demo_sqoop2hbase", "table_02", "table_03", "test_table", "xyz"]

hbase(main):010:0> scan "demo_sqoop2hbase"

ROW COLUMN+CELL

1 column=url:blog, timestamp=1397031850700, value=micmiu.com

2 column=url:blog, timestamp=1397031844106, value=ctosun.com

3 column=url:blog, timestamp=1397031849888, value=baby.micmiu.com

3 row(s) in 0.0730 seconds

hbase(main):011:0> describe "demo_sqoop2hbase"

DESCRIPTION ENABLED

'demo_sqoop2hbase', {NAME => 'url', DATA_BLOCK_ENCODING => 'NONE', BL true

OOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRE

SSION => 'NONE', MIN_VERSIONS => '0', TTL => '2147483647', KEEP_DELET

ED_CELLS => 'false', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOC

KCACHE => 'true'}

1 row(s) in 0.0580 seconds

hbase(main):012:0>

验证导入成功。

本文到此已经把MySQL中的数据迁移到 HDFS、Hive、HBase的三种基本情况演示结束。

参考:

- http://sqoop.apache.org/docs/1.4.4/SqoopUserGuide.html

原文地址:Sqoop安装配置及演示, 感谢原作者分享。

win11安装语言包错误0x800f0950什么原因Jul 01, 2023 pm 11:29 PM

win11安装语言包错误0x800f0950什么原因Jul 01, 2023 pm 11:29 PMwin11安装语言包错误0x800f0950什么原因?当我们在给windows11系统安装新语言包时,有时会遇到系统提示错误代码:0x800f0950,导致语言包安装流程无法继续进行下去。导致这个错误代码一般是什么原因,又要怎么解决呢?今天小编就来给大家说明一下win11安装语言包错误0x800f0950的具体解决步骤,有需要的用户们赶紧来看一下吧。win11电脑错误代码0x800f0950解决技巧1、首先按下快捷键“Win+R”打开运行,然后输入:Regedit打开注册表。2、在搜索框中输入“

如何在 Google Docs 中安装自定义字体Apr 26, 2023 pm 01:40 PM

如何在 Google Docs 中安装自定义字体Apr 26, 2023 pm 01:40 PMGoogleDocs在学校和工作环境中变得很流行,因为它提供了文字处理器所期望的所有功能。使用Google文档,您可以创建文档、简历和项目提案,还可以与世界各地的其他用户同时工作。您可能会注意到GoogleDocs不包括MicrosoftWord附带的所有功能,但它提供了自定义文档的能力。使用正确的字体可以改变文档的外观并使其具有吸引力。GoogleDocs提供了大量字体,您可以根据自己的喜好从中选择任何人。如果您希望将自定义字体添加到Google文档,请继续阅读本文。在本文中

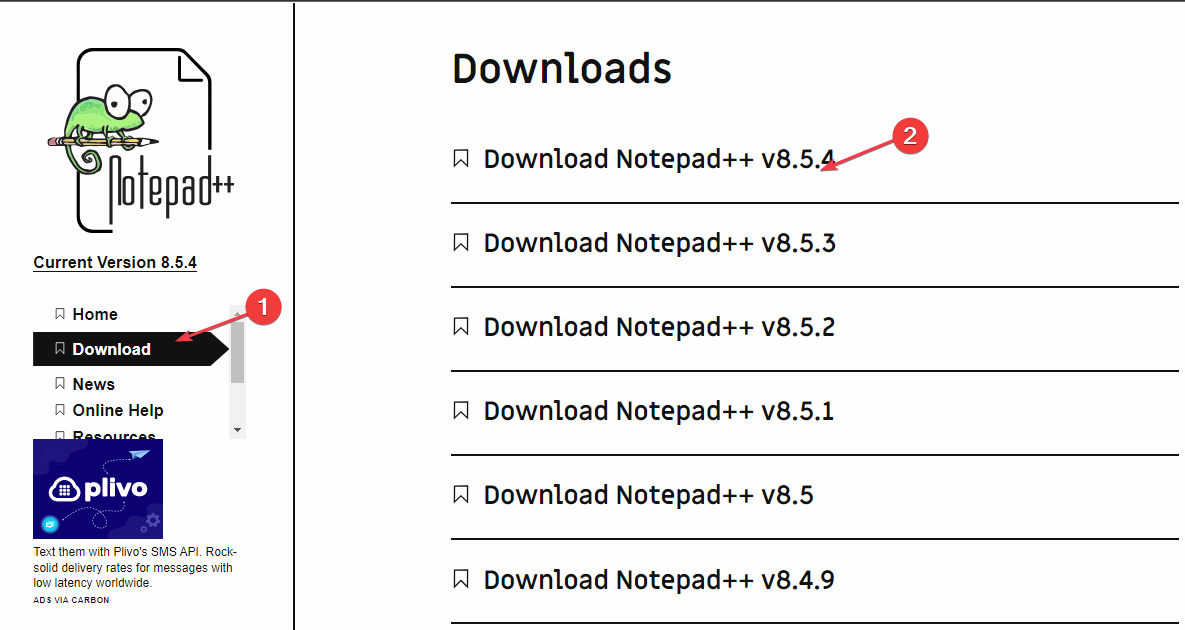

适用于 Windows 11 的记事本++:如何下载和安装它Jul 06, 2023 pm 10:41 PM

适用于 Windows 11 的记事本++:如何下载和安装它Jul 06, 2023 pm 10:41 PMNotepad++主要由开发人员用于编辑源代码,由临时用户用于编辑文本。但是,如果您刚刚升级到Windows11,则在您的系统上下载和安装该应用程序可能具有挑战性。因此,我们将讨论在Windows11上下载和安装记事本++。此外,您可以轻松阅读我们关于修复Notepad++在Windows上没有响应的详细指南。记事本++可以在Windows11上运行吗?是的,记事本++可以在Windows11上有效工作,而不会出现兼容性问题。更具体地说,没有臃肿的选项或错误,只需在一个非常小的编辑器中即可。此外

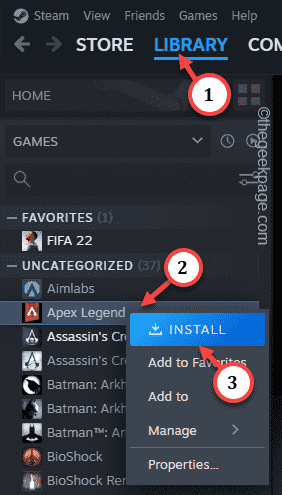

Steam 未检测到 Windows 11/10 中已安装的游戏,如何修复Jun 27, 2023 pm 11:47 PM

Steam 未检测到 Windows 11/10 中已安装的游戏,如何修复Jun 27, 2023 pm 11:47 PMSteam客户端无法识别您计算机上的任何游戏吗?当您从计算机上卸载Steam客户端时,会发生这种情况。但是,当您重新安装Steam应用程序时,它会自动识别已安装文件夹中的游戏。但是,别担心。不,您不必重新下载计算机上的所有游戏。有一些基本和一些高级解决方案可用。修复1–尝试在同一位置安装游戏这是解决这个问题的最简单方法。只需打开Steam应用程序并尝试在同一位置安装游戏即可。步骤1–在您的系统上打开Steam客户端。步骤2–直接进入“库”以查找您拥有的所有游戏。第3步–选择游戏。它将列在“未分类

修复:在 Xbox 应用上的 Halo Infinite(Campaign)安装错误代码 0X80070032、0X80070424 或 0X80070005May 21, 2023 am 11:41 AM

修复:在 Xbox 应用上的 Halo Infinite(Campaign)安装错误代码 0X80070032、0X80070424 或 0X80070005May 21, 2023 am 11:41 AM<p><strong>HaloInfinite(Campaign)</strong>是一款第一人称射击视频游戏,于2021年11月推出,可供单人和多用户使用。该游戏是Halo系列的延续,适用于Windows、XboxOne和Xbox系列的用户X|S。最近,它还在PC版XboxGamePass上发布,以提高其可访问性。大量玩家报告在尝试使用WindowsPC上的<strong>Xbox应

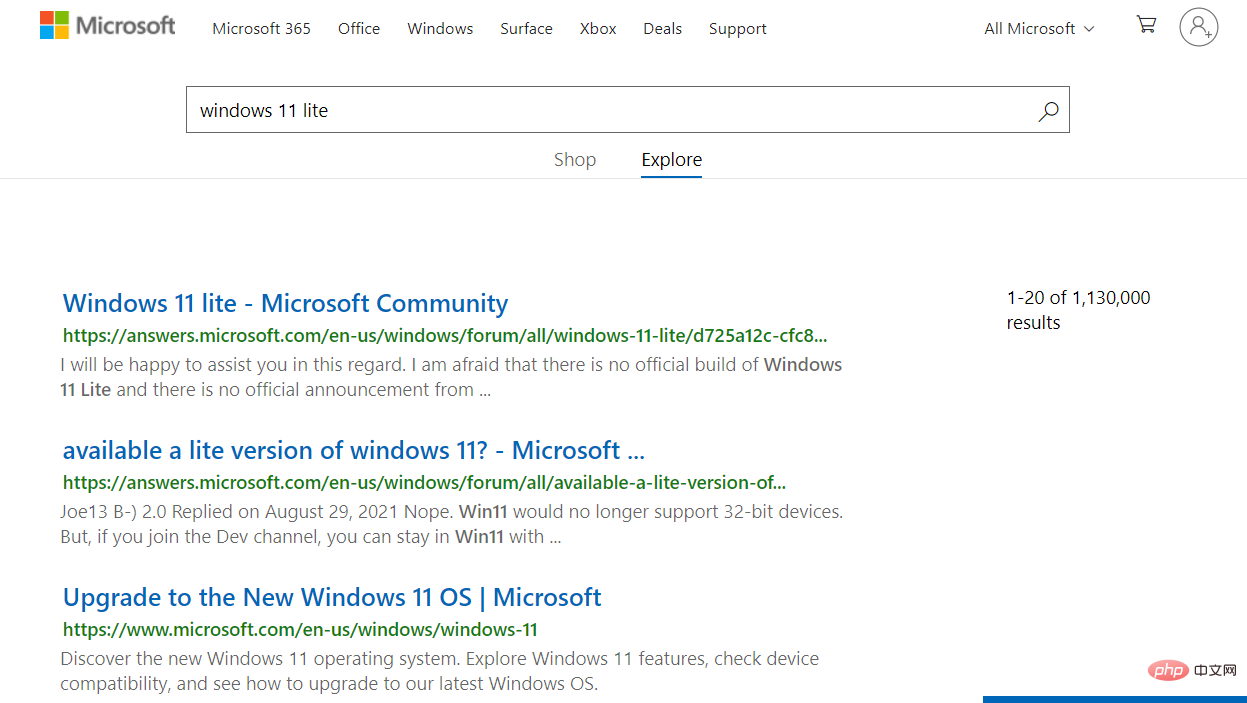

Windows 11 Lite:它是什么以及如何在您的 PC 上安装它Apr 14, 2023 pm 11:19 PM

Windows 11 Lite:它是什么以及如何在您的 PC 上安装它Apr 14, 2023 pm 11:19 PM我们深知MicrosoftWindows11是一个功能齐全且设计吸引人的操作系统。但是,用户一直要求Windows11Lite版本。尽管它提供了重大改进,但Windows11是一个资源匮乏的操作系统,它可能很快就会使旧机器混乱到无法顺利运行的地步。本文将解决您最常问的关于是否有Windows11Lite版本以及是否可以安全下载的问题。跟着!有Windows11Lite版本吗?我们正在谈论的Windows11Lite21H2版本是由Neelkalpa的T

虚拟机如何安装Win11Jul 03, 2023 pm 12:17 PM

虚拟机如何安装Win11Jul 03, 2023 pm 12:17 PM虚拟机怎么安装Win11?近期有用户想要尝试使用VirtualBox虚拟机安装Win11,但是不太清楚具体的操作方法,针对这一情况,小编将为大家演示使用VirtualBox安装Win11的方法,很多小伙伴不知道怎么详细操作,小编下面整理了使用VirtualBox安装Win11的步骤,如果你感兴趣的话,跟着小编一起往下看看吧! 使用VirtualBox安装Win11的步骤 1、要下载VirtualBox,请前往VirtualBox官方下载页面,下载适用于Windows的.exe文件。如果你

教大家win7精简版如何安装Jul 09, 2023 pm 02:05 PM

教大家win7精简版如何安装Jul 09, 2023 pm 02:05 PM重装系统对于电脑小白来说真不是一件简单的事情,那么下面就和大家聊聊电脑重装精简版win7系统的一个方法吧。1、在小白一键重装系统官网中下载小白三步装机版软件并打开,软件会自动帮助我们匹配合适的系统,然后点击立即重装。2、接下来软件就会帮助我们直接下载系统镜像,只需要耐心等候即可。3、下载完成后软件会帮助我们直接进行在线重装Windows系统,请根据提示操作。4、安装完成后会提示我们重启,选择立即重启。5、重启后在PE菜单中选择XiaoBaiPE-MSDNOnlineInstallMode菜单进入

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 Linux new version

SublimeText3 Linux latest version