
Overview

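The previous article, "Feature Point Detection with the SURF and SIFT Operators", briefly covered how to detect keypoints with SIFT and SURF. Building on detection, the same operators can compute a descriptor for each keypoint, and a matcher can then pair up keypoints across two images. The concrete steps are: detect keypoints with SurfFeatureDetector, compute the feature vector (descriptor) of each keypoint with SurfDescriptorExtractor, and finally match the descriptors with either BruteForceMatcher (exhaustive matching) or FlannBasedMatcher (approximate nearest-neighbor matching). The two matchers differ in how they search for the closest descriptor.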

The experiments were run with OpenCV 2.4.0, Visual Studio 2008, and Windows 7. Note that in OpenCV 2.4.x, SurfFeatureDetector is declared in opencv2/nonfree/features2d.hpp, BruteForceMatcher in opencv2/legacy/legacy.hpp, and FlannBasedMatcher in opencv2/features2d/features2d.hpp.

BruteForce Matching

First, match with the BruteForceMatcher. The code is as follows:

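/**
 * @brief Detect keypoints with SURF, compute their descriptors, and match them with the BruteForce matcher
 * @SurfFeatureDetector + SurfDescriptorExtractor + BruteForceMatcher
 * @author holybin
 */
#include <stdio.h>
#include <iostream>
#include "opencv2/core/core.hpp"
#include "opencv2/nonfree/features2d.hpp"   // SurfFeatureDetector is declared in this header
#include "opencv2/legacy/legacy.hpp"        // BruteForceMatcher is declared in this header
//#include "opencv2/features2d/features2d.hpp"  // FlannBasedMatcher is declared in this header
#include "opencv2/highgui/highgui.hpp"
using namespace cv;
using namespace std;

int main( int argc, char** argv )
{
    Mat src_1 = imread( "D:\\opencv_pic\\cat3d120.jpg", CV_LOAD_IMAGE_GRAYSCALE );
    Mat src_2 = imread( "D:\\opencv_pic\\cat0.jpg", CV_LOAD_IMAGE_GRAYSCALE );
    if( !src_1.data || !src_2.data )
    {
        cout << " --(!) Error reading images " << endl;
        return -1;
    }

    //-- Step 1: detect keypoints with SURF
    int minHessian = 400;   // assumed value; the original setting was lost in extraction
    SurfFeatureDetector detector( minHessian );
    vector<KeyPoint> keypoints_1, keypoints_2;
    detector.detect( src_1, keypoints_1 );
    detector.detect( src_2, keypoints_2 );
    cout << "img1 -- number of keypoints: " << keypoints_1.size() << endl;
    cout << "img2 -- number of keypoints: " << keypoints_2.size() << endl;

    //-- Step 2: compute the SURF descriptor (feature vector) of each keypoint
    SurfDescriptorExtractor extractor;
    Mat descriptors_1, descriptors_2;
    extractor.compute( src_1, keypoints_1, descriptors_1 );
    extractor.compute( src_2, keypoints_2, descriptors_2 );

    //-- Step 3: match the descriptors exhaustively using the L2 distance
    BruteForceMatcher< L2<float> > matcher;
    vector<DMatch> matches;
    matcher.match( descriptors_1, descriptors_2, matches );
    cout << "number of matches: " << matches.size() << endl;

    //-- Draw and show the matches
    Mat matchImg;
    drawMatches( src_1, keypoints_1, src_2, keypoints_2, matches, matchImg );
    imshow( "matching result", matchImg );
    waitKey( 0 );
    return 0;
}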

<p>Experimental result:</p>

<img src="/static/imghwm/default1.png" data-src="/inc/test.jsp?url=http%3A%2F%2Fimg.blog.csdn.net%2F20141115151204375%3Fwatermark%2F2%2Ftext%2FaHR0cDovL2Jsb2cuY3Nkbi5uZXQvaG9seWJpbg%3D%3D%2Ffont%2F5a6L5L2T%2Ffontsize%2F400%2Ffill%2FI0JBQkFCMA%3D%3D%2Fdissolve%2F70%2Fgravity%2FSouthEast&refer=http%3A%2F%2Fblog.csdn.net%2Fu012564690%2Farticle%2Fdetails%2F17370511" class="lazy" alt="Learning feature2D in OpenCV: keypoint extraction and matching with the SIFT and SURF operators" ><br>
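To make the matching step less of a black box, the following is a minimal sketch of what exhaustive L2 matching does conceptually: for every descriptor of the first image, scan all descriptors of the second image and keep the closest one. It uses only the standard library. The names `Match`, `l2Distance`, and `bruteForceMatch` are hypothetical stand-ins for illustration and are not OpenCV's implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch: indices into both descriptor sets plus the distance.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Euclidean (L2) distance between two descriptors of equal length.
float l2Distance(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = static_cast<double>(a[i]) - static_cast<double>(b[i]);
        sum += d * d;
    }
    return static_cast<float>(std::sqrt(sum));
}

// For each query descriptor, scan every train descriptor and keep the nearest one.
std::vector<Match> bruteForceMatch(const std::vector<std::vector<float>>& query,
                                   const std::vector<std::vector<float>>& train) {
    std::vector<Match> matches;
    if (train.empty()) return matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        Match best = {static_cast<int>(q), -1, std::numeric_limits<float>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = l2Distance(query[q], train[t]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(t);
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

Because every query descriptor always receives a nearest neighbor, this kind of matching returns one match per query keypoint even when the true counterpart is absent from the second image, which is one reason unfiltered results contain many wrong pairs.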


<h1 id="span-FLANN匹配法-span"><span>FLANN Matching</span></h1>

<p>The brute-force result is not very good. Next, match with FlannBasedMatcher and keep only the good matches. The code is as follows:</p>

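/**
 * @brief Detect keypoints with SURF, compute their descriptors, and match them with the FLANN matcher
 * @SurfFeatureDetector + SurfDescriptorExtractor + FlannBasedMatcher
 * @author holybin
 */
#include <stdio.h>
#include <cfloat>
#include <iostream>
#include "opencv2/core/core.hpp"
#include "opencv2/nonfree/features2d.hpp"   // SurfFeatureDetector is declared in this header
//#include "opencv2/legacy/legacy.hpp"      // BruteForceMatcher is declared in this header
#include "opencv2/features2d/features2d.hpp"    // FlannBasedMatcher is declared in this header
#include "opencv2/highgui/highgui.hpp"
using namespace cv;
using namespace std;

int main( int argc, char** argv )
{
    Mat src_1 = imread( "D:\\opencv_pic\\cat3d120.jpg", CV_LOAD_IMAGE_GRAYSCALE );
    Mat src_2 = imread( "D:\\opencv_pic\\cat0.jpg", CV_LOAD_IMAGE_GRAYSCALE );
    if( !src_1.data || !src_2.data )
    {
        cout << " --(!) Error reading images " << endl;
        return -1;
    }

    //-- Step 1: detect keypoints with SURF
    int minHessian = 400;   // assumed value; the original setting was lost in extraction
    SurfFeatureDetector detector( minHessian );
    vector<KeyPoint> keypoints_1, keypoints_2;
    detector.detect( src_1, keypoints_1 );
    detector.detect( src_2, keypoints_2 );
    cout << "img1 -- number of keypoints: " << keypoints_1.size() << endl;
    cout << "img2 -- number of keypoints: " << keypoints_2.size() << endl;

    //-- Step 2: compute the SURF descriptor (feature vector) of each keypoint
    SurfDescriptorExtractor extractor;
    Mat descriptors_1, descriptors_2;
    extractor.compute( src_1, keypoints_1, descriptors_1 );
    extractor.compute( src_2, keypoints_2, descriptors_2 );

    //-- Step 3: match the descriptors with FLANN
    FlannBasedMatcher matcher;
    vector<DMatch> allMatches;
    matcher.match( descriptors_1, descriptors_2, allMatches );
    cout << "number of matches before filtering: " << allMatches.size() << endl;

    //-- Find the largest and smallest match distances
    double maxDist = 0;
    double minDist = DBL_MAX;
    for( size_t i = 0; i < allMatches.size(); i++ )
    {
        double dist = allMatches[i].distance;
        if( dist < minDist ) minDist = dist;
        if( dist > maxDist )
            maxDist = dist;
    }
    printf(" max dist : %f \n", maxDist );
    printf(" min dist : %f \n", minDist );

    //-- Keep only the good matches (criterion assumed here: distance < 2*minDist; the original threshold was lost in extraction)
    vector<DMatch> goodMatches;
    for( size_t i = 0; i < allMatches.size(); i++ )
        if( allMatches[i].distance < 2*minDist )
            goodMatches.push_back( allMatches[i] );
    cout << "number of matches after filtering: " << goodMatches.size() << endl;

    //-- Draw and show the good matches
    Mat matchImg;
    drawMatches( src_1, keypoints_1, src_2, keypoints_2,
        goodMatches, matchImg, Scalar::all(-1), Scalar::all(-1),
        vector<char>(),
        DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS // do not draw unmatched keypoints
        );
    imshow("matching result", matchImg );

    //-- Print which keypoints were matched to each other
    for( size_t i = 0; i < goodMatches.size(); i++ )
        printf(" good match %d: keypoints_1 [%d] -- keypoints_2 [%d]\n", (int)i, goodMatches[i].queryIdx, goodMatches[i].trainIdx );

    waitKey(0);
    return 0;
}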

<p>Experimental result:</p>

<img src="/static/imghwm/default1.png" data-src="/inc/test.jsp?url=http%3A%2F%2Fimg.blog.csdn.net%2F20141115151359125%3Fwatermark%2F2%2Ftext%2FaHR0cDovL2Jsb2cuY3Nkbi5uZXQvaG9seWJpbg%3D%3D%2Ffont%2F5a6L5L2T%2Ffontsize%2F400%2Ffill%2FI0JBQkFCMA%3D%3D%2Fdissolve%2F70%2Fgravity%2FSouthEast&refer=http%3A%2F%2Fblog.csdn.net%2Fu012564690%2Farticle%2Fdetails%2F17370511" class="lazy" alt="Learning feature2D in OpenCV: keypoint extraction and matching with the SIFT and SURF operators" ><br>


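<p>The second result shows that filtering reduces the number of matched points from 49 to 33 and that the matches become more accurate. The same two experiments can also be run with the SIFT operator: simply replace SurfFeatureDetector with SiftFeatureDetector and SurfDescriptorExtractor with SiftDescriptorExtractor.</p>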
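The filtering idea can be isolated from OpenCV in a few lines. The sketch below keeps a match only if its distance is below a multiple of the smallest distance found. It is an illustration under stated assumptions: `Match` is a hypothetical stand-in for cv::DMatch, and `factor` and `minThreshold` are hypothetical parameters. The floor `minThreshold` is an addition of this sketch, there so that a perfect match with distance 0 does not force the threshold to 0 and reject everything.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep the matches whose distance is below max(factor * smallest distance, minThreshold).
std::vector<Match> filterByMinDistance(const std::vector<Match>& all,
                                       float factor,
                                       float minThreshold) {
    std::vector<Match> good;
    if (all.empty()) return good;

    // Smallest distance over all matches.
    float minDist = all[0].distance;
    for (std::size_t i = 1; i < all.size(); ++i) {
        minDist = std::min(minDist, all[i].distance);
    }

    // The threshold scales with the best match but never drops below the floor.
    const float threshold = std::max(factor * minDist, minThreshold);
    for (std::size_t i = 0; i < all.size(); ++i) {
        if (all[i].distance < threshold) {
            good.push_back(all[i]);
        }
    }
    return good;
}
```

A larger `factor` keeps more matches at the cost of more false pairs, so the value is worth tuning per image pair. Descriptor scale also matters when switching operators, since distances between SIFT descriptors are typically on a different scale from distances between SURF descriptors.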


<h1 id="span-拓展-span"><span>Extensions</span></h1>

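<p>Building on FLANN matching, a known object can be located in a scene (object detection) by means of a perspective transform. Specifically, the findHomography function estimates the transform from the matched keypoints, and the perspectiveTransform function then maps a set of points through it. For details, see the article "Learning feature2D in OpenCV: Object Detection with the SIFT and SURF Algorithms".</p>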
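As a rough illustration of the mapping step, the sketch below applies a 3x3 homography to 2D points: each point is lifted to homogeneous coordinates, multiplied by the matrix, and divided by the resulting third component. This is the underlying math only, written with the standard library. `Point2`, `Homography`, `applyHomography`, and `transformPoints` are hypothetical names, and estimating the matrix itself (the job of findHomography) is not shown.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal types for this sketch.
struct Point2 {
    double x;
    double y;
};

struct Homography {
    double m[3][3];  // row-major 3x3 matrix
};

// Map one point: (x, y, 1) -> H * (x, y, 1), then divide by the third component w.
Point2 applyHomography(const Homography& H, const Point2& p) {
    const double xh = H.m[0][0] * p.x + H.m[0][1] * p.y + H.m[0][2];
    const double yh = H.m[1][0] * p.x + H.m[1][1] * p.y + H.m[1][2];
    const double w  = H.m[2][0] * p.x + H.m[2][1] * p.y + H.m[2][2];
    assert(std::fabs(w) > 1e-12);  // w == 0 would send the point to infinity
    Point2 out = {xh / w, yh / w};
    return out;
}

// Map a whole set of points, e.g. the four corners of the object image.
std::vector<Point2> transformPoints(const Homography& H, const std::vector<Point2>& pts) {
    std::vector<Point2> out;
    out.reserve(pts.size());
    for (std::size_t i = 0; i < pts.size(); ++i) {
        out.push_back(applyHomography(H, pts[i]));
    }
    return out;
}
```

In an object-detection setting, the points passed in would typically be the corners of the reference image, and the mapped corners outline where the object appears in the scene.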


</iostream></stdio.h> python OpenCV图像金字塔实例分析May 11, 2023 pm 08:40 PM

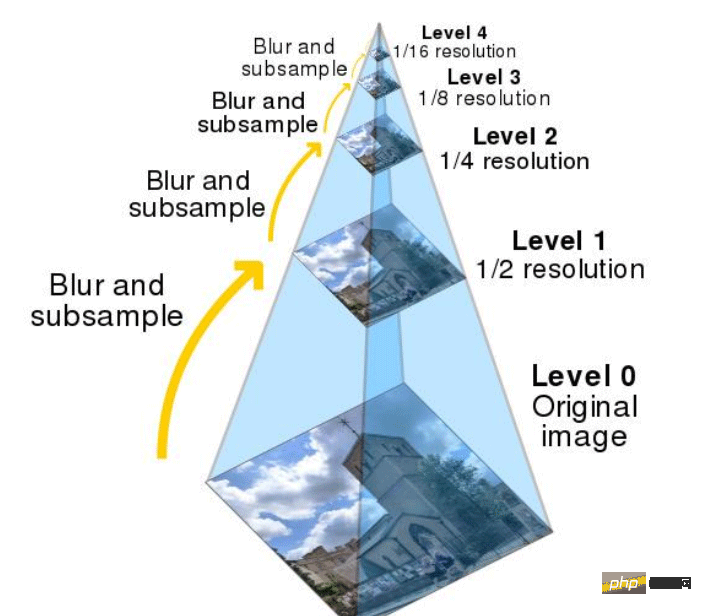

python OpenCV图像金字塔实例分析May 11, 2023 pm 08:40 PM1.图像金字塔理论基础图像金字塔是图像多尺度表达的一种,是一种以多分辨率来解释图像的有效但概念简单的结构。一幅图像的金字塔是一系列以金字塔形状排列的分辨率逐步降低,且来源于同一张原始图的图像集合。其通过梯次向下采样获得,直到达到某个终止条件才停止采样。我们将一层一层的图像比喻成金字塔,层级越高,则图像越小,分辨率越低。那我们为什么要做图像金字塔呢?这就是因为改变像素大小有时候并不会改变它的特征,比方说给你看1000万像素的图片,你能知道里面有个人,给你看十万像素的,你也能知道里面有个人,但是对计

Python+OpenCV怎么实现拖拽虚拟方块效果May 15, 2023 pm 07:22 PM

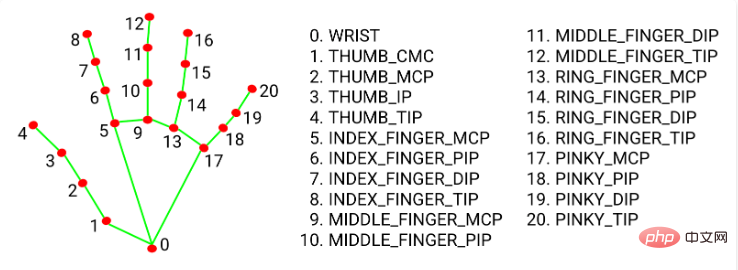

Python+OpenCV怎么实现拖拽虚拟方块效果May 15, 2023 pm 07:22 PM一、项目效果二、核心流程1、openCV读取视频流、在每一帧图片上画一个矩形。2、使用mediapipe获取手指关键点坐标。3、根据手指坐标位置和矩形的坐标位置,判断手指点是否在矩形上,如果在则矩形跟随手指移动。三、代码流程环境准备:python:3.8.8opencv:4.2.0.32mediapipe:0.8.10.1注:1、opencv版本过高或过低可能出现一些如摄像头打不开、闪退等问题,python版本影响opencv可选择的版本。2、pipinstallmediapipe后可能导致op

如何使用PHP和OpenCV库实现视频处理?Jul 17, 2023 pm 09:13 PM

如何使用PHP和OpenCV库实现视频处理?Jul 17, 2023 pm 09:13 PM如何使用PHP和OpenCV库实现视频处理?摘要:在现代科技应用中,视频处理已经成为一项重要的技术。本文将介绍如何使用PHP编程语言结合OpenCV库来实现一些基本的视频处理功能,并附上相应的代码示例。关键词:PHP、OpenCV、视频处理、代码示例引言:随着互联网的发展和智能手机的普及,视频内容已经成为人们生活中不可或缺的一部分。然而,要想实现视频的编辑和

在PHP中使用OpenCV实现计算机视觉应用Jun 19, 2023 pm 03:09 PM

在PHP中使用OpenCV实现计算机视觉应用Jun 19, 2023 pm 03:09 PM计算机视觉(ComputerVision)是人工智能领域的重要分支之一,它可以使计算机能够自动地感知和理解图像、视频等视觉信号,实现人机交互以及自动化控制等应用场景。OpenCV(OpenSourceComputerVisionLibrary)是一个流行的开源计算机视觉库,在计算机视觉、机器学习、深度学习等领域都有广泛的应用。本文将介绍在PHP中使

在Python中,可以使用OpenCV库中的方法对图像进行分割和提取。May 08, 2023 pm 10:55 PM

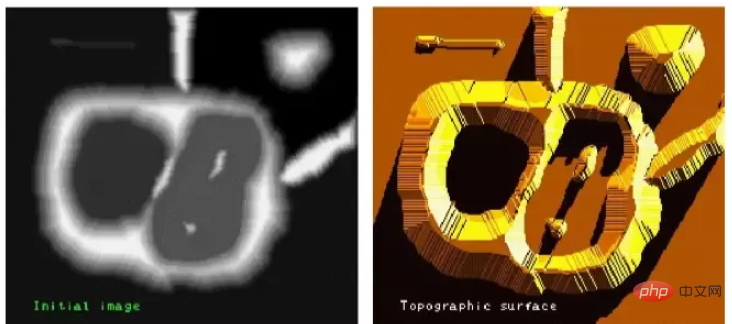

在Python中,可以使用OpenCV库中的方法对图像进行分割和提取。May 08, 2023 pm 10:55 PM图像分割与提取图像中将前景对象作为目标图像分割或者提取出来。对背景本身并无兴趣分水岭算法及GrabCut算法对图像进行分割及提取。用分水岭算法实现图像分割与提取分水岭算法将图像形象地比喻为地理学上的地形表面,实现图像分割,该算法非常有效。算法原理任何一幅灰度图像,都可以被看作是地理学上的地形表面,灰度值高的区域可以被看成是山峰,灰度值低的区域可以被看成是山谷。左图是原始图像,右图是其对应的“地形表面”。该过程将图像分成两个不同的集合:集水盆地和分水岭线。我们构建的堤坝就是分水岭线,也即对原始图像

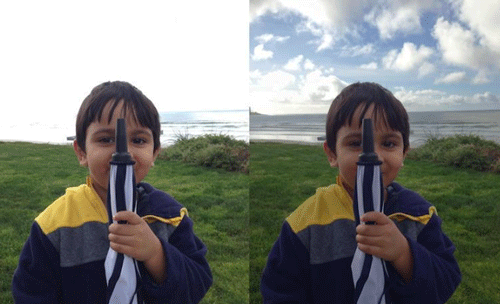

如何使用PHP和OpenCV库实现图像锐化?Jul 17, 2023 am 08:31 AM

如何使用PHP和OpenCV库实现图像锐化?Jul 17, 2023 am 08:31 AM如何使用PHP和OpenCV库实现图像锐化?概述:图像锐化是一种常见的图像处理技术,用于提高图像的清晰度和边缘的强度。在本文中,我们将介绍如何使用PHP和OpenCV库来实现图像锐化。OpenCV是一款功能强大的开源计算机视觉库,它提供了丰富的图像处理功能。我们将使用OpenCV的PHP扩展来实现图像锐化算法。步骤1:安装OpenCV和PHP扩展首先,我们需

利用Java、Selenium和OpenCV结合的方法,解决自动化测试中滑块验证问题。May 08, 2023 pm 08:16 PM

利用Java、Selenium和OpenCV结合的方法,解决自动化测试中滑块验证问题。May 08, 2023 pm 08:16 PM1、滑块验证思路被测对象的滑块对象长这个样子。相对而言是比较简单的一种形式,需要将左侧的拼图通过下方的滑块进行拖动,嵌入到右侧空槽中,即完成验证。要自动化完成这个验证过程,关键点就在于确定滑块滑动的距离。根据上面的分析,验证的关键点在于确定滑块滑动的距离。但是看似简单的一个需求,完成起来却并不简单。如果使用自然逻辑来分析这个过程,可以拆解如下:1.定位到左侧拼图所在的位置,由于拼图的形状和大小固定,那么其实只需要定位其左边边界离背景图片的左侧距离。(实际在本例中,拼图的起始位置也是固定的,节省了

python怎么使用OpenCV获取高动态范围成像HDRJun 02, 2023 pm 07:54 PM

python怎么使用OpenCV获取高动态范围成像HDRJun 02, 2023 pm 07:54 PM1背景1.1什么是高动态范围(HDR)成像?大多数数码相机和显示器将彩色图像捕获或显示为24位矩阵。每个颜色通道有8位,一共三个通道,因此每个通道的像素值在0到255之间。换句话说,普通相机或显示器具有有限的动态范围。然而,我们周围的世界颜色有一个非常大的变化范围。当灯关闭时,车库会变黑;太阳照射下,车库看起来变得非常明亮。即使不考虑这些极端情况,在日常情况下,8位也几乎不足以捕捉场景。因此,相机会尝试估计光线并自动设置曝光,以使图像中最有用的部分具有良好的动态颜色范围,而太暗和太亮的部分分别被

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

WebStorm Mac version

Useful JavaScript development tools

Dreamweaver CS6

Visual web development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

SublimeText3 Chinese version

Chinese version, very easy to use