The Internet has become an increasingly indispensable part of people's lives. Rich client technologies such as Ajax and Flex let people enjoy, in the browser, many functions that used to be possible only in client/server (C/S) applications. Google, for example, has moved even the most basic office applications onto the web. This is convenient, but it also makes pages slower and slower. I do front-end development. In terms of performance, according to Yahoo's survey, the back end accounts for only 5% of page load time, while the front end accounts for as much as 95%, of which 88% can be optimized.

The above is a life cycle diagram of a Web 2.0 page. Engineers vividly describe it as four stages: "pregnancy, birth, graduation, and marriage." If we keep this whole process in mind when we click a link, instead of thinking of it as a simple request and response, we can dig out many details that improve performance. Today I attended a talk by Xiaoma Ge from Taobao on the Yahoo development team's web performance research. I learned a lot and wanted to share it on my blog.

I believe many people have heard of the 14 rules for optimizing website performance. More information can be found at developer.yahoo.com.

1. Reduce the number of HTTP requests as much as possible [content]

2. Use a CDN (Content Delivery Network) [server]

3. Add an Expires header (or Cache-Control) [server]

4. Gzip components [server]

5. Place CSS styles at the top of the page [css]

6. Move scripts to the bottom (including inline) [javascript]

7. Avoid using expressions in CSS [css]

8. Separate JavaScript and CSS into external files [javascript] [css]

9. Reduce DNS lookups [content]

10. Minify JavaScript and CSS (including inline) [javascript] [css]

11. Avoid redirects [server]

12. Remove duplicate scripts [javascript]

13. Configure entity tags (ETags) [server]

14. Make Ajax cacheable [content]

There is a Firefox plug-in called YSlow, which is integrated into Firebug. You can use it to easily check how your website performs against these rules.

This is the result of evaluating my own website, Xifengfang, with YSlow. Unfortunately, it only scored 51, hehe. The major Chinese websites do not score high either: I just tested Sina and NetEase, and both scored 31. Yahoo (USA), on the other hand, scored 97! This shows how much effort Yahoo has put into this. Judging from the 14 rules they summarized and the 20 newly added points, there are many details we never think about at all, and some of the practices even seem a little obsessive.

Rule 1: Reduce the number of HTTP requests as much as possible (Make Fewer HTTP Requests)

HTTP requests are expensive, so reducing the number of requests naturally speeds up a page. Common methods include merging CSS files and merging JS files (so that a page loads one of each), image maps, and CSS sprites. Of course, CSS and JS are often split into multiple files for good reasons, such as code structure and sharing. The approach of Alibaba's Chinese site at the time was to develop the files separately and then merge the JS and CSS on the server. The browser still makes a single request, but the code remains split into multiple files during development, which makes it easier to manage and reuse. Yahoo even recommends writing the home page's CSS and JS directly into the page instead of referencing external files. Because the home page gets so many visits, this saves two more requests. In fact, many Chinese portals do this.
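The "develop separately, merge on the server" idea can be sketched roughly as follows. This is only a minimal illustration, not Alibaba's actual build step: the helper name `bundleSources` and the sample file names are hypothetical, and real tools do far more (minification, dependency ordering, and so on).

```javascript
// Hypothetical helper: merge several source files into one response body,
// so the browser makes a single request instead of one per file.
// `sources` maps a file name to its text content.
function bundleSources(sources, separator) {
  return Object.entries(sources)
    .map(([name, text]) => `/* ${name} */\n${text.trim()}`)
    .join(separator);
}

// For JavaScript, join with ";\n" so a file that lacks a trailing
// semicolon cannot run into the next one.
const js = bundleSources(
  { "menu.js": "var menu = {}", "search.js": "var search = {}" },
  ";\n"
);
console.log(js);
```

During development the files stay separate; only the merged output is what the page references.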

CSS sprites simply merge the background images used on a page into a single image, and then use the CSS background-position property to show the part each element needs. Taobao and Alibaba's Chinese site currently do this. If you are interested, take a look at their background images.

http://www.csssprites.com/ is a tool website that automatically merges the images you upload, gives you the corresponding background-position coordinates, and outputs the result in PNG and GIF formats.
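To make the background-position idea concrete, here is a small sketch that generates the CSS rules for a sprite. The icon names, sizes, and the file name `sprite.png` are all made up for illustration; a tool like the one above would give you the real coordinates.

```javascript
// Hypothetical sprite layout: icons stacked vertically in one image,
// each described by its pixel offset and size inside the sprite.
const icons = {
  home:   { x: 0, y: 0,  width: 16, height: 16 },
  search: { x: 0, y: 16, width: 16, height: 16 },
  cart:   { x: 0, y: 32, width: 16, height: 16 },
};

// background-position uses negative offsets: it shifts the sprite image
// up and left so the wanted icon lines up with the element's box.
function spriteRule(className, icon, spriteUrl) {
  const px = (n) => (n === 0 ? "0" : `-${n}px`);
  return (
    `.${className} { ` +
    `background: url(${spriteUrl}) no-repeat ${px(icon.x)} ${px(icon.y)}; ` +
    `width: ${icon.width}px; height: ${icon.height}px; }`
  );
}

for (const [name, icon] of Object.entries(icons)) {
  console.log(spriteRule(`icon-${name}`, icon, "sprite.png"));
}
```

All three icons share one image and therefore one HTTP request.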

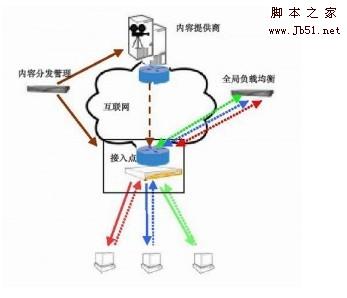

Rule 2: Use a CDN (Use a Content Delivery Network)

To be honest, I don't know much about CDNs. Put simply, a CDN adds a new layer of network architecture on top of the existing Internet and publishes a website's content to the cache servers closest to its users. DNS load balancing determines where a user is coming from and directs them to a nearby cache server to get the content they need. Users in Hangzhou get content from a server near Hangzhou, and users in Beijing get content from a server near Beijing. This effectively reduces the time data spends travelling over the network and speeds things up. For more detail, see the explanation of CDN on Baidu Encyclopedia. Yahoo! moved its static content to a CDN and reduced end-user response times by 20% or more.

CDN technology diagram:

CDN network diagram:

Rule 3: Add an Expires or Cache-Control header (Add an Expires Header)

More and more images, scripts, CSS, and Flash files are now embedded in pages, and visiting such a page inevitably triggers many HTTP requests. We can have the browser cache these files by setting the Expires header, which tells the browser how long a given type of file may be kept in its cache. Most images and Flash files rarely need to change after release. Once they are cached, the browser no longer downloads them from the server but reads them straight from the cache, so repeat visits to the page are much faster. A typical set of HTTP/1.1 response headers:

HTTP/1.1 200 OK

Date: Fri, 30 Oct 1998 13:19:41 GMT

Server: Apache/1.3.3 (Unix)

Cache-Control: max-age=3600, must-revalidate

Expires: Fri, 30 Oct 1998 14:19:41 GMT

Last-Modified: Mon, 29 Jun 1998 02:28:12 GMT

ETag: "3e86-410-3596fbbc"

Content-Length: 1040

Content-Type: text/html

This can be done by setting Cache-Control and Expires through server-side scripts.

For example, set expiration after 30 days in php:

It can also be done by configuring the web server itself. I am not very clear on the details, haha. Friends who want to know more can refer to http://www.web-caching.com/

As far as I know, Alibaba's Chinese site currently sets Expires to 30 days. This has caused problems along the way, though. The expiration time for scripts in particular needs careful thought; otherwise, after a script is updated, it may take a long time for clients to "notice" the change. I ran into this problem when I was working on the [suggest project]. So think carefully about what should be cached and what should not.
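As a rough illustration of a 30-day expiry, here is a sketch that computes the two headers discussed above. It is written in JavaScript rather than PHP, the function name `cacheHeaders` is my own, and the starting date is simply borrowed from the sample response earlier so the output is predictable.

```javascript
// Build far-future caching headers for a static file.
// `now` is passed in so the result is predictable; a server would use new Date().
function cacheHeaders(now, days) {
  const maxAgeSeconds = days * 24 * 60 * 60;
  const expires = new Date(now.getTime() + maxAgeSeconds * 1000);
  return {
    // Relative lifetime in seconds (HTTP/1.1).
    "Cache-Control": `max-age=${maxAgeSeconds}`,
    // Absolute expiry date; toUTCString() yields the HTTP date format.
    Expires: expires.toUTCString(),
  };
}

const headers = cacheHeaders(new Date(Date.UTC(1998, 9, 30, 13, 19, 41)), 30);
console.log(headers);
```

When both headers are present, HTTP/1.1 clients give Cache-Control: max-age priority over Expires. For scripts that change often, a shorter lifetime avoids the stale-script problem described above.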

Rule 4: Enable Gzip compression (Gzip Components)

The idea of Gzip is to compress a file on the server before sending it, which can significantly reduce the amount of data transferred. Once the transfer is complete, the browser decompresses the content and then processes it. All current browsers support gzip well. Not only browsers but also the major crawlers can handle it, so SEO folks can rest assured. Gzip also compresses text very effectively: reductions of roughly 70% or more are common, which means a 100K page on the server can shrink to around 25-30K before it is sent to the client. For how Gzip compression works, see the article "Gzip Compression Algorithm" on CSDN. Yahoo particularly emphasizes that all text content should be gzipped: html (php), js, css, xml, txt... Our website does well here and gets an A. Our home page used to fall short of an A because it carried a lot of JS from advertising code, and the advertisers' sites did not gzip their JS, which dragged our score down.

Most of the three rules above (2 to 4) concern the server side, and I only have a superficial understanding of them. Please correct me if I am wrong.
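To get a feel for the savings, here is a small experiment using Node.js's built-in zlib module. The sample markup is invented and deliberately repetitive, so it compresses better than a real page would; treat the numbers as an illustration, not a benchmark.

```javascript
const zlib = require("node:zlib");

// Repetitive markup, like most HTML, compresses very well.
const html = "<li class=\"item\">Hello, world</li>\n".repeat(200);

// Compress on the "server" side, then decompress as the browser would.
const original = Buffer.from(html, "utf8");
const compressed = zlib.gzipSync(original);
const restored = zlib.gunzipSync(compressed).toString("utf8");

const saved = 1 - compressed.length / original.length;
console.log(`original: ${original.length} bytes`);
console.log(`gzipped:  ${compressed.length} bytes`);
console.log(`saved:    ${(saved * 100).toFixed(1)}%`);
console.log(`round trip ok: ${restored === html}`);
```

In practice the web server does this for you once compression is enabled for text content types, and the browser announces support through the Accept-Encoding request header.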

Rule 5: Put CSS at the top of the page (Put Stylesheets at the Top)

Put CSS at the top of the page. Why? Because browsers such as IE will not render anything until all the CSS has arrived. The reason is as simple as Brother Ma said. CSS stands for Cascading Style Sheets. "Cascading" means that later rules can override earlier ones, and higher-priority rules can override lower-priority ones. I touched on this hierarchy briefly at the end of my [css !important] article; here we only need to know that CSS rules can be overridden. Since earlier rules can be overridden, it is reasonable for the browser to wait until the CSS is fully loaded before rendering. In many browsers, such as IE, putting the style sheet at the bottom of the page prevents progressive rendering: the browser blocks display to avoid redrawing page elements, and the user sees only a blank page. Firefox does not block display, but that means some elements may need to be repainted once the style sheet has downloaded, which causes flicker. So we should let the CSS load as early as possible.

Following this line of thought, if we look more closely there is still room for optimization. For example, this site includes two CSS files. From their media attributes you can see that the first is for display in the browser and the second is the print style sheet. Judging from how users behave, printing always happens after the page has been displayed. So a better approach is to add the print CSS to the page dynamically after the page has loaded, which can speed things up a little. (Haha)

Rule 6: Put scripts at the bottom of the page (Put Scripts at the Bottom)

Placing scripts at the bottom of the page serves two purposes.

1. It prevents script execution from blocking the rest of the page. While loading a page, when the browser reaches a script it executes all of it before reading the content that follows. If you don't believe it, write an infinite loop in JS and see whether anything below it shows up. (setTimeout and setInterval behave a little like multi-threading: rendering of the following content continues until their delay expires.) The browser does this because a script may at any moment set location.href or otherwise completely change the course of the page, so it has to wait for the script to finish before loading anything further. Placing scripts at the end of the page therefore reduces the time it takes for the visible elements to appear.

2. Scripts also block parallel downloads. The HTTP/1.1 specification recommends that a browser open no more than 2 parallel downloads per host (IE is limited to 2, other browsers such as Firefox default to 2, but the new IE8 can reach 6). So if you spread image files across multiple hosts, you can get more than 2 parallel downloads. While a script file is downloading, however, the browser does not start any other parallel downloads.

Of course, whether scripts can really be moved to the bottom of the page depends on the website. Take the pages of Alibaba's Chinese site: there is inline JS in many places, and the display of the page relies heavily on it. I admit this is far from the ideal of unobtrusive scripting, but many "historical problems" are not so easy to solve.

Rule 7: Avoid using expressions in CSS (Avoid CSS Expressions)

But this adds two more layers of meaningless nesting, which is definitely not good. A better way is needed.

Rule 8: Put JavaScript and CSS into external files (Make JavaScript and CSS External)

I think this one is easy to understand. It makes sense not only for performance but also for code maintenance. Writing CSS and JS directly into the page saves 2 requests, but it also increases the size of the page. With external files, once the CSS and JS have been cached there are no extra HTTP requests at all. Of course, as I said before, developers of some special pages will still choose to inline their CSS and JS.

Rule 9: Reduce DNS queries (Reduce DNS Lookups)

On the Internet, domain names map to IP addresses. A domain name (kuqin.com) is easy to remember, but computers do not recognize it; for computers to reach each other, the name has to be converted into an IP address. Each computer on the network is identified by its IP address. The conversion from a domain name to an IP address is called domain name resolution, also known as a DNS lookup. A DNS lookup typically takes 20-120 milliseconds, and until it completes the browser cannot download anything from that domain. Reducing the number of DNS lookups therefore speeds up page loading. Yahoo recommends limiting the number of domain names used on a page to 2-4, which requires planning the page as a whole. At present we are not doing well here, and the many advertising delivery systems are dragging us down.
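A quick way to see how many lookups a page may trigger is to count the distinct hostnames among its resources. The URLs below are placeholders I made up; in practice you would collect them from the page itself.

```javascript
// Hypothetical list of resources referenced by one page.
const resources = [
  "http://www.example.com/index.html",
  "http://img.example.com/logo.png",
  "http://img.example.com/banner.png",
  "http://static.example.com/site.css",
  "http://ads.example.net/show.js",
];

// Each distinct hostname costs the browser one DNS lookup (when not cached).
function uniqueHostnames(urls) {
  return [...new Set(urls.map((u) => new URL(u).hostname))];
}

const hosts = uniqueHostnames(resources);
console.log(`${hosts.length} hostnames:`, hosts);
```

Here five resources resolve to four hostnames, which is already at the upper end of the 2-4 range mentioned above, and one of them comes from a third-party ad system.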

Rule 10: Minify JavaScript and CSS (Minify JavaScript)

The effect of minifying JS and CSS is obvious: it reduces the number of bytes in the page, and smaller pages naturally load faster. Besides reducing size, minification also offers a degree of protection by making the code harder to read. We do this well. Commonly used tools include JSMin and YUI Compressor. In addition, http://dean.edwards.name/packer/ provides a very convenient online tool. You can see the size difference between the minified and unminified JS files on the jQuery website.

Of course, one disadvantage of minification is that the code is no longer readable. I believe many front-end friends have run into this: some effect on a Google page looks cool, but its source turns out to be a mass of characters crammed together, with even the function names replaced. Frustrating! Wouldn't it be very inconvenient to maintain your own code in that state? The approach currently used across Alibaba's Chinese site is to minify JS and CSS on the server at release time, so we can still maintain our own readable code conveniently.

Rule 11: Avoid redirects (Avoid Redirects)

Not long ago I read the article "Internet Explorer and Connection Limits" on IEBlog. As an example of a redirect: when you enter a URL without its trailing slash, such as http://www.kuqin.com, the server automatically answers with a 301 redirect to http://www.kuqin.com/. You can see this by watching the browser's address bar. This kind of redirection naturally takes time. Of course, this is just one example, and there are many reasons for redirects, but what stays the same is that every extra redirect adds another web request, so redirects should be kept to a minimum.

Rule 12: Remove duplicate scripts (Remove Duplicate Scripts)

This one goes without saying, not only for performance but also for clean code. But we have to admit that we often add code that may be duplicated simply to save time. Perhaps a unified CSS framework and JS framework would solve this better. Xiaozhu's point is right: code should not only avoid duplication, it should also be reusable.
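One simple safeguard is to filter the list of scripts a page asks for before emitting any script tags. The sketch below assumes the page's templates collect script URLs into an array; the function name and file paths are hypothetical.

```javascript
// Keep the first occurrence of each script URL and drop later repeats,
// preserving the original order so dependencies still load first.
function dedupeScripts(urls) {
  const seen = new Set();
  return urls.filter((url) => {
    if (seen.has(url)) return false;
    seen.add(url);
    return true;
  });
}

const requested = [
  "/js/base.js",
  "/js/menu.js",
  "/js/base.js",
  "/js/search.js",
  "/js/menu.js",
];
console.log(dedupeScripts(requested));
```

Five requested scripts become three, so each file is downloaded and executed only once.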

Rule 13: Configure entity tags (Configure ETags)

I don't understand this one well either, haha. I found a more detailed explanation on InfoQ, "Using ETags to Reduce Web Application Bandwidth and Load". Interested readers can check it out.
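As far as I understand it, the basic exchange looks like the sketch below: the server labels a response with an ETag, the browser sends that value back in If-None-Match on the next request, and the server answers 304 Not Modified if nothing changed. Deriving the ETag from a hash of the body is my own assumption for the example; real servers build ETags in different ways, and this sketch ignores details such as weak validators and multiple values in If-None-Match.

```javascript
const crypto = require("node:crypto");

// Derive a validator from the body: same content, same ETag.
function makeETag(body) {
  const hash = crypto.createHash("sha1").update(body).digest("hex");
  return `"${hash}"`; // ETag values are quoted strings
}

// Decide how to answer a request, given the If-None-Match value the
// browser sent (undefined on a first visit).
function respond(body, ifNoneMatch) {
  const etag = makeETag(body);
  if (ifNoneMatch === etag) {
    // Content unchanged: send no body, the browser reuses its cached copy.
    return { status: 304, headers: { ETag: etag }, body: "" };
  }
  return { status: 200, headers: { ETag: etag }, body };
}

const page = "<html>hello</html>";
const first = respond(page, undefined);
const second = respond(page, first.headers.ETag);
const changed = respond(page + "<!-- edited -->", first.headers.ETag);
console.log(first.status, second.status, changed.status);
```

The 304 response still costs a request, but it saves transferring the body again.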

Rule 14: Enable Ajax caching (Make Ajax Cacheable)

Does Ajax need to be cached? When making an Ajax request, we often add a timestamp precisely to avoid caching. But it is important to remember that "asynchronous" does not imply "instantaneous". Even if Ajax responses are generated dynamically and only apply to one user, they can still be cached.
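One way to keep Ajax responses cacheable without serving stale data is to change what the timestamp means: instead of the time of the request, use the time the data last changed. The sketch below only builds the URLs; the endpoint `/addressbook` and the timestamp values are hypothetical.

```javascript
// Append a token to an Ajax URL. What the token is decides cacheability.
function withToken(baseUrl, token) {
  return `${baseUrl}?t=${encodeURIComponent(String(token))}`;
}

// Cache-busting: the token is the time of the request, so every call
// produces a new URL and the browser can never reuse an old response.
const bustA = withToken("/addressbook", 1700000000000);
const bustB = withToken("/addressbook", 1700000005000);

// Cache-friendly: the token is the time the data last changed (a
// hypothetical value here). Until the data changes, every request uses
// the same URL and can be answered from the browser cache.
const lastModified = 1190241612;
const cachedA = withToken("/addressbook", lastModified);
const cachedB = withToken("/addressbook", lastModified);

console.log(bustA === bustB);     // false: two different URLs
console.log(cachedA === cachedB); // true: one URL, cacheable
```

For this to pay off, the server also has to send suitable Expires or Cache-Control headers on the Ajax response, as in Rule 3.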

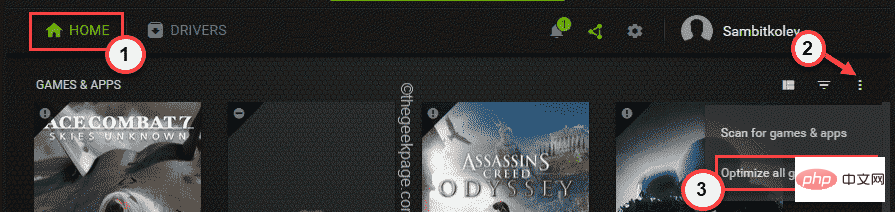
