OpenAI's latest language model, GPT-3.5 Turbo, represents a major leap forward in large language model capabilities. Built on the GPT-3 family of models, GPT-3.5 Turbo can generate remarkably human-like text while being more affordable and accessible than previous versions. However, the true power of GPT-3.5 Turbo lies in its ability to be customized through a process called fine-tuning.

Fine-tuning allows developers to bring their own data to adapt the model to specific use cases and significantly boost performance on specialized tasks. OpenAI reports that, in early tests, a fine-tuned GPT-3.5 Turbo matched or even exceeded base GPT-4 on certain narrow tasks.

This new level of customization lets businesses and developers deploy GPT-3.5 Turbo in tailored, high-performing AI applications. With fine-tuning now available for GPT-3.5 Turbo, and coming to the even more powerful GPT-4 later this year, we stand at the cusp of a new era in applied AI.

Why Fine-Tune Language Models?

Fine-tuning has become a crucial technique for getting the most out of large language models like GPT-3.5 Turbo. (We also have a separate guide on fine-tuning GPT-3.)

While pre-trained models can generate remarkably human-like text out of the box, their true capabilities are unlocked through fine-tuning. The process allows developers to customize the model by training it on domain-specific data, adapting it to specialized use cases beyond what general-purpose training can achieve. Fine-tuning improves the model's relevance, accuracy, and performance for niche applications.

Customization for specific use cases

Fine-tuning allows developers to customize the model to create unique and differentiated experiences, catering to specific requirements and domains. Trained on domain-specific data, the model can generate more relevant and accurate outputs for that niche. This level of customization enables businesses to build tailored AI applications.

Improved steerability and reliability

Fine-tuning improves the model's ability to follow instructions and produce reliable, consistent output formatting. Through training on formatted data, the model learns the desired structure and style, improving steerability. This results in more predictable and controllable outputs.

Enhanced performance

Fine-tuning can enhance model performance significantly; OpenAI reports that a fine-tuned GPT-3.5 Turbo can match or exceed base GPT-4 on certain specialized tasks. Optimized for a narrow domain, the model can outperform a generalist model in that niche problem space.

Check out our guide on 12 GPT-4 Open-Source Alternatives, which explores some of the tools that can offer similar performance and require fewer computational resources to run.

Impact of Fine-Tuning GPT-3.5 Turbo

During OpenAI's beta testing, customers who fine-tuned the model saw notable performance improvements across common use cases. Here are some key takeaways:

1. Enhanced directability

Through fine-tuning, companies can better guide the model to follow specific instructions. For example, if a company wants succinct responses or needs the model to always reply in a specific language, fine-tuning can help achieve that: developers can fine-tune the model so that it always responds in German when prompted to use that language.

2. Consistent response structuring

One of the standout benefits of fine-tuning is its ability to make the model's outputs more uniform. This is especially valuable for tasks that require a particular response structure, like code completion or composing API calls. For instance, with fine-tuning, developers can rely on the model to convert user prompts into high-quality JSON snippets that work with their own systems.

3. Personalized tone

Fine-tuning can be employed to align the model's responses more closely with a company’s unique voice or style. Companies with a distinct brand voice can leverage this feature to ensure the model's tone matches their brand's essence.
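To make the formatting benefit concrete, here is a minimal sketch of one training example that teaches the model to answer with JSON. It uses the chat `messages` format that GPT-3.5 Turbo fine-tuning expects; the system prompt, the order fields, and the `make_json_example` helper are hypothetical and exist only for illustration.

```python
import json

# Hypothetical system prompt and order schema, for illustration only.
SYSTEM_PROMPT = "Convert the user's request into a JSON order object."


def make_json_example(user_text: str, order: dict) -> dict:
    """Build one chat-format training example whose assistant reply is JSON."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_text},
            # The target reply is serialized JSON, so the model learns the exact structure.
            {"role": "assistant", "content": json.dumps(order, sort_keys=True)},
        ]
    }


example = make_json_example(
    "Two large coffees to go, please",
    {"item": "coffee", "quantity": 2, "size": "large", "takeaway": True},
)
line = json.dumps(example)  # one line of a JSONL training file
print(line)
```

The same shape covers the other two benefits: for a fixed language or brand voice, keep the system and user turns and write the assistant turn in German or in your house style instead of JSON. Serializing the target with `json.dumps` guarantees that every assistant reply in the training set is valid JSON, so the model never learns from a malformed target.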

Prerequisites for Fine-tuning

Fine-tuning allows customizing a pre-trained language model like GPT-3.5 Turbo by continuing the training process on your own data. This adapts the model to your specific use case and can significantly improve its performance on it.

To start fine-tuning, you first need access to the OpenAI API. After signing up on the OpenAI website, you can obtain an API key which enables you to interact with the API and models.

Next, you need to prepare a dataset for fine-tuning. This involves curating example prompts together with the responses you want the model to give. The examples should resemble the inputs your application will actually send to the model. Cleaning the data and formatting it into the required JSONL structure is also important.

The OpenAI CLI provides useful tools to validate and preprocess your training data.
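Whatever tool you use, it helps to catch structural problems before uploading. The following is a minimal, hypothetical checker (not OpenAI's official validator) for chat-format JSONL like the `train.jsonl` file built later in this tutorial. It assumes every message uses one of the `system`, `user`, or `assistant` roles and only checks basic structure, not token counts or content policy.

```python
import json

# Roles assumed for this tutorial's data; function-calling roles are not handled.
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_chat_jsonl(lines):
    """Return (line_number, problem) tuples; an empty list means no problems were found."""
    problems = []
    for i, raw in enumerate(lines, start=1):
        if not raw.strip():
            problems.append((i, "blank line"))
            continue
        try:
            record = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append((i, f"invalid JSON: {exc.msg}"))
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((i, "missing or empty 'messages' list"))
            continue
        for msg in messages:
            if not isinstance(msg, dict) or msg.get("role") not in ALLOWED_ROLES:
                problems.append((i, "message with missing or unknown role"))
                break
            if not isinstance(msg.get("content"), str):
                problems.append((i, "message content is not a string"))
                break
        else:
            # Runs only if every message passed: each example needs a target reply to learn from.
            if not any(msg["role"] == "assistant" for msg in messages):
                problems.append((i, "no assistant message"))
    return problems


# Small in-memory demo: one valid line, one without an assistant reply, one malformed.
sample = [
    '{"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello"}]}',
    '{"messages": [{"role": "user", "content": "Hi"}]}',
    "not json",
]
for line_no, problem in validate_chat_jsonl(sample):
    print(f"line {line_no}: {problem}")
```

To check a real file, pass an open file handle, for example `validate_chat_jsonl(open("train.jsonl", encoding="utf-8"))`; an empty list means no structural problems were found.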

Once validated, you can upload the data to OpenAI servers.
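If you prefer code to the web UI, the upload can be done with the official `openai` Python package. This sketch assumes openai>=1.0, where training files are uploaded with `client.files.create(..., purpose="fine-tune")`; the `upload_training_file` wrapper is a hypothetical helper that takes the client as an argument so it is easy to test.

```python
from pathlib import Path


def upload_training_file(client, path="train.jsonl"):
    """Upload a JSONL training file and return the file ID OpenAI assigns to it.

    `client` is assumed to be an `openai.OpenAI()` instance (openai>=1.0).
    """
    with Path(path).open("rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    return uploaded.id
```

In practice you would call it as `upload_training_file(OpenAI())` after `from openai import OpenAI`; the client reads your key from the `OPENAI_API_KEY` environment variable.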

Finally, you initiate a fine-tuning job through the API, selecting the base GPT-3.5 Turbo model and passing your training data file. The fine-tuning process can take hours or days, depending on the data size. You can monitor training progress through the API.
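Starting and monitoring the job can be scripted the same way. This is a minimal sketch assuming openai>=1.0 (`client.fine_tuning.jobs.create` and `client.fine_tuning.jobs.retrieve`); the `run_fine_tuning_job` helper, the 60-second polling interval, and the default base model (`gpt-3.5-turbo-0613`, the version available at the time of writing) are assumptions you may need to adjust.

```python
import time

# Job statuses after which OpenAI will not change the job any further.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def run_fine_tuning_job(client, training_file_id, model="gpt-3.5-turbo-0613", poll_seconds=60):
    """Start a fine-tuning job and block until it reaches a terminal status.

    `client` is assumed to be an `openai.OpenAI()` instance (openai>=1.0).
    Available base model names change over time; check OpenAI's docs.
    """
    job = client.fine_tuning.jobs.create(training_file=training_file_id, model=model)
    while job.status not in TERMINAL_STATUSES:
        time.sleep(poll_seconds)
        job = client.fine_tuning.jobs.retrieve(job.id)
        print(f"{job.id}: {job.status}")
    return job
```

Polling once a minute is a conservative choice, since jobs can run for hours. On success, the returned job's `fine_tuned_model` attribute holds the model name to use for inference.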

How to Fine-Tune OpenAI's GPT-3.5 Turbo Model: A Step-By-Step Guide

OpenAI has recently released a UI for fine-tuning language models. In this tutorial, I will use the OpenAI UI to create a fine-tuned GPT model. To follow along with this part, you need an OpenAI account and API key.

1. Log in to platform.openai.com

2. Prepare your data

For demonstration, I curated a small dataset of questions and answers, stored in a CSV file that I load into a pandas DataFrame.

To demonstrate, I created 50 machine learning questions with answers written in Shakespearean style. Through this fine-tuning job, I am personalizing the style and tone of the GPT-3.5 Turbo model.

This isn't a very practical use case: GPT-3.5 already knows Shakespeare well, so simply adding “Answer in Shakespeare style” to the prompt would produce answers in the required tone.

OpenAI requires the training data in JSONL format, in which each line is a standalone, valid JSON object. I wrote a short script to convert the pd.DataFrame into JSONL:

```python
import json

import pandas as pd

DEFAULT_SYSTEM_PROMPT = 'You are a teaching assistant for Machine Learning. You should help the user to answer his question.'


def create_dataset(question, answer):
    """Wrap one question/answer pair in the chat format used for fine-tuning."""
    return {
        "messages": [
            {"role": "system", "content": DEFAULT_SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


if __name__ == "__main__":
    # cp1252 matches how this CSV was saved; use your own file's encoding.
    df = pd.read_csv("path/to/file.csv", encoding='cp1252')

    # JSONL: write one JSON object per line.
    with open("train.jsonl", "w", encoding="utf-8") as f:
        for _, row in df.iterrows():
            example_str = json.dumps(create_dataset(row["Question"], row["Answer"]))
            f.write(example_str + "\n")
```

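Each line of the resulting train.jsonl file holds one complete example: a JSON object whose `messages` list contains the system, user, and assistant turns.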

3. Create the fine-tuning job

Go to platform.openai.com, navigate to Fine-tuning in the top menu, and click Create New.

Select the base model. At the time of writing, only three models are available for fine-tuning: babbage-002, davinci-002, and gpt-3.5-turbo-0613.

Next, upload the JSONL file, give the job a name, and click Create.

The tuning job may take several hours or even days, depending on the size of the dataset. In my example, the dataset had only 5,500 tokens, and fine-tuning still took well over 6 hours. The cost of this job was insignificant (

This tutorial shows how you can use the UI to fine-tune GPT models. If you would like to learn how to achieve the same thing using the API, check out the Fine-Tuning GPT-3 Using the OpenAI API and Python tutorial by Zoumana Keita on DataCamp.

4. Using the fine-tuned model

Once the tuning job completes, you can use the fine-tuned model through the API or in the Playground on platform.openai.com.

Notice that the Model dropdown now includes your own fine-tuned gpt-3.5-turbo model. Let’s give it a try.

Notice the tone and style of the responses.
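Outside the Playground, the fine-tuned model is called like any other chat model, using its `ft:` model name. The sketch below assumes openai>=1.0 (`client.chat.completions.create`); the model name is a hypothetical placeholder that you should replace with the one shown on your Fine-tuning page, and `ask` is an illustrative helper.

```python
# Reuse the system prompt from training so inputs match what the model saw.
DEFAULT_SYSTEM_PROMPT = 'You are a teaching assistant for Machine Learning. You should help the user to answer his question.'

# Hypothetical placeholder: copy your real model name from the Fine-tuning page.
FINE_TUNED_MODEL = "ft:gpt-3.5-turbo-0613:your-org::abc123"


def ask(client, question, model=FINE_TUNED_MODEL):
    """Send one question to the fine-tuned model and return the reply text.

    `client` is assumed to be an `openai.OpenAI()` instance (openai>=1.0).
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": DEFAULT_SYSTEM_PROMPT},
            {"role": "user", "content": question},
        ],
    )
    return response.choices[0].message.content
```

Sending the same system prompt used in training keeps the inputs consistent with what the model saw during fine-tuning.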

If you want to learn how to work with the OpenAI Python package to programmatically have conversations with ChatGPT, check out the Using GPT-3.5 and GPT-4 via the OpenAI API in Python tutorial on DataCamp.

Safety and Privacy

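OpenAI takes safety seriously and follows rigorous processes before releasing new models, including testing, expert feedback, techniques to improve model behavior, and monitoring systems. It aims to make powerful AI systems beneficial and to minimize foreseeable risks.

Fine-tuning allows customizing models like GPT-3.5 Turbo while preserving important safety features: OpenAI passes fine-tuning training data through its Moderation API and a GPT-4-powered moderation system to detect unsafe training data. More broadly, OpenAI applies interventions at multiple levels (measurements, model changes, policies, and monitoring) to mitigate risks and align models.

OpenAI removes personal information from its own training data where feasible and has policies against generating content that contains private individuals' information. In addition, OpenAI states that data sent to and from the fine-tuning API is owned by the customer and is not used to train other models. Together, these measures reduce privacy risks.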

For common use cases without sensitive data, OpenAI models can be safely leveraged. But for proprietary or regulated data, options like data obfuscation, private AI processors, or in-house models may be preferable.

Cost of fine-tuning GPT-3.5 Turbo

There are three costs associated with fine-tuning and using a fine-tuned GPT-3.5 Turbo model:

- Training data preparation. This involves curating a dataset of text prompts and desired responses tailored to your specific use case. The cost will depend on the time and effort required to source and format the data.

- Initial training cost. This is charged per token of training data, for every epoch the job trains. At $0.008 per 1,000 tokens, a 100,000-token training set costs $0.80 per epoch, or $2.40 for a job trained for 3 epochs.

- Ongoing usage costs. These are charged per token for both input prompts and model outputs. At $0.012 per 1,000 input tokens and $0.016 per 1,000 output tokens, costs can add up quickly depending on application usage.
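Because training tokens are billed once per epoch (OpenAI's own example: a 100,000-token file trained for 3 epochs has an expected cost of $2.40), the training estimate is a simple product. A minimal sketch, assuming the $0.008 per 1K tokens price quoted above; the epoch count in the example is an assumption, since the actual number depends on your job settings.

```python
TRAINING_PRICE_PER_1K = 0.008  # USD per 1K training tokens, as quoted above


def training_cost(training_tokens, epochs):
    """Estimated fine-tuning cost: tokens in the file x epochs x price per token."""
    return training_tokens / 1000 * TRAINING_PRICE_PER_1K * epochs


print(f"${training_cost(100_000, 3):.2f}")  # prints $2.40
```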

Let’s look at two example usage-cost scenarios:

- Chatbot with 4,000-token prompts and 4,000-token responses, 1,000 interactions per day:

(4,000/1,000) input tokens × $0.012 × 1,000 interactions = $48 per day

(4,000/1,000) output tokens × $0.016 × 1,000 interactions = $64 per day

Total = $112 per day, or $3,360 per 30-day month

- Text summarization API with 2,000-token inputs and 2,000-token outputs, 500 requests per day:

(2,000/1,000) input tokens × $0.012 × 500 requests = $12 per day

(2,000/1,000) output tokens × $0.016 × 500 requests = $16 per day

Total = $28 per day, or $840 per 30-day month

Note: token counts are divided by 1,000 because OpenAI quotes prices per 1K tokens.
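The scenario arithmetic above generalizes to a small calculator. This sketch hard-codes the $0.012 and $0.016 per 1K-token prices quoted above (prices change, so check OpenAI's current pricing) and assumes a 30-day month; it reproduces both scenarios.

```python
INPUT_PRICE_PER_1K = 0.012   # USD per 1K input tokens, as quoted above
OUTPUT_PRICE_PER_1K = 0.016  # USD per 1K output tokens, as quoted above


def daily_usage_cost(input_tokens, output_tokens, requests_per_day):
    """Daily cost for a fixed number of requests with the given per-request token counts."""
    per_request = (input_tokens / 1000) * INPUT_PRICE_PER_1K + (output_tokens / 1000) * OUTPUT_PRICE_PER_1K
    return per_request * requests_per_day


for name, inp, out, reqs in [
    ("Chatbot", 4000, 4000, 1000),
    ("Summarization API", 2000, 2000, 500),
]:
    daily = daily_usage_cost(inp, out, reqs)
    print(f"{name}: ${daily:,.2f} per day, ${daily * 30:,.2f} per 30-day month")
```

Keeping input and output prices separate matters because output tokens cost more, so response-heavy workloads grow faster than prompt-heavy ones.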

Learn how to use ChatGPT in a real-life end-to-end data science project. Check out A Guide to Using ChatGPT For Data Science Projects to learn how to use ChatGPT for project planning, data analysis, data preprocessing, model selection, hyperparameter tuning, developing a web app, and deploying it on Hugging Face Spaces.

Conclusion

As we explore the frontier of large language model capabilities, GPT-3.5 Turbo stands out not only for its human-like text generation but also for the potential unlocked by fine-tuning. Fine-tuning lets developers adapt the model to niche applications, where, according to OpenAI's early tests, it can match or even surpass base GPT-4 on certain narrow tasks.

The enhancements in directability, response structuring, and tone personalization are evident in applications fine-tuned to match distinct requirements, thereby allowing businesses to bring forth unique AI-driven experiences. However, with great power comes significant responsibility. It's crucial to understand the associated costs and be mindful of safety and privacy considerations when implementing Generative AI and language models.

Gain access to 60 ChatGPT prompts for data science tasks with the ChatGPT Cheat Sheet for Data Science.


I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AM

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AMRevolutionizing App Development: A Deep Dive into Replit Agent Tired of wrestling with complex development environments and obscure configuration files? Replit Agent aims to simplify the process of transforming ideas into functional apps. This AI-p

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

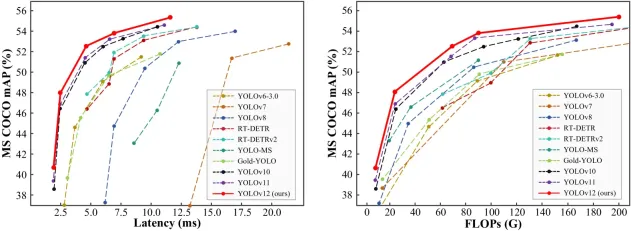

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

5 Grok 3 Prompts that Can Make Your Work EasyMar 04, 2025 am 10:54 AM

5 Grok 3 Prompts that Can Make Your Work EasyMar 04, 2025 am 10:54 AMGrok 3 – Elon Musk and xAi’s latest AI model is the talk of the town these days. From Andrej Karpathy to tech influencers, everyone is talking about the capabilities of this new model. Initially, access was limited to

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Dreamweaver Mac version

Visual web development tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.