QwQ-32B

Introduction: Open-source reasoning model for complex tasks with 32B parameters.

Added on: Mar-26, 2025

Monthly Visitors: 0

Product Information

What is QwQ-32B?

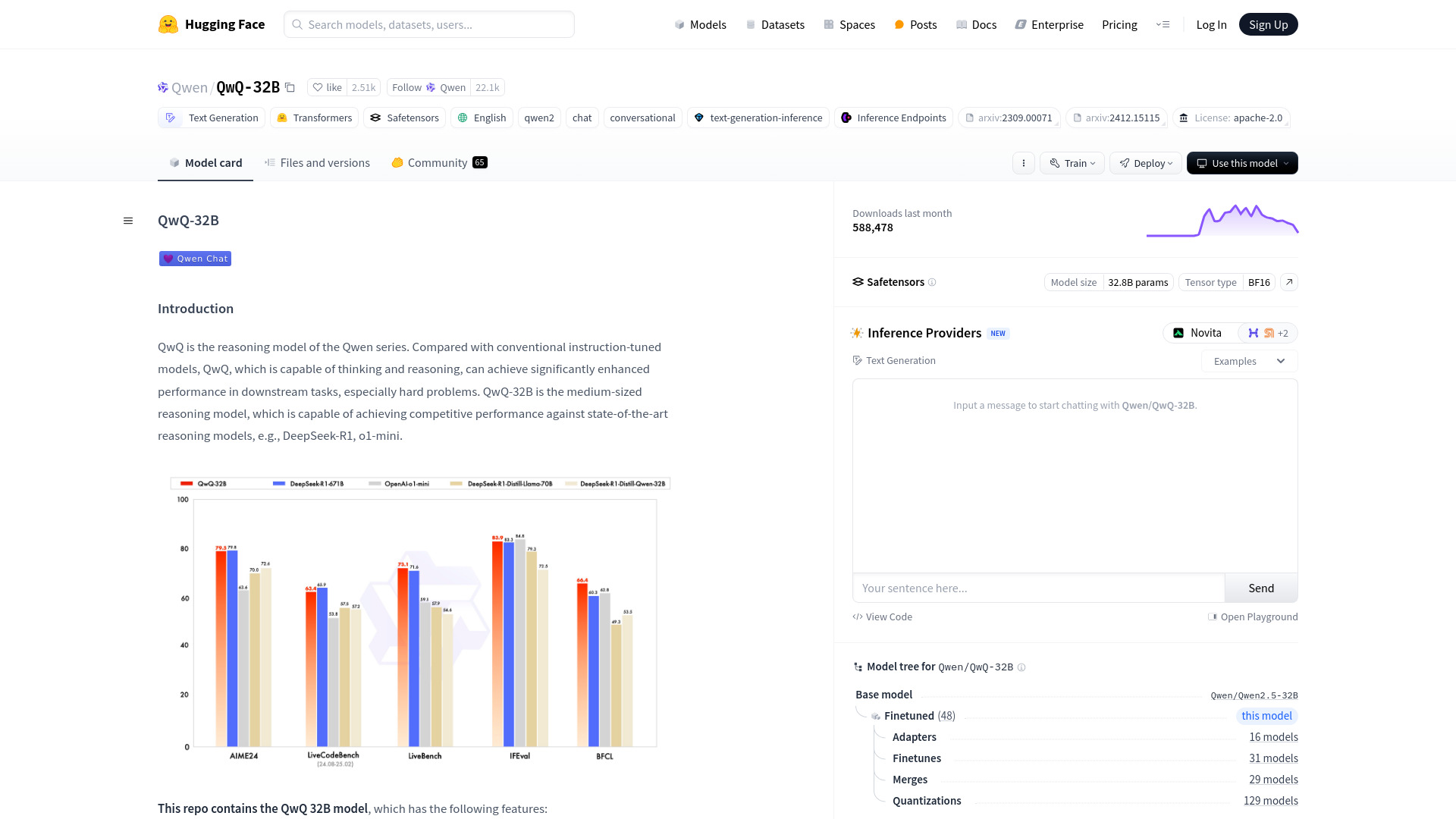

QwQ-32B, developed by Alibaba's Qwen team, is an open-source language model with 32 billion parameters, designed for deep reasoning. It is trained with reinforcement learning, which lets it reason through a problem before answering and improves its performance on complex tasks compared with conventional instruction-tuned models.

How to use QwQ-32B?

To use QwQ-32B, load the model with the Hugging Face transformers library, pass in your prompt, and generate a response.
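The load, prompt, and generate steps might look like the following minimal sketch. It assumes the model is published on the Hugging Face Hub as `Qwen/QwQ-32B`, that `transformers`, `torch`, and `accelerate` are installed, and that enough GPU memory is available for a 32B model. The helper names `build_messages` and `generate_response` are hypothetical and exist only for this illustration.

```python
from typing import Dict, List

# Assumed Hugging Face Hub repository name; verify it before use.
MODEL_ID = "Qwen/QwQ-32B"


def build_messages(prompt: str) -> List[Dict[str, str]]:
    """Wrap a plain prompt in the chat-message format expected by chat templates."""
    return [{"role": "user", "content": prompt}]


def generate_response(prompt: str, max_new_tokens: int = 512) -> str:
    """Load the model, apply its chat template, and return the generated text."""
    # Imported lazily so build_messages can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",  # use the dtype stored in the checkpoint
        device_map="auto",   # spread layers across available devices (needs accelerate)
    )

    # Render the conversation into the prompt string the model was trained on.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_response("How many prime numbers are there below 30?")` would download the weights on first use. Reasoning models tend to produce long outputs, so `max_new_tokens` may need to be raised well above the default chosen here.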

QwQ-32B's Core Features

Open-source

32 billion parameters

Deep reasoning capabilities

Outputs step-by-step reasoning

QwQ-32B's Use Cases

Text generation for complex reasoning tasks

Generating answers to math problems with step-by-step reasoning
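For step-by-step use cases such as these, it can help to separate the model's reasoning from its final answer. The sketch below assumes the generated text ends its reasoning with a `</think>` tag, possibly preceded by an opening `<think>` tag; check this against the output you actually receive. The function name `split_reasoning` is hypothetical.

```python
from typing import Tuple

# Assumed delimiters around the reasoning portion of the output.
THINK_START = "<think>"
THINK_END = "</think>"


def split_reasoning(output: str) -> Tuple[str, str]:
    """Return (reasoning, answer) from a generated string.

    If no closing delimiter is found, the whole output is treated as the answer.
    """
    reasoning, sep, answer = output.partition(THINK_END)
    if not sep:
        return "", output.strip()
    # The opening tag may be missing if it was part of the prompt template.
    reasoning = reasoning.replace(THINK_START, "", 1).strip()
    return reasoning, answer.strip()
```

For a math prompt, this allows the reasoning to be logged or hidden while only the final answer is shown to the user.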
